One of the most crucial aspects of machine learning is understanding the mathematics & statistics behind it. In my journey to becoming a data scientist, I wanted to master not only the theoretical aspects of math & stats but also understand how I could apply them to my area of work.

There is an ever-increasing number of machine learning algorithms, and this post is going to focus on one of my favorites — the Naive Bayes algorithm. Specifically, I’m going to break this exploration into two parts—the first part is going to broadly cover the Naive Bayes algorithm and how it can be applied in text classification. And the second part of it is going to focus on building a REST API from the model we create in Part I. So stay tuned and enjoy!

The objectives of this blog post are to:

- Understand Bayesian methods and how they work.

- Perform text classification with the Naive Bayes algorithm.

- Evaluate the model’s performance.

What are Bayesian Methods?

Since the algorithm we’re going to use in this post is Naive Bayes, it makes sense to talk about the Bayesian methods underlying the algorithm itself.

Bayesian methods are methods of statistical inference that establish the fact that an individual’s prior beliefs or opinions about an event may be updated/changed given the presence of new evidence/data.

Bayesian statistics provide a firm mathematical procedure for putting together prior opinions and new evidence to produce new posterior opinions. This is in sharp contrast to frequentist statistics, which deals with the fact that probabilities are a measure of frequencies of random events in a repeated number of trials.

Now that we’ve got a basic introduction to Bayesian statistics, let’s see how we can use this to derive the popular Bayes’ theorem. If you already know how to do this, feel free to skip this part.

Bayes’ Theorem

Bayes’ theorem is a powerful theorem that’s changed the way we think about probabilities. As complex as it may sound, it’s simply based on conditional probabilities.

From conditional probability, we know that the probability of A given B, mathematically denoted by P(A|B), is equal to the probability of A’s intersection with B divided by the probability of B:

Similarly:

From equation 1 we can derive:

Similarly, from equation 2 we can derive:

Since P(A n B) = P(B n A), we can equate equation 3 & equation 4 and arrange the final equation to yield the Bayes’ Theorem, which is:

Now that we’ve looked at the underlying Bayes’ Theorem, let’s briefly discuss how this works in the Naive Bayes algorithm specifically.

At this point, you might be asking, why the name Naive Bayes? What actually makes Naive Bayes…Naive? A Naive Bayes classifier works by asking “Given a set of features, which class does a measurement belong to—say, class 1 or class 0?”

Naive Bayes is naive because it assumes that features in a given measurement are independent, which is in reality never the case. This assumption is strong but extremely useful. It’s what makes this model work well with little data or data that may be mislabeled. Additional advantages of the Naive Bayes algorithm are that it’s very fast and scalable.

From the Bayes’ Theorem, we can replace A and B with X and Y, representing the feature matrix and the response vector respectively, and this would yield:

Which can be re-arranged and extended to:

This can be interpreted as the probability of predicting a target with class k given feature matrix X, and is given by the probability of predicting feature matrix X given a certain class of y times the probability of belonging to a certain class k.

And of course, we know in real life there would be multiple features, so we can extend the Bayes’ Theorem “naively”, assuming the features are independent, into:

The above should serve as enough of an intro to the Naive Bayes algorithm for us to get started. Now it’s time to work with our hands-on example.

Text Classification with Naive Bayes

In order to understand how perform text classification using the Naive Bayes algorithm, we need to first define the dataset we’re going to use and import it.

The dataset we’re going to use is the 20 newsgroups dataset (download link). The dataset comprises around 18,000 newsgroups posts on 20 topics split in two subsets: one for training (or development) and the other one for testing (or for performance evaluation).

The split between the train and test set is based upon messages posted before and after a specific date. Alternatively, one can download and extract the files in Scikit-learn using:

In this tutorial, we’re going to focus on five categories in our target variable, namely alt.atheism, soc.religion.christian, talks.politics.misc, comp.graphics, and sci.med, which we specify in the train and test sets as shown below:

In order to access the target names of our training data, we can print it out in a cell of a Jupyter notebook by print(news_train[‘target_names’]). Similarly we can view the contents of the training data by print(news_train) — which essentially is a dictionary of texts.

Data Preparation & Modeling

Now we need to cover some important concepts in the text classification process. We are going to use a special class from sklearn.feature_extraction.text called CountVectorizer. So the idea is that, given that we have a text string, we can assign unique numbers to each of the words in the text.

After assigning the unique numbers, we count the occurrence of these numbers. This concept of assigning unique numbers to each of the words in a text is called tokenization. Let’s take a look at an example:

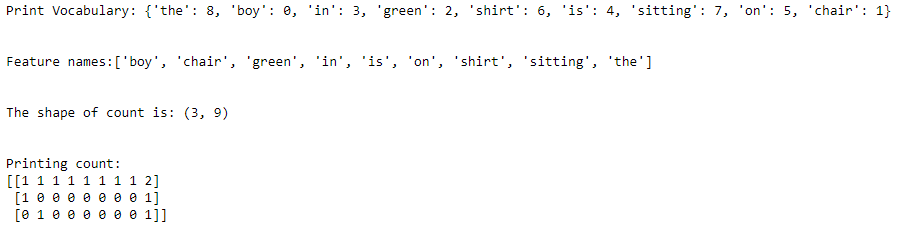

So the features in the vocabulary dictionary are as shown below:

We can implement this in Scikit-learn with the code snippet below:

Do take note, however, that in order to use CountVectorizer, we first have to import the class, create an instance of the class which we call vector, and call the fit method to learn the vocabulary in the raw document. The transform method counts the occurrences of each word: basically in ML terms, we say “encoding documents”. The results of the snippet is the vocabulary dictionary, feature names, and shape and the count of features.

This looks promising. However, one thing to note that you might have figured out is that words like “the” tend to appear often in almost all text documents. As such, the count of the word “the” doesn’t necessarily mean that it’s important in classification.

There are ways to handle this:

- Removing what we call stop words (commonly occurring words in English language like articles, prepositions, etc) and/or

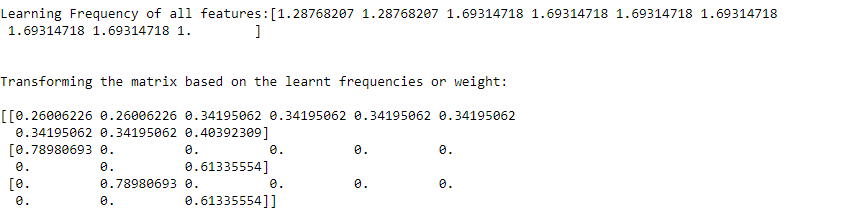

- Introducing a concept called Term-Frequency Inverse Document Frequency (TF-IDF). In summary, TF-IDF first counts the number of times a given word appears in a document and then gives a weight to the word, which delineates its importance in classification. So let’s see how that’s done in Python:

Again, we import the TfidfTransformer, create an instance of the class, and call the fit method — passing in as argument the array of counts returned by CountVectorizer in the previous block—and finally, we use the transform method to assign relevant weights to these features, as printed below:

Now that we have a firm understanding of CountVectorizer and TF-IDF, we can repeat the same steps for our 20 news group dataset.

Now that the respective transformation and assignment of weights has been done, it’s now time to actually build the Naive Bayes model :).

We do this by importing MultiNomialNB from sklearn.naive_bayes to fit the model to the training set:

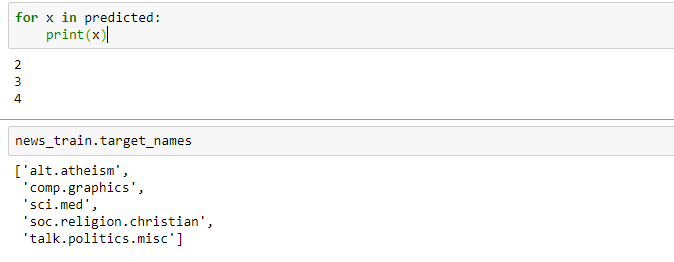

We can create our own sentences to test the predictions of the classifier:

As we can see it performs really well, classifying the documents in docs_new into their respective targets.

Performance of the Model

It’s now time to check the performance of the model on the test data.

This outputs an accuracy of about 80%, which is a very impressive performance level to start with. Feel free to add some hyperparmeter tuning to the model to boost its performance even more.

I hope you enjoyed the ride in part one of this series. Part two is going to focus on building a REST API for the model we just built. Thanks for reading. Stay tuned! Cheers!

Comments 0 Responses