
What is machine learning?

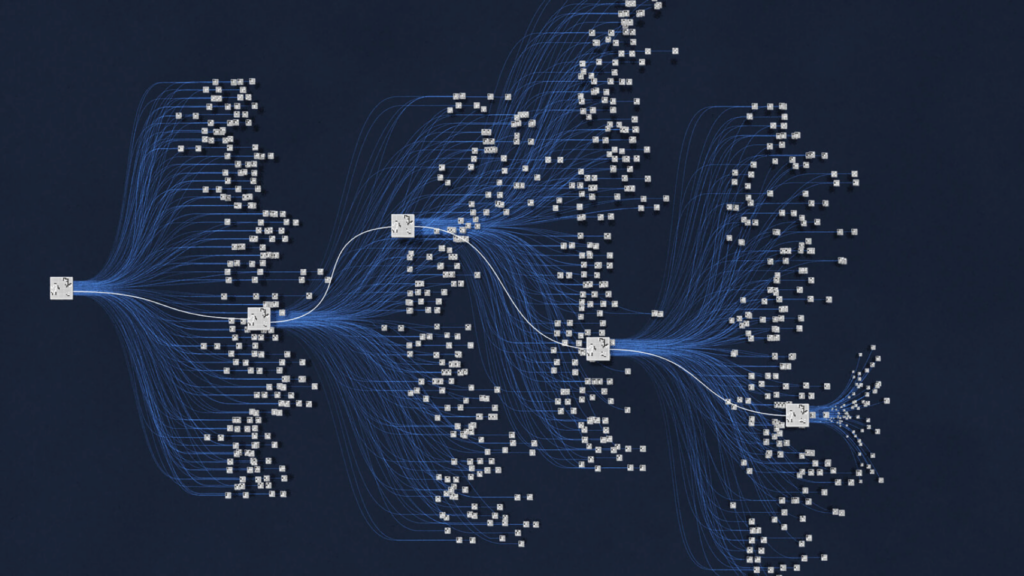

Training Data (generates)=> Patterns

Input => Patterns => Prediction
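Here is a deliberately tiny Swift sketch of the two lines above. It is not how Core ML works internally. It fits a straight line to made-up training data using ordinary least squares (the "pattern"), then uses that line to predict the output for a new input. The function names `fitLine` and `predict` and the sample numbers are illustrative assumptions.

```swift
/// "Training": learn a pattern (slope and intercept of a line) from example pairs
/// using ordinary least squares.
func fitLine(xs: [Double], ys: [Double]) -> (slope: Double, intercept: Double) {
    precondition(xs.count == ys.count && xs.count >= 2, "need at least two paired points")
    let n = Double(xs.count)
    let meanX = xs.reduce(0, +) / n
    let meanY = ys.reduce(0, +) / n

    // Accumulate the covariance of x and y, and the variance of x.
    var covariance = 0.0
    var varianceX = 0.0
    for (x, y) in zip(xs, ys) {
        covariance += (x - meanX) * (y - meanY)
        varianceX += (x - meanX) * (x - meanX)
    }
    precondition(varianceX > 0, "x values must not all be equal")

    let slope = covariance / varianceX
    return (slope, meanY - slope * meanX)
}

/// "Inference": apply the learned pattern to a new input.
func predict(_ x: Double, with model: (slope: Double, intercept: Double)) -> Double {
    model.slope * x + model.intercept
}

// Hypothetical training data: apartment size (m²) -> monthly rent.
let model = fitLine(xs: [30, 50, 70, 90], ys: [600, 900, 1200, 1500])
print(predict(60, with: model))  // 1050.0
```

Once the pattern (here, slope 15 and intercept 150) has been learned, the training data is no longer needed to make predictions. The same is true of the pre-trained models discussed below.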

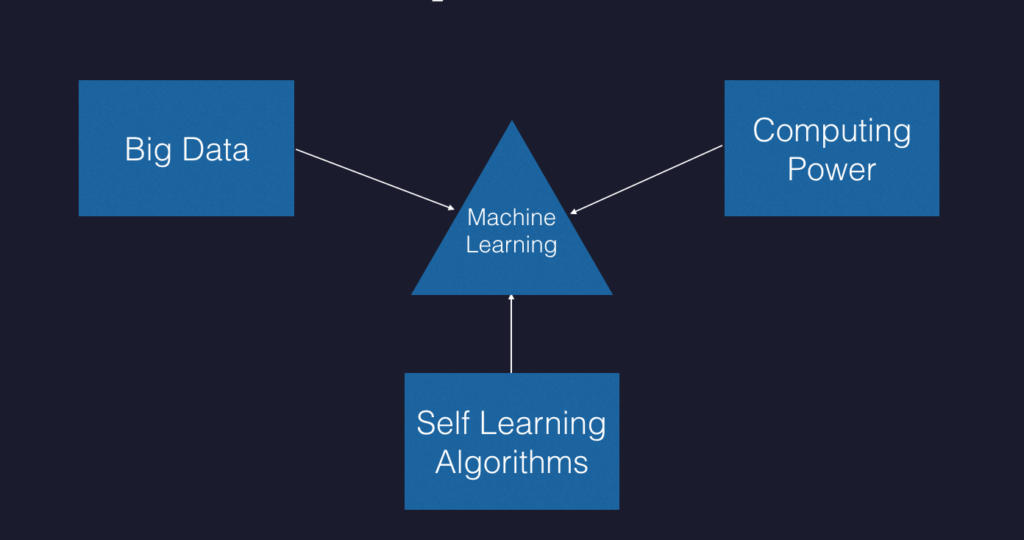

Why Now?

The first artificial neural network was designed in 1958, and artificial intelligence, the parent field of machine learning, has been around for more than 50 years. So why is there a surge of ML applications now?

Three things are simultaneously responsible for this: the availability of large volumes of data, unprecedented computing power, and the growing number and sophistication of the self-learning algorithms available today.

ML on mobile today

Let’s narrow our topic from ML in general to ML on mobile, since that’s what this article is about.

As of now, machine learning on mobile generally relies on pre-trained models that are typically trained in the cloud. Yes, since iOS 13 it is possible to train models on the device, but some kind of pre-trained model is still typically required to get started.

Two of the biggest reasons machine learning works so well on mobile:

- Privacy: Because all inference (i.e. prediction) is done locally, the data never leaves the device! For example, when classifying an image, the user can rest assured that the image they’re classifying (i.e. their data) is never sent to the cloud or an external server.

- Speed: Because no network API calls are involved, responses are faster and the experience is more seamless.

No need to be an ML expert

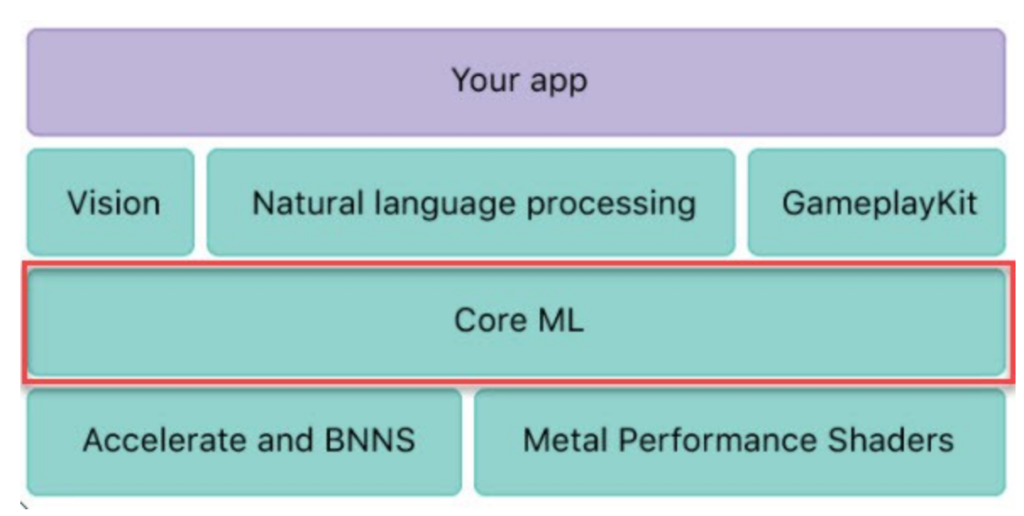

You don’t need to be an ML expert who understands all the complex neural network algorithms to develop ML-powered iOS apps using Core ML, Apple’s on-device ML framework (or Android apps using tools like ML Kit, for that matter).

I am definitely not an expert! Of course, if it interests you, you can absolutely dive deeper, and it will add value. For those of you who’d like to explore ML, there are some great references at the end of this post to help you get started.

But the point is, it’s optional. Apple has already done a lot of work for us, so let’s take advantage of this to build great apps!

Incorporating ML into your apps

So ML is easy? How do I incorporate it then?

When experimenting with on-device ML, there’s no need to come up with a new idea for a machine learning app. You can use machine learning in the app that you are already working on! Let’s see some examples.

The first example is Apple’s Photos app. Its core feature is to view pictures you have already taken from your camera or downloaded from elsewhere. But it doesn’t stop there! It categorizes your photos by using ML-based image classification so that you can search through your camera roll by category.

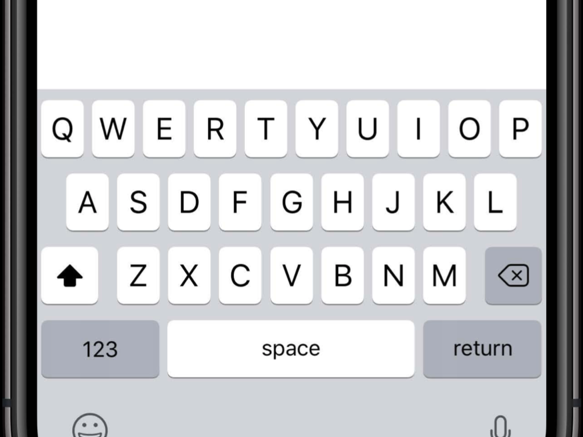

The second example is my absolute favorite: Apple’s default keyboard.

The keyboard optimizes each letter’s touch area based on how you type. That is why, when you type on a friend’s iPhone, you often don’t get it right on the first try: the keyboard is optimized for the phone’s primary user.

In my opinion this is the perfect example of how little ML-powered enhancements can significantly impact an app’s usability!

Implementation

All right, we have a couple of great ideas for incorporating machine learning. How do we proceed?

I’ve got 4 steps for you!

- Find a Core ML model

- Import the model

- Provide the input to the model and generate the predictions

- Handle the results

Let’s dive into each of the steps in detail!

1. Finding a Core ML model

You can select any open source model available online! (References below👇)

When looking for a model, there are 2 things to keep in mind:

- The model needs to be in the .mlmodel format (more on this in the next section).

- Understand the inputs and outputs of the model to judge whether it fits your needs.

For example, if you are working with images: does the model accept images as input? In what format? Is it easy to convert your data to that format? What output does your use case require, and is it easy to convert the model’s output to it?

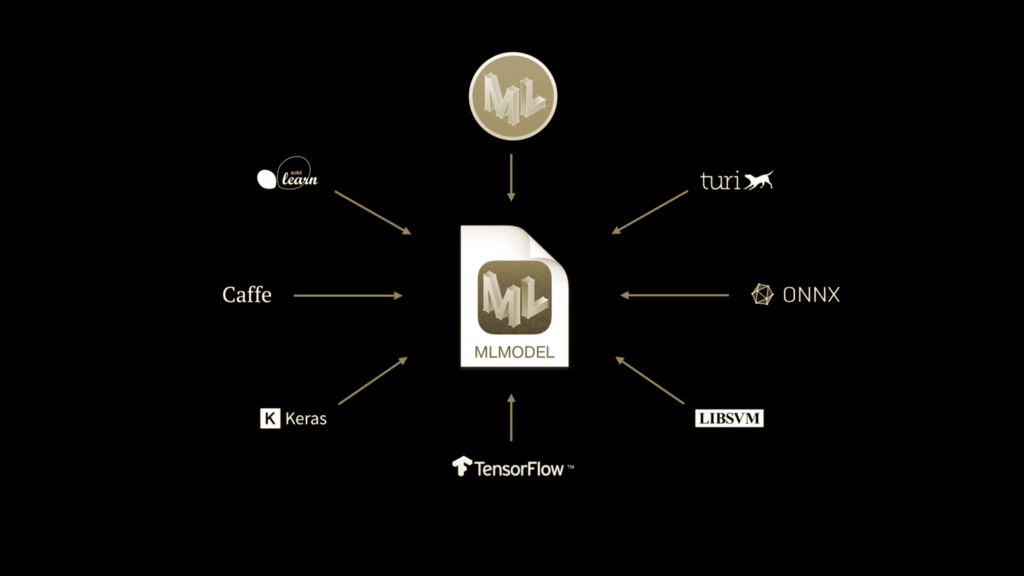

Core ML Tools

There are tons of amazing models available online, but many of them might not be in Core ML format!

To take advantage of them, Apple has provided coremltools!

coremltools is a set of Python utilities for converting models from other formats into the Core ML format. No, you don’t need to be a Python expert to use it; the repository documents its usage well.

2. Import the model

This is the easiest step!

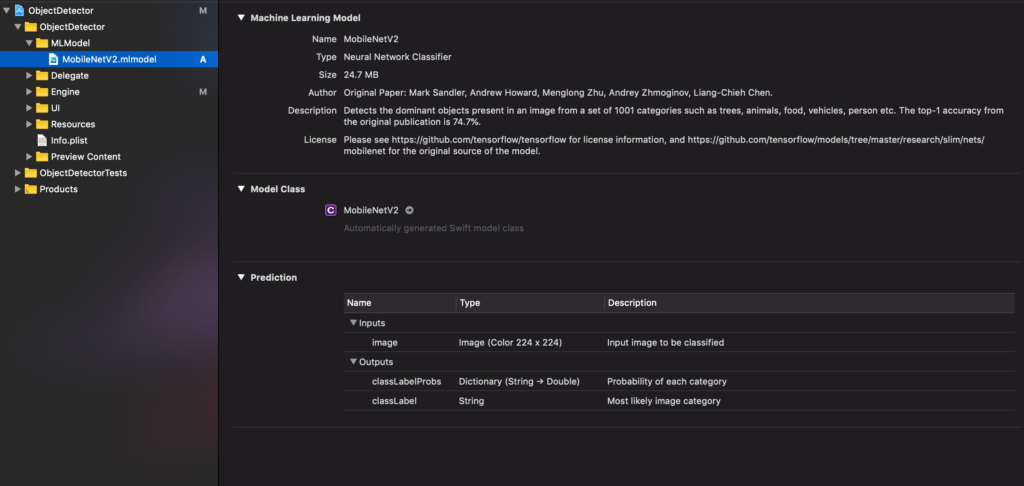

Once you find your Core ML model, download it, then drag and drop it into your Xcode project. When you click on the model, it should look something like this. Note the Model Class: we will use this auto-generated class in the next step.

3. Generate predictions using the model

This is a two-step process. I’m using Apple’s Vision framework along with Core ML to do this. More on the Vision framework later.

Initialize the model
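Here is a minimal sketch of this initialization. It assumes the model comes from the class Xcode generated for it. `MobileNetV2` is a hypothetical name, so use whatever class your model produced. The helper name `makeClassificationRequest` is also an assumption. `VNCoreMLModel`, `VNCoreMLRequest`, and `imageCropAndScaleOption` are real Vision APIs.

```swift
import CoreML
import Vision

/// Builds the Vision request that runs a Core ML classifier.
/// `mlModel` would normally come from the Xcode-generated class, for example
/// `try MobileNetV2(configuration: MLModelConfiguration()).model`
/// (`MobileNetV2` is a hypothetical model name).
func makeClassificationRequest(
    for mlModel: MLModel,
    completionHandler: @escaping VNRequestCompletionHandler
) throws -> VNCoreMLRequest {
    // Wrap the Core ML model so that Vision can drive it.
    let visionModel = try VNCoreMLModel(for: mlModel)

    // Create the classification request. It is not executed yet;
    // `completionHandler` runs once a request handler performs it.
    let request = VNCoreMLRequest(model: visionModel, completionHandler: completionHandler)

    // Let Vision scale and center-crop input images to the model's input size.
    request.imageCropAndScaleOption = .centerCrop
    return request
}
```

In an app you would typically create the request once and keep it in a property, passing your completion handler (for example `processMLResults`) as `completionHandler`. `.centerCrop` is only one of the `VNImageCropAndScaleOption` values. The right choice depends on how the model was trained.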

This is fairly standard code for initializing the model. Three things are happening here:

- We are creating a classification request (type: VNCoreMLRequest)

- We’re supplying the model imported in the previous step while creating this request.

- We’re also supplying a completion handler (named processMLResults), which is called when the request finishes executing.

Note that we are not executing the request yet. This is just the initialization part.

Run the Vision request

This is done once the user input is received. For example, when the user uploads an image:
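Here is a minimal sketch of running the request. It assumes the user's image is available as a `CGImage` (from a `UIImage`, that is its `cgImage` property) and that `request` is the classification request created in the previous step. The function name `performClassification` is hypothetical.

```swift
import CoreGraphics
import ImageIO
import Vision

/// Runs `request` (e.g. the classification request created earlier) on a single image.
func performClassification(_ request: VNRequest,
                           on image: CGImage,
                           orientation: CGImagePropertyOrientation = .up) {
    // 1. Wrap the user's image in a request handler.
    let handler = VNImageRequestHandler(cgImage: image, orientation: orientation, options: [:])
    do {
        // 2. Execute the request; its completion handler receives the results.
        try handler.perform([request])
    } catch {
        // 3. Vision or the model could not process this image.
        print("Failed to perform classification: \(error.localizedDescription)")
    }
}
```

`perform(_:)` runs synchronously, so in an app you would typically call this function from a background queue, for example inside `DispatchQueue.global(qos: .userInitiated).async { ... }`. If the photo is not upright, pass the matching `CGImagePropertyOrientation` so the model sees it the right way up.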

Again, pretty standard code. The only thing that would change here is the user input. Let’s go through this code:

- Assuming our ML model operates on images, we create a VNImageRequestHandler and supply our image to it.

- Execute the request created in the previous step (named: classificationRequest)

- Handle the error if the model fails to classify the image.

4. Handle the results

Last step! Once the request created in the previous step completes, it calls the completion handler (named processMLResults) that we supplied when initializing the model:
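Here is a minimal sketch of such a completion handler, following the checks described in the list below. The helper `topClassification(in:)` is a hypothetical addition that keeps the checks in one place. `VNClassificationObservation` and its `identifier` and `confidence` properties are real Vision APIs.

```swift
import Vision

/// Returns the highest-confidence classification, or nil when the request
/// produced no usable results.
func topClassification(in request: VNRequest) -> VNClassificationObservation? {
    // 1. No results at all: the request failed or was never performed.
    guard let results = request.results else { return nil }
    // 2. Empty results: the model ran but recognized nothing in the image.
    guard !results.isEmpty else { return nil }
    // 3. A classifier run through Vision yields VNClassificationObservation values,
    //    highest confidence first. Other request types return other observation types.
    return (results as? [VNClassificationObservation])?.first
}

/// Completion handler for the VNCoreMLRequest (matches VNRequestCompletionHandler).
func processMLResults(request: VNRequest, error: Error?) {
    if let error = error {
        print("Classification failed: \(error.localizedDescription)")
        return
    }
    guard let best = topClassification(in: request) else {
        print("Unable to classify image.")
        return
    }
    print("\(best.identifier): \(best.confidence)")
}
```

Vision calls the completion handler on the thread that performed the request. Dispatch to the main queue before updating any UI with these results.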

- We first check whether the request contains any results; this tells us whether the model was able to classify the image.

- Next, we check whether the results array is empty; this tells us whether the model recognized anything in the image.

- Because this was a classification request, the output should be an array of VNClassificationObservation objects. This step varies depending on the type of request used.

This array represents the multiple classifications the model produced for the image, sorted by confidence level, highest first.

In this case, we print the identifier and confidence of the first observation, which is the one with the highest confidence level.

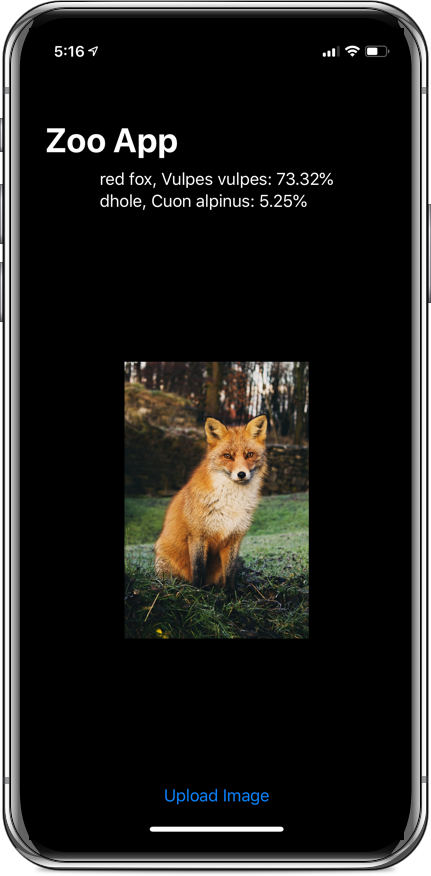

The example app below displays the top 2 classifications to the user along with their confidence levels. When displaying any prediction to the user, it’s generally good to apply a confidence threshold, for example only showing classifications with a confidence above x%.
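Here is a minimal, framework-free sketch of that display logic. `Classification` is a hypothetical stand-in for `VNClassificationObservation`, so the logic can be tested without a model. The default threshold of 0.3 and the limit of 2 are assumptions to tune for your app.

```swift
/// Hypothetical stand-in for Vision's VNClassificationObservation.
struct Classification {
    let identifier: String
    let confidence: Float   // 0.0 ... 1.0, as Vision reports it
}

/// Returns at most `limit` labels whose confidence meets `threshold`,
/// highest confidence first, formatted for display.
func displayableLabels(_ results: [Classification],
                       threshold: Float = 0.3,
                       limit: Int = 2) -> [String] {
    results
        .filter { $0.confidence >= threshold }          // drop low-confidence guesses
        .sorted { $0.confidence > $1.confidence }       // best first
        .prefix(limit)                                  // keep the top `limit`
        .map { "\($0.identifier) (\(Int(($0.confidence * 100).rounded()))%)" }
}
```

In the completion handler you could map each observation with `Classification(identifier: $0.identifier, confidence: $0.confidence)` before calling `displayableLabels`. Sorting again is redundant for Vision's already-sorted results, but it keeps the function correct for any input.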

You can find the app and the code for these steps in the demo app repository listed in the references below.

Core ML + Vision Framework

So why did we use Apple’s Vision framework in the above code?

Vision can be thought of as a pipeline into Core ML. It does the heavy lifting of getting images, for example from AVFoundation or UIKit, into the CVPixelBuffer format and size that Core ML expects.

For example, Core ML requires images in the CVPixelBuffer format. With Vision, you don’t need to worry about the right conversions. And as we saw in the “Handle the results” section, Vision gave us VNClassificationObservation objects, which made parsing the results very convenient. Vision simply makes Core ML easier to use!
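Here is roughly what converting a `CGImage` into a `CVPixelBuffer` by hand looks like. This is the kind of work Vision saves you. The sketch assumes the model wants a 32-bit BGRA image of a fixed size and simply stretches the image to fit. A real model may expect a different pixel format or size, so check its input description in Xcode. Vision also handles orientation and cropping for you. `makePixelBuffer` is a hypothetical name.

```swift
import CoreGraphics
import CoreVideo

/// Draws `image` into a newly allocated 32BGRA pixel buffer of the given size.
/// Returns nil if the buffer or drawing context cannot be created.
func makePixelBuffer(from image: CGImage, width: Int, height: Int) -> CVPixelBuffer? {
    // Ask for a buffer that Core Graphics can draw into directly.
    let attributes = [
        kCVPixelBufferCGImageCompatibilityKey as String: true,
        kCVPixelBufferCGBitmapContextCompatibilityKey as String: true
    ] as CFDictionary

    var buffer: CVPixelBuffer?
    let status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                     kCVPixelFormatType_32BGRA, attributes, &buffer)
    guard status == kCVReturnSuccess, let pixelBuffer = buffer else { return nil }

    // The base address is only valid while the buffer is locked.
    CVPixelBufferLockBaseAddress(pixelBuffer, [])
    defer { CVPixelBufferUnlockBaseAddress(pixelBuffer, []) }

    // BGRA in memory = little-endian 32-bit pixels with the (ignored) alpha byte first.
    let bitmapInfo = CGImageAlphaInfo.noneSkipFirst.rawValue | CGBitmapInfo.byteOrder32Little.rawValue
    guard let context = CGContext(data: CVPixelBufferGetBaseAddress(pixelBuffer),
                                  width: width,
                                  height: height,
                                  bitsPerComponent: 8,
                                  bytesPerRow: CVPixelBufferGetBytesPerRow(pixelBuffer),
                                  space: CGColorSpaceCreateDeviceRGB(),
                                  bitmapInfo: bitmapInfo) else { return nil }

    // Stretch the whole image to the target size (no cropping or aspect-ratio handling).
    context.draw(image, in: CGRect(x: 0, y: 0, width: width, height: height))
    return pixelBuffer
}
```

With Vision, all of this is replaced by handing the `CGImage` to a `VNImageRequestHandler`.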

The Vision framework also performs face and landmark detection, text detection, barcode recognition, and feature tracking. These features are provided out-of-the-box by Vision. Check out the excellent WWDC 2019 video on Vision if you want to know more about this.
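Here is a minimal sketch of one of these built-in capabilities, text recognition, which needs no Core ML model at all. It assumes iOS 13 or macOS 10.15 or later, where `VNRecognizeTextRequest` is available. `recognizeText(in:)` is a hypothetical helper name.

```swift
import CoreGraphics
import Vision

/// Returns the best transcription for each region of text Vision finds in `image`.
/// Requires iOS 13 / macOS 10.15 or later. No Core ML model is involved.
func recognizeText(in image: CGImage) throws -> [String] {
    let request = VNRecognizeTextRequest()
    // `.accurate` is usually more accurate than `.fast`, at the cost of speed.
    request.recognitionLevel = .accurate

    // perform(_:) is synchronous; in an app, call this off the main thread.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // Each observation is a region of text; keep its single most likely candidate.
    let observations = (request.results as? [VNRecognizedTextObservation]) ?? []
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

Other built-in requests such as `VNDetectFaceRectanglesRequest` and `VNDetectBarcodesRequest` follow the same pattern: create the request, perform it with a `VNImageRequestHandler`, and read the typed observations from `results`.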

Will your app be the next breakthrough?

To conclude this post:

With the latest machine learning frameworks and tools available, we can make our mobile apps smarter with very little effort.

ML and AI functionality in mobile apps will soon be the norm. And your customers will begin to expect and demand these solutions.

Think about what problems you want to solve with your app, and play around and have fun with ML to see if you can’t make it happen!

Who knows? You might identify a huge gap and disrupt an entire industry.

References:

Demo app repository

- https://github.com/pradnya-nikam/object-detector

Designing ML experiences

ML basics

- https://christophm.github.io/interpretable-ml-book/what-is-machine-learning.html

- https://medium.com/@dmennis/understand-core-ml-on-ios-in-5-minutes-bc8ba5411a2d

Models

- https://developer.apple.com/machine-learning/models

- https://github.com/juanmorillios/List-CoreML-Models

- https://github.com/SwiftBrain/awesome-CoreML-models

Converting other models to Core ML format

- https://developer.apple.com/documentation/coreml/converting_trained_models_to_core_ml

- https://github.com/apple/coremltools/

Vision Framework
