Most smartphones come with a variety of sensors: gyroscope, accelerometer, ambient light, proximity, and GPS, among others. Each sensor has its own function, ranging from detecting your phone’s orientation to determining your current position on the globe.

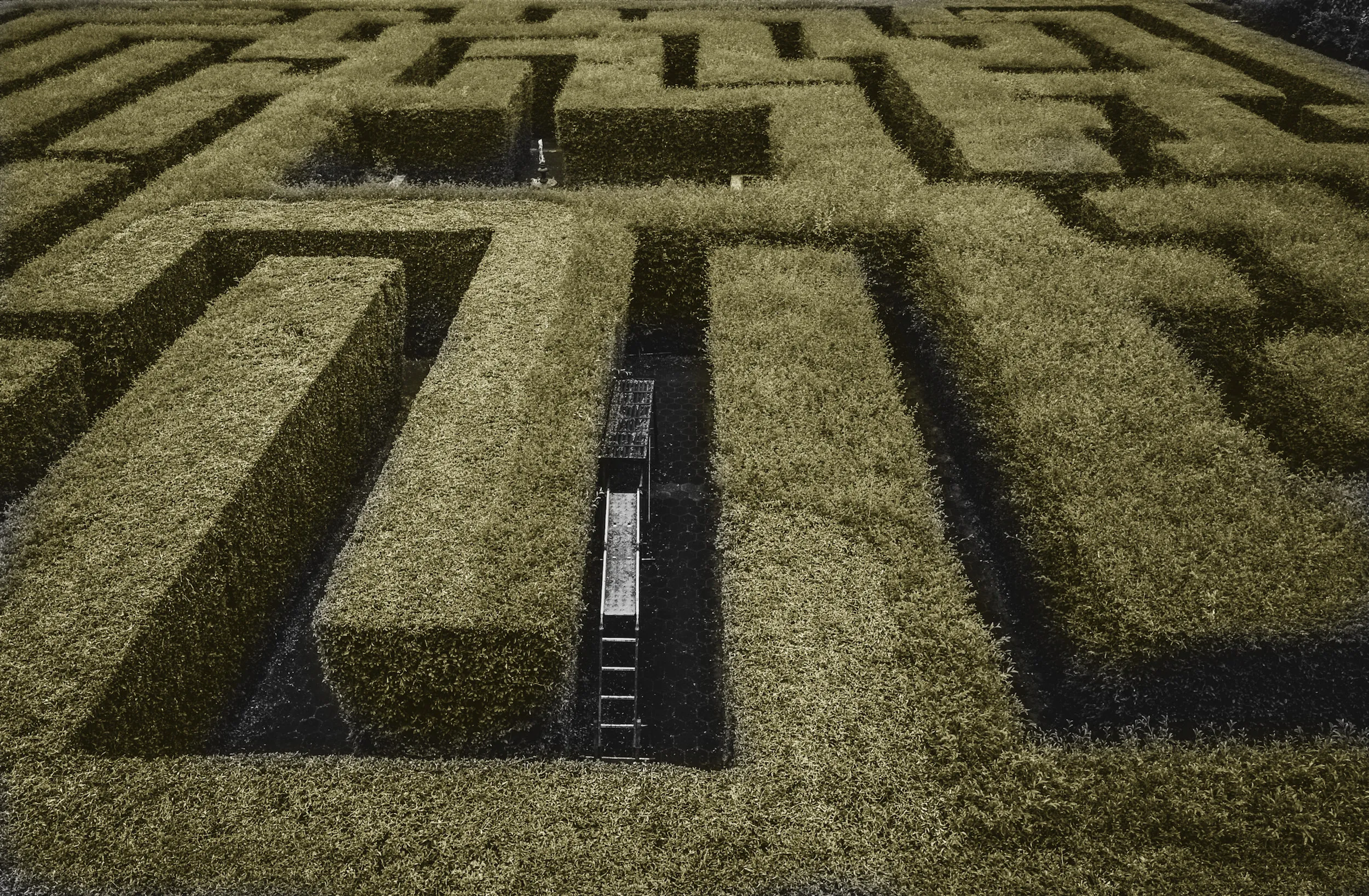

In this tutorial, we’ll take a look at how to use the phone’s accelerometer to move a ball through a maze. We’ll create the app with React Native, read the accelerometer data with React Native Sensors, and build the game itself with MatterJS and React Native Game Engine.

Continue reading “Creating an accelerometer-powered maze game in React Native”