Once you’ve trained a TensorFlow model and it’s ready to be deployed, you’d probably like to move it to a production environment. Luckily, TensorFlow provides a way to do this with minimal effort. In this article, we’ll use a pre-trained model, save it, and serve it using TensorFlow Serving. Let’s get moving!

TensorFlow ModelServer

TensorFlow Serving is a system built specifically to bring machine learning models into production. Its ModelServer (the tensorflow_model_server binary) serves models over both gRPC and a RESTful API. Before we can use it, though, we need to install it. First, let's add the TensorFlow Serving repository as an apt package source.

We can then install TensorFlow ModelServer by updating the package lists and installing the tensorflow-model-server package with apt-get.

Developing the Model

Next, let’s use a pre-trained model to create the model we’d like to serve. In this case, we’ll use a version of VGG16 with weights pre-trained on ImageNet. To make it work, we have to get a couple of imports out of the way:

- VGG16, the model architecture

- image, for loading image files

- preprocess_input, for pre-processing image inputs the way VGG16 expects

- decode_predictions, for turning the model's raw output into class names and probabilities
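To see what preprocess_input does for VGG16, it helps to know that Keras uses its "caffe" mode for this model: each pixel is reordered from RGB to BGR and the ImageNet per-channel mean is subtracted, with no rescaling. The plain-Python sketch below applies that to a single pixel. The helper name is hypothetical, and this is only an illustration; in practice you'd call preprocess_input on the whole image array.

```python
# ImageNet per-channel means in BGR order, as used by Keras's "caffe" preprocessing mode
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)


def caffe_preprocess_pixel(rgb):
    """Hypothetical helper: mimic VGG16's preprocess_input for one (R, G, B) pixel."""
    r, g, b = rgb
    bgr = (b, g, r)  # reorder channels RGB -> BGR
    # Zero-center each channel; there is no scaling, so values stay on the 0-255 scale
    return tuple(value - mean for value, mean in zip(bgr, IMAGENET_MEAN_BGR))


print(caffe_preprocess_pixel((255, 128, 0)))
```

Because the values are only shifted, not divided, the pre-processed image still spans roughly the original 0 to 255 range, centered near zero.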

Next, we define the model with the ImageNet weights.

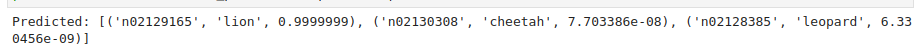

With the model in place, we can try out a sample prediction. We start by defining the path to an image file (a lion) and loading it with the image module.

After pre-processing the image, we can pass it to the model to make predictions. The model predicts that the image is a lion with a probability of about 99%.
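decode_predictions ranks each image's class probabilities and pairs the top ones with ImageNet class names. The sketch below shows just the ranking step in plain Python on a toy four-class output; the labels and probabilities are made up for illustration, and top_k is a hypothetical helper rather than part of Keras.

```python
def top_k(probabilities, labels, k=3):
    """Hypothetical helper: return the k most likely (label, probability) pairs."""
    # Sort class indices by probability, highest first
    ranked = sorted(range(len(probabilities)), key=lambda i: probabilities[i], reverse=True)
    return [(labels[i], probabilities[i]) for i in ranked[:k]]


# Toy 4-class output; VGG16 actually returns 1,000 ImageNet probabilities per image
labels = ["tabby", "lion", "tiger", "cheetah"]
probs = [0.002, 0.990, 0.005, 0.003]
print(top_k(probs, labels, k=2))
```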

Now that we have our model, we can save it to prepare it for serving with TensorFlow.

Saving the Model

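Let's now save that model. Notice that we're saving it to a folder named 1 to indicate the model version: TensorFlow Serving expects each version of a model to live in its own numbered subdirectory of the model's base path. This is critical, especially when you want to serve new model versions automatically. More on that shortly.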
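To make the numbered-folder convention concrete, here's a small standard-library sketch that picks the next version folder under a model's base path, so a retrained model could be saved alongside the existing ones. The helper name is hypothetical, and a temporary directory stands in for your real base path.

```python
import os
import tempfile


def next_version_dir(base_path):
    """Hypothetical helper: return base_path/<n+1>, where n is the highest numeric version."""
    versions = [
        int(name)
        for name in os.listdir(base_path)
        if name.isdigit() and os.path.isdir(os.path.join(base_path, name))
    ]
    return os.path.join(base_path, str(max(versions, default=0) + 1))


# Demo in a throwaway directory standing in for the model's base path
with tempfile.TemporaryDirectory() as base:
    print(next_version_dir(base))  # path ending in /1 on an empty base path
    os.makedirs(os.path.join(base, "1"))
    print(next_version_dir(base))  # path ending in /2 once version 1 exists
```

Non-numeric entries are ignored, mirroring the idea that only numbered subdirectories count as versions.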

Running the Server with TensorFlow ModelServer

Let’s start by defining the configuration we’ll use for serving:

- name is the name of our model; in this case, we'll call it vgg16.

- base_path is the absolute path to the directory that holds our saved model's numbered version folders. Be sure to change this to your own path.

- model_platform is tensorflow, since we're serving a TensorFlow model.

- model_version_policy specifies which versions of the model to serve. Here, the specific policy serves only version 1.

```
model_config_list: {
  config: {
    name: "vgg16",
    base_path: "/media/derrick/5EAD61BA2C09C31B/Notebooks/Python/serving/vgg16",
    model_platform: "tensorflow",
    model_version_policy {
      specific {
        versions: 1
      }
    }
  }
}
```

Now we can run the command that will serve the model from the command line:

- rest_api_port=8000 means that our REST API will be served on port 8000.

- model_config_file points to the config file we defined above.

- model_config_file_poll_wait_seconds sets how often, in seconds, the server checks the config file for changes; here it's every 300 seconds. For example, changing the version to 2 in the config file (with a folder named 2 under the base path) would cause version 2 of the model to be served automatically at the next check.
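Both the config file and the launch command can be produced from Python, which is handy if you bump versions from a script. A minimal sketch: the helper names and file paths are hypothetical placeholders, and the flags are the three described above. Passing the returned list to subprocess.run would start the server, assuming tensorflow_model_server is on your PATH.

```python
def render_model_config(name, base_path, versions):
    """Hypothetical helper: build a model_config_list in protobuf text format."""
    version_lines = "\n".join(f"        versions: {v}" for v in versions)
    return (
        "model_config_list {\n"
        "  config {\n"
        f'    name: "{name}"\n'
        f'    base_path: "{base_path}"\n'
        '    model_platform: "tensorflow"\n'
        "    model_version_policy {\n"
        "      specific {\n"
        f"{version_lines}\n"
        "      }\n"
        "    }\n"
        "  }\n"
        "}\n"
    )


def model_server_argv(config_path, rest_port=8000, poll_seconds=300):
    """Hypothetical helper: argument list for launching tensorflow_model_server."""
    return [
        "tensorflow_model_server",
        f"--rest_api_port={rest_port}",
        f"--model_config_file={config_path}",
        f"--model_config_file_poll_wait_seconds={poll_seconds}",
    ]


# Placeholder paths; substitute your own
print(render_model_config("vgg16", "/path/to/serving/vgg16", [1]))
print(" ".join(model_server_argv("/path/to/models.config")))
```

Serving two versions side by side is then just a matter of passing [1, 2] as versions and letting the poll interval pick up the rewritten file.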

Making Predictions using the REST API

At this point, the REST API for our model is available at http://localhost:8000/v1/models/vgg16/versions/1:predict.
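The endpoint follows a fixed pattern: /v1/models/ followed by the model name, optionally /versions/ plus a version number, then the :predict verb. A plain GET to /v1/models/ plus the model name (without :predict) returns the model's status, which is a quick way to confirm the server has loaded it. The sketch below builds both URLs; the helper names are hypothetical.

```python
def predict_url(host, port, model_name, version=None):
    """Hypothetical helper: build a TensorFlow Serving REST predict URL."""
    base = f"http://{host}:{port}/v1/models/{model_name}"
    if version is not None:
        base += f"/versions/{version}"  # pin a specific model version
    return base + ":predict"  # the :predict verb follows the model path


def status_url(host, port, model_name):
    """Hypothetical helper: GET this URL to check the model's load status."""
    return f"http://{host}:{port}/v1/models/{model_name}"


print(predict_url("localhost", 8000, "vgg16", version=1))
print(status_url("localhost", 8000, "vgg16"))
```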

We can use this endpoint to make predictions. To do that, we need to send JSON-formatted data to the endpoint. To that end (no pun intended), we'll use Python's json module, and we'll send the requests with the requests package.

Let’s start by importing those two.

Remember that the x variable contains the pre-processed image. We convert it to a nested list and wrap it in a JSON object under the instances key, the row format that TensorFlow Serving's predict API accepts. Because the body is JSON, we set the content type to application/json. We then send a POST request to our endpoint with the headers and the data. Once we get the predictions back, we decode them just like we did at the beginning of this article.

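```python
import json

import numpy as np
import requests
from tensorflow.keras.applications.vgg16 import decode_predictions

# x is the pre-processed image batch (a NumPy array) from earlier
data = json.dumps({"instances": x.tolist()})
headers = {"content-type": "application/json"}

json_response = requests.post('http://localhost:8000/v1/models/vgg16/versions/1:predict',
                              data=data,
                              headers=headers)
predictions = json.loads(json_response.text)

# Decode the raw class probabilities into the top 3 ImageNet labels
print('Predicted:', decode_predictions(np.array(predictions['predictions']), top=3)[0])
```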
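If you'd rather not depend on requests, a roughly equivalent request can be built with the standard library's urllib. This is a minimal sketch assuming the same endpoint: the helper names are hypothetical, and a tiny nested list stands in for x.tolist(). The actual network call is left commented out so the snippet runs without a server.

```python
import json
import urllib.request


def build_predict_request(url, instances):
    """Hypothetical helper: POST request carrying a row-format ("instances") payload."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(response_text):
    """Hypothetical helper: pull the per-instance outputs out of a predict response."""
    return json.loads(response_text)["predictions"]


# A 1x2x2x3 stand-in for the real pre-processed image batch
toy_batch = [[[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], [[7.0, 8.0, 9.0], [10.0, 11.0, 12.0]]]]
req = build_predict_request("http://localhost:8000/v1/models/vgg16/versions/1:predict", toy_batch)
# With the server running:
# with urllib.request.urlopen(req) as resp:
#     predictions = parse_predictions(resp.read().decode("utf-8"))
```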

Serving with Docker

There's an even quicker way to serve TensorFlow models: Docker. This is actually the recommended approach, but knowing the previous method is important in case a specific use case calls for it. Serving your model with Docker is as easy as pulling the TensorFlow Serving image and mounting your model.

With Docker installed, pull the TensorFlow Serving image by running docker pull tensorflow/serving.

Let’s now use that image to serve the model. This is done using docker run and passing a couple of arguments:

- -p 8501:8501 means that the container's port 8501, where TensorFlow Serving's REST API listens, will be accessible on our localhost at port 8501.

- --name names our container; choose any name you prefer. I've chosen tf_vgg_server in this case.

- --mount type=bind,source=/media/derrick/5EAD61BA2C09C31B/Notebooks/Python/serving/saved_tf_model,target=/models/vgg16 bind-mounts the saved model directory from the host to /models/vgg16 inside the Docker container.

- -e MODEL_NAME=vgg16 sets the MODEL_NAME environment variable, telling TensorFlow Serving to load the model called vgg16 from /models/vgg16.

- -t allocates a pseudo-terminal, and tensorflow/serving is the image we pulled earlier.

- & runs the command in the background.
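If you launch the container from a Python script rather than a shell, the flags above map directly onto an argument list. A minimal sketch: the helper is hypothetical and the paths are placeholders. subprocess.Popen(argv) would start the container without waiting for it to exit, which plays the role of the trailing &.

```python
def docker_serve_argv(model_dir, model_name, container_name, host_port=8501):
    """Hypothetical helper: docker run arguments mirroring the flags listed above."""
    return [
        "docker", "run",
        "-p", f"{host_port}:8501",  # host port -> container's REST port 8501
        "--name", container_name,
        "--mount", f"type=bind,source={model_dir},target=/models/{model_name}",
        "-e", f"MODEL_NAME={model_name}",  # the image serves /models/$MODEL_NAME
        "-t", "tensorflow/serving",  # -t allocates a TTY; last item is the image
    ]


print(" ".join(docker_serve_argv("/path/to/saved_tf_model", "vgg16", "tf_vgg_server")))
```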

Run the command below in your terminal.

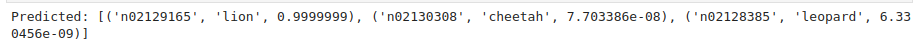

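Now we can use the REST API endpoint to make predictions, just like we did previously. Note that this URL doesn't include a version, so TensorFlow Serving uses the latest version it has loaded.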

```python
# Reuses json, np, requests, decode_predictions, data, and headers from the previous request
json_response = requests.post('http://127.0.0.1:8501/v1/models/vgg16:predict',
                              data=data,
                              headers=headers)
predictions = json.loads(json_response.text)

print('Predicted:', decode_predictions(np.array(predictions['predictions']), top=3)[0])
```

We get the same results as before. With that, we've seen how to serve a TensorFlow model both with and without Docker.

Final Thoughts

This article from TensorFlow will give you more information on the TensorFlow Serving architecture. If you’d like to dive deeper into that, this resource will get you there.

You can also explore alternative ways of building the standard TensorFlow ModelServer. In this article, we served the model on a CPU, but you can explore serving on GPUs as well.

This repo contains links to more tutorials on TensorFlow Serving. Hopefully, this piece was of service to you!
