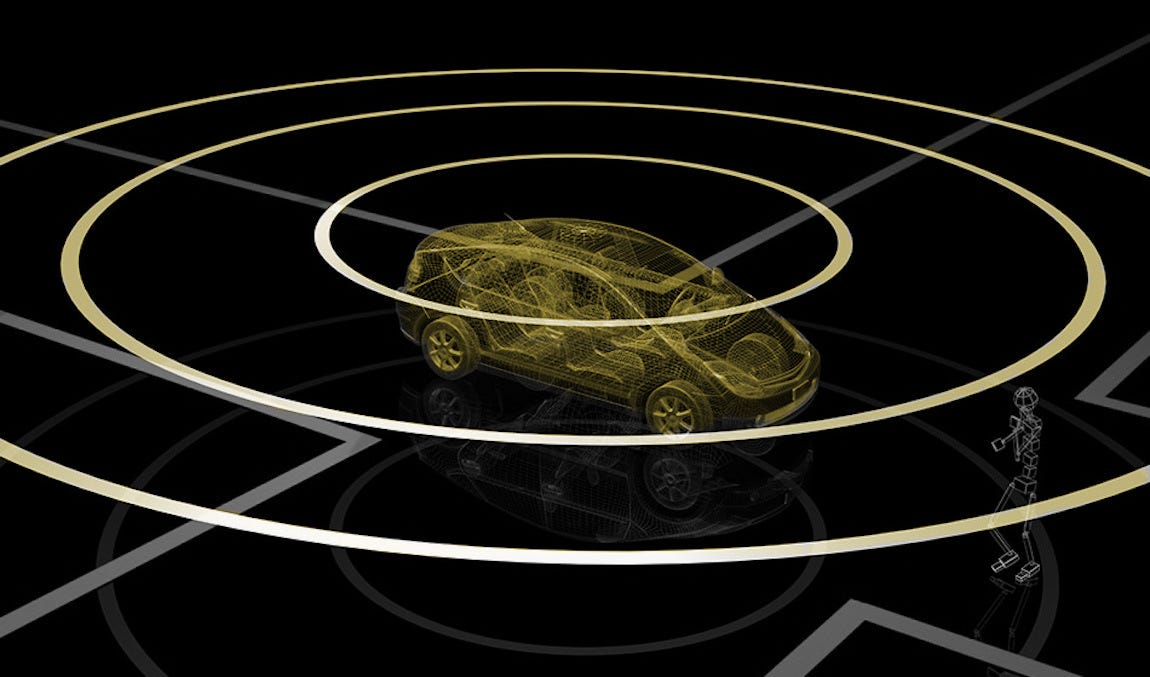

LiDAR is a sensor that is currently changing the world. It is integrated into self-driving vehicles, autonomous drones, robots, satellites, rockets, and more.

This sensor perceives the world in 3D by emitting laser beams and measuring the time each beam takes to bounce off an object and come back. The output is a point cloud: a set of 3D points, one per laser return.
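To make the time-of-flight idea concrete, here is a minimal sketch in Python. It assumes an idealized sensor that reports a round-trip time plus the beam's azimuth and elevation angles. The function names `tof_to_range` and `polar_to_point` are made up for illustration and do not come from any LiDAR SDK.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second (in vacuum)


def tof_to_range(round_trip_seconds):
    """Convert a round-trip time into a one-way distance in meters.

    The beam travels to the object and back, so we divide by 2.
    """
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def polar_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert a range and two beam angles into an (x, y, z) point.

    Assumed convention (hypothetical, real sensors differ):
    x points forward, y to the left, z up.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# A toy "scan": (round-trip time in seconds, azimuth, elevation)
returns = [
    (2.0e-7, 0.0, 0.0),
    (1.0e-7, math.radians(90), 0.0),
    (3.0e-7, math.radians(45), math.radians(-10)),
]

# The point cloud is simply the list of all converted returns.
point_cloud = [
    polar_to_point(tof_to_range(t), az, el) for t, az, el in returns
]
```

With these assumptions, a return that arrives after 200 nanoseconds corresponds to an object roughly 30 meters away. Real sensors also report intensity and timestamps and need calibration, which this sketch leaves out.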

It’s a technology that is harder to understand than the camera because fewer people have access to it. I had access to it, and today I will help you understand how LiDAR detection works for self-driving cars.