In this tutorial, we continue our series of demo apps built with the TensorFlow.js library. In the previous tutorial, we detected a specific hand gesture, a “thumbs-up” pose, using the handpose library.

This tutorial applies similar logic to detect the keypoints of a full face mesh using the facemesh model library.

Specifically, we’ll learn how to detect face poses using the webcam and then draw the facial landmarks that make up a face mesh, using path and triangular face data matrices. The idea is to create a React app with a webcam stream that feeds the video data to the model, allowing it to make keypoint predictions.

Then, we’re going to load the facemesh model from TensorFlow.js and estimate the keypoints from the video stream.

Lastly, we will draw these keypoints and triangles on an HTML canvas using the estimated data matrices.

What we will cover in this tutorial

- Creating a canvas to display the video stream from the webcam.

- Detecting a face mesh using a pre-trained facemesh model from TensorFlow.js.

- Detecting landmarks on faces in real-time using a webcam feed.

Let’s get started!

Creating a React App

First, we’re going to create a new React app. To do that, we run the following command in the desired local directory:

Once the project is set up, we can start it by running the following command:

After a successful build, a browser window opens showing the following result:

Installing the required packages

Next, we need to install the required dependencies into our project: the facemesh model, the core TensorFlow.js package (tfjs), and react-webcam. We can use either npm or yarn to install them by running the following commands in our project terminal:

- @tensorflow/tfjs: The core TensorFlow package based on JavaScript.

- @tensorflow-models/facemesh: This package delivers the pre-trained facemesh TensorFlow model.

- react-webcam: This library component enables us to access a webcam in our React project.

We need to import all the installed dependencies into our App.js file, as shown in the code snippet below:

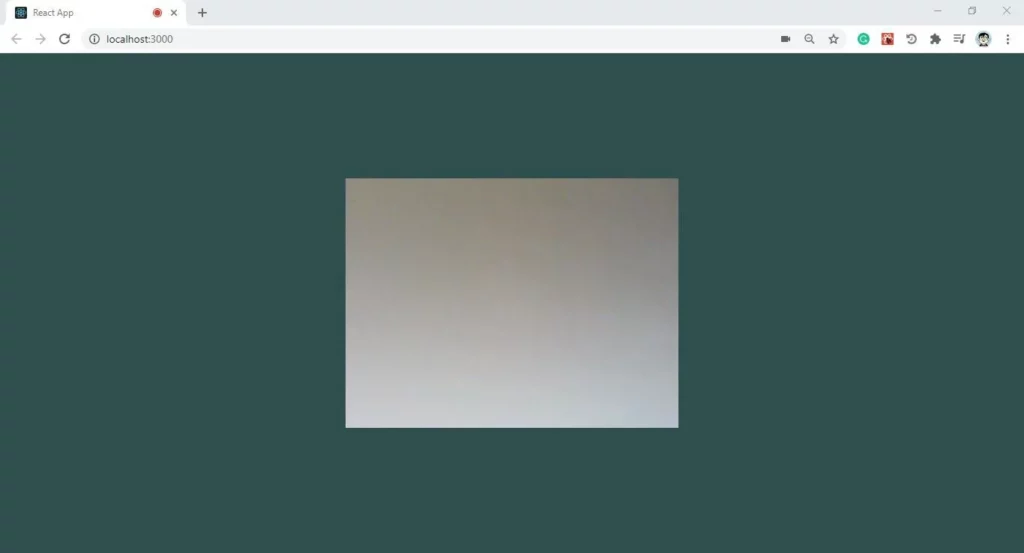

Set Up Webcam and Canvas

Here, we’re going to initialize the webcam and canvas to view the webcam stream in the browser display. For that, we are going to make use of the Webcam component that we installed and imported earlier. First, we need to create reference variables for the webcam as well as canvas using the useRef hook, as shown in the code snippet below:

Next, we need to initialize the Webcam component in our render method and pass it the webcam ref as a prop. This streams the webcam feed to the browser.

We also need to add the canvas element just below the Webcam component, passing it the canvas ref. The canvas lets us draw anything we want to display over the webcam feed. The implementation is provided in the snippet below:

Here, the styles applied for both the Webcam and canvas components are the same since we are going to draw the landmarks canvas on top of the webcam stream.
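As a rough sketch of what sharing one style between the two elements might look like, the object below positions both absolutely in the same box so that the canvas sits over the video. The name overlayStyle, the two props objects, and all of the values are illustrative assumptions, not the original snippet.

```javascript
// Hypothetical shared style; every value here is an assumption for illustration.
const overlayStyle = {
  position: "absolute", // take both elements out of normal flow so they stack
  marginLeft: "auto",
  marginRight: "auto",
  left: 0,
  right: 0,
  textAlign: "center",
  zIndex: 9,
  width: 720,
  height: 500,
};

// Props that could be spread onto the Webcam component and the canvas element.
const webcamProps = { style: overlayStyle };
const canvasProps = { style: overlayStyle };
```

Because both elements receive the same object, changing the size or position in one place keeps the drawing surface aligned with the video.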

We should now see the webcam stream in our browser window:

Loading facemesh model

In this step, we’re going to create a function called loadFacemesh, which initializes the facemesh model using the load method from the facemesh module. The overall code for this function is provided in the snippet below:

Here, the inputResolution option defines how large an image we grab from the webcam, and the scale option affects performance. Reducing the scale of the image results in better performance.
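A minimal sketch of such a loader is shown below. The option names inputResolution and scale come from the text above, but the numeric values are assumptions, and it is worth checking the facemesh documentation to confirm which options the installed version honors. The model module is passed in as a parameter (facemeshModule, a hypothetical name) so the sketch does not depend on a particular import.

```javascript
// Assumed option values; the original numbers are not shown in this tutorial.
const MODEL_OPTIONS = {
  inputResolution: { width: 720, height: 500 },
  scale: 0.8,
};

// Loads the model and returns the resulting network object.
// facemeshModule is expected to expose an async load(options) function.
async function loadFacemesh(facemeshModule, options = MODEL_OPTIONS) {
  const network = await facemeshModule.load(options);
  return network;
}
```

In the app, the argument would be the imported facemesh module, and the returned network is what later receives the video frames.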

Detecting Faces

Here, we’re going to create a function called detectFace, which will handle the face detection. Later on, we’re going to draw the landmark mesh around the detected face using the same function. First, we need to check whether the webcam is up and running and receiving a video stream, using the following code:
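A minimal sketch of that readiness check follows, assuming the react-webcam ref exposes the underlying video element as video. The helper name isWebcamReady is hypothetical.

```javascript
// readyState 4 is HTMLMediaElement.HAVE_ENOUGH_DATA: enough data is buffered to play.
const HAVE_ENOUGH_DATA = 4;

// Hypothetical helper; webcamReference is the ref created with useRef.
function isWebcamReady(webcamReference) {
  const webcam = webcamReference.current;
  return Boolean(
    webcam && webcam.video && webcam.video.readyState === HAVE_ENOUGH_DATA
  );
}
```

Guarding on the ref and the video element first avoids errors during the first renders, before the component has mounted.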

Then, we need to get the video properties along with dimensions using the webcamReference that we defined before:

Then, we need to set the height and width of the Webcam and canvas to be the same based on the dimensions of the video stream, using the code from the following snippet:
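The two steps above, reading the video dimensions and applying them to both elements, can be sketched as follows. The helper name syncDimensions is an assumption; videoWidth and videoHeight are the standard properties of a video element.

```javascript
// Hypothetical helper that copies the stream's intrinsic size to the video and canvas.
function syncDimensions(webcamReference, canvasReference) {
  const video = webcamReference.current.video;
  const { videoWidth, videoHeight } = video;

  // Size the video element to match the stream.
  video.width = videoWidth;
  video.height = videoHeight;

  // Size the canvas identically so drawn coordinates line up with the video.
  canvasReference.current.width = videoWidth;
  canvasReference.current.height = videoHeight;

  return { videoWidth, videoHeight };
}
```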

Then, we start estimating the face using the estimateFaces method provided by the network object, which the function receives as a parameter. The estimateFaces method takes the current video frame as its input:

The complete function is provided in the code snippet below:
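One way the complete function could look is sketched below. It is not the original snippet: passing the refs in as parameters and returning an empty array when the webcam is not ready are assumptions made to keep the example self-contained.

```javascript
// Sketch of detectFace; network is the loaded facemesh model.
async function detectFace(network, webcamReference, canvasReference) {
  const webcam = webcamReference.current;

  // Bail out until the webcam has enough data (readyState 4).
  if (!webcam || !webcam.video || webcam.video.readyState !== 4) {
    return [];
  }

  // Read the stream dimensions and apply them to the video and canvas.
  const video = webcam.video;
  const { videoWidth, videoHeight } = video;
  video.width = videoWidth;
  video.height = videoHeight;
  canvasReference.current.width = videoWidth;
  canvasReference.current.height = videoHeight;

  // Ask the model for face predictions on the current frame.
  const faceEstimate = await network.estimateFaces(video);
  console.log(faceEstimate);
  return faceEstimate;
}
```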

Now, we need to call this detectFace function inside the loadFacemesh function, wrapped in a setInterval call. This runs detectFace every 10 milliseconds:
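The scheduling itself can be sketched as below. The helper name startDetectionLoop and its injectable schedule parameter are assumptions added for illustration; in the app, detect would be a function that calls detectFace with the loaded network.

```javascript
// Hypothetical helper: calls detect repeatedly and returns the timer ID.
// schedule defaults to the global setInterval.
function startDetectionLoop(detect, intervalMs = 10, schedule = setInterval) {
  return schedule(() => {
    detect();
  }, intervalMs);
}
```

Keeping the returned ID makes it possible to stop the loop later with clearInterval. Note that if a single detection takes longer than the interval, calls can overlap, so a larger interval may be worth trying on slower devices.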

Lastly, we need to call the loadFacemesh function in the main function body, as shown in the code snippet below:

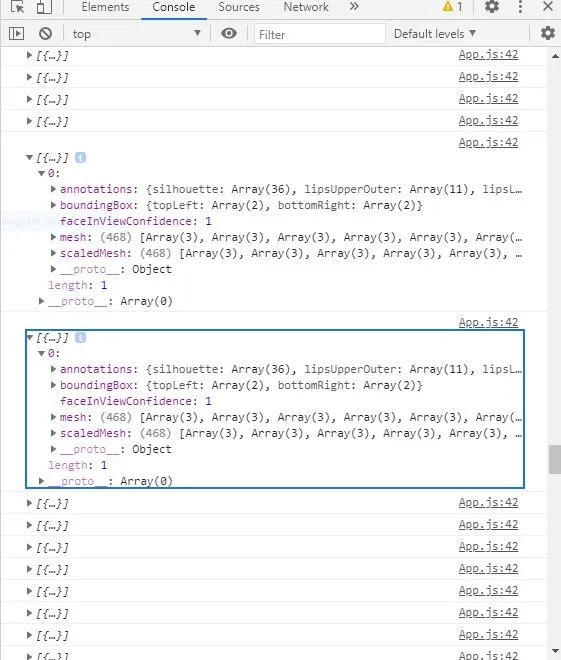

Now, if we run the project, our face detection data from the webcam will get logged in the browser console:

Here, we get the mesh data as well, which we’re going to use to define the matrices for drawing face landmarks.

Implementing Code to Draw the Mesh

Next, we are going to implement the functions that draw paths and the mesh around faces on the canvas over the webcam feed. For that, we need to create a separate file called meshUtilities.js inside the ./src folder of the project. Inside the file, we need to define the triangulation matrices, which we can get from the detected face data shown in the screenshot above.

Part of the matrix definition is provided in the code snippet below:
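The full triangulation data is too long to reproduce here. As a sketch of how such data can be read, the example below assumes it is stored as a flat array of keypoint indices in which each consecutive group of three forms one triangle. The sample values and the helper name toTriangles are made up for illustration.

```javascript
// Made-up sample: two triangles over four keypoints (not real facemesh data).
const SAMPLE_TRIANGULATION = [0, 1, 2, 1, 2, 3];

// Groups a flat index array into [a, b, c] triples, ignoring any incomplete tail.
function toTriangles(indices) {
  const triangles = [];
  for (let i = 0; i + 2 < indices.length; i += 3) {
    triangles.push([indices[i], indices[i + 1], indices[i + 2]]);
  }
  return triangles;
}

const sampleTriangles = toTriangles(SAMPLE_TRIANGULATION);
// sampleTriangles is [[0, 1, 2], [1, 2, 3]]
```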

Now, we can devise a function to draw the path. The overall implementation of the function is provided in the code snippet below:

This function draws the paths of the triangular mesh. It strokes a line from one coordinate point to the next and can optionally close the path. It is called when drawing the complete mesh.
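A minimal sketch of such a path-drawing function, written against the standard canvas 2D context methods, might look like this. The parameter order and the stroke color are assumptions.

```javascript
// Strokes a polyline through points (an array of [x, y, ...] arrays) on a 2D context.
// When closePath is true, the last point is joined back to the first.
function drawPath(ctx, points, closePath) {
  if (points.length === 0) {
    return;
  }
  ctx.beginPath();
  ctx.moveTo(points[0][0], points[0][1]);
  for (let i = 1; i < points.length; i++) {
    ctx.lineTo(points[i][0], points[i][1]);
  }
  if (closePath) {
    ctx.closePath();
  }
  ctx.strokeStyle = "grey"; // assumed color
  ctx.stroke();
}
```

Passing three points with closePath set to true draws one complete triangle.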

The function to draw the complete mesh around the detected face is provided below:

This drawMesh function uses the triangulation matrix data to draw the triangular mesh, calling the drawPath function to join the coordinate points of each triangle. It also uses JavaScript’s Math object to render dots where the lines meet. The drawMesh function is exported from the file.
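The sketch below shows one way the mesh drawing could be put together. It assumes each prediction exposes its keypoints as scaledMesh (an array of [x, y, z] points) and that the triangulation is a flat array of keypoint indices. The parameter order, the colors, and passing the triangulation in as an argument are assumptions made to keep the example self-contained.

```javascript
// Strokes a closed or open polyline through the given points.
function drawPath(ctx, points, closePath) {
  ctx.beginPath();
  ctx.moveTo(points[0][0], points[0][1]);
  for (let i = 1; i < points.length; i++) {
    ctx.lineTo(points[i][0], points[i][1]);
  }
  if (closePath) {
    ctx.closePath();
  }
  ctx.strokeStyle = "grey"; // assumed color
  ctx.stroke();
}

// Draws every triangle and a dot at every keypoint for each detected face.
function drawMesh(predictions, ctx, triangulation) {
  predictions.forEach((prediction) => {
    const keypoints = prediction.scaledMesh;

    // Each group of three indices names the corners of one triangle.
    for (let i = 0; i + 2 < triangulation.length; i += 3) {
      const corners = [
        triangulation[i],
        triangulation[i + 1],
        triangulation[i + 2],
      ].map((index) => keypoints[index]);
      drawPath(ctx, corners, true);
    }

    // Draw a small filled circle at each keypoint.
    for (const [x, y] of keypoints) {
      ctx.beginPath();
      ctx.arc(x, y, 1, 0, 2 * Math.PI);
      ctx.fillStyle = "aqua"; // assumed color
      ctx.fill();
    }
  });
}
```

In the app, ctx would come from the canvas element's getContext("2d") call, and the predictions would be the array returned by estimateFaces.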

Hence, we need to import it in our App.js file:

Now, we need to call the drawMesh method inside the detectFace function by passing the canvasReference context and faceEstimate data:

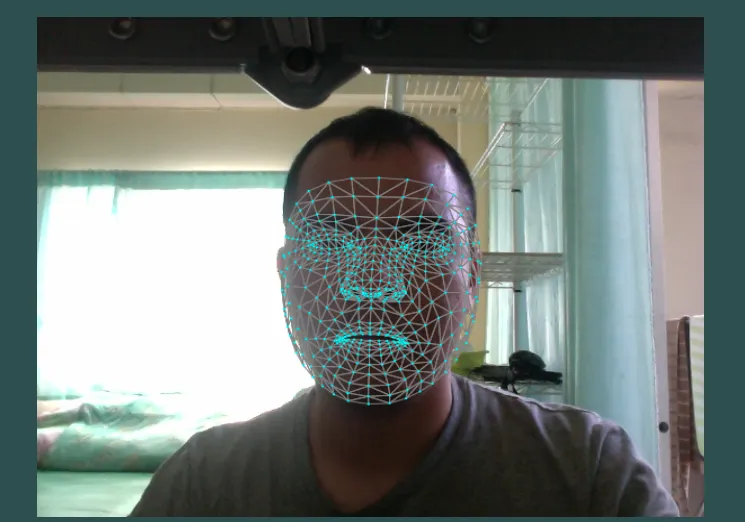

The final result is demonstrated in the screenshot below:

We can see the triangular mesh forming around the detected face. The dots in the mesh are joined by the path lines.

Hence, we have successfully implemented real-time face landmark estimation using a TensorFlow.js model and a webcam feed in our React project.

Conclusion

In this tutorial, we learned how to use the facemesh TensorFlow.js model and the react-webcam library to detect human faces and draw a landmark mesh around them in real time. The availability of a pre-trained TensorFlow.js model simplified the overall implementation.

Face landmarks produced by face mesh models are used in apps that make extensive use of camera filters, like Snapchat. The same technology is a foundational component when building such filters.

For a demo of the entire project, you can check out the following CodeSandbox.
