Machine learning is being integrated into mobile applications more and more today. It can help optimize overall performance and add significant functionality to an app’s experience. Over time, ML models can also learn and improve from user interactions, making these experiences more intuitive and intelligent.

Here, we’re going to continue working with this technology in the Flutter ecosystem. Check out my previous post on ML in Flutter for more:

This tutorial aims to explore using the TensorFlow machine learning library in a Flutter project to perform image classification. Here, we’ll be classifying images of emojis.

To do this, we’ll either need to work with a pre-trained model or train our own using a tool like Teachable Machine, a no-code service provided by Google. The TensorFlow Lite library will help us load the model and apply it within our app.

The idea is to integrate the TensorFlow Lite plugin and use it to classify the emoji image by the emotion it expresses. We’ll also need to fetch the image from the gallery, for which we’ll use the Image Picker library. The TensorFlow Lite model and library make all of this simpler, as we’ll see in this tutorial.

So, let’s get started!

Create a new Flutter project

First, we need to create a new Flutter project. For that, make sure that the Flutter SDK and the other requirements for Flutter app development are properly installed. If everything is set up, we can create a project by running the following command in the desired local directory:

After the project has been set up, we can navigate into the project directory and execute the following command in the terminal to run the project on either an available emulator or a physical device:

After a successful build, we will get the following result on the emulator screen:

Creating the Main Screen

Here, we’re going to implement a UI that allows users to fetch an image from the device library and display it on the app screen. To fetch the image from the gallery, we’re going to use the Image Picker library, which lets us pick images and videos from the device camera, gallery, and other sources.

But first, we’re going to create a basic main screen. In the main.dart file, remove the default code and use the code from the snippet below:

Here, we’ve created a new widget class, EmojiClassifier, and assigned it to the home property of the MaterialApp widget in the MyApp class. For simplicity, the EmojiClassifier screen uses a Scaffold widget with an AppBar and an empty Container as its body.

Hence, we will get the result as shown in the screenshot below:

Creating the UI for Image View

Next, we are going to place an Image View section on the screen, which will have an image display container and a button to select the image from the gallery.

To select the image from the device gallery, we are going to use the image_picker library. To install it, we need to add the dependency shown in the following code snippet to the pubspec.yaml file of our project:
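A minimal sketch of that dependency entry. The `any` constraint is a placeholder; in practice you would pin a version from pub.dev, and running `flutter pub add image_picker` writes one for you:

```yaml
dependencies:
  flutter:
    sdk: flutter
  # Placeholder constraint: replace "any" with a pinned version from pub.dev.
  image_picker: any
```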

Now, we need to import the necessary packages in the main.dart file of our project:

In the main.dart file, we need to declare a File variable that stores the image selected from the device’s gallery:

Now, we’re going to code the UI, which will enable users to pick and display the emoji image. The UI will have an image view section and a button that allows users to pick the image from the gallery. The overall UI template is provided in the code snippet below:

Hence, we will get the result as shown in the screenshot below:

Fetch and Display the Emoji Image

In this step, we’re going to code a function that enables us to access the gallery, pick an image, and then display the image in the Image View section on the screen. The overall implementation of the function is provided in the code snippet below:

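Here, we have created an ImagePicker instance in the picker variable and used its getImage method to fetch an image from the gallery into the image variable. In newer releases of image_picker, getImage is deprecated in favor of pickImage, which returns an XFile instead of a PickedFile.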

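Then, we use the setState method to set the _imageFile state to the fetched image. This causes the build method to run again and show the image on the screen. Note that getImage returns null if the user closes the gallery without picking an image, so it’s worth checking for null before updating the state.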

Now, we need to call the selectImage function in the onPressed property of the FloatingActionButton widget, as shown in the code snippet below:

Hence, we can now fetch the image from our gallery and display it on the screen:

Performing Emoji Identification with TensorFlow

Now that we have the UI set up, it’s time to configure our emoji image classification model. Here, we are going to classify the image of a particular emoji with the model and identify which emoji it is. For that, we are going to use a pre-trained emoji classifier model that was trained with Google’s Teachable Machine.

We have provided the trained model files ourselves, and you can download them from this link. You can also try training your own model with Teachable Machine. The linked model was trained on images of various emojis along with their label tags, so for testing purposes we are going to use it to identify the emotion an emoji expresses.

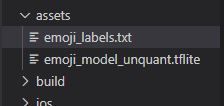

Once downloaded, we will get two files:

- emoji_model_unquant.tflite

- emoji_labels.txt

The included labels file can only distinguish between three emoji emotions, but you could certainly build a model that classifies more. I’ve done it this way for quick testing purposes.
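Teachable Machine’s TensorFlow Lite export typically writes each label on its own line, prefixed with its class index (for example, `0 Happy`). Those prefixes then show up in the label strings the plugin returns. Assuming emoji_labels.txt keeps that format, a small pure-Dart helper can strip the prefix for display and confirm that the file declares the expected three classes. The names `cleanLabel` and `parseLabels` are hypothetical:

```dart
/// Matches a leading class index such as "0 " or "12 " (hypothetical helper).
final RegExp _indexPrefix = RegExp(r'^\d+\s+');

/// Removes a Teachable Machine-style index prefix: "0 Happy" -> "Happy".
/// Labels without a prefix are returned trimmed but otherwise unchanged.
String cleanLabel(String rawLabel) =>
    rawLabel.trim().replaceFirst(_indexPrefix, '');

/// Parses the contents of a labels file into display names, skipping
/// blank lines (for example, the empty string after a trailing newline).
List<String> parseLabels(String fileContents) => fileContents
    .split('\n')
    .map(cleanLabel)
    .where((label) => label.isNotEmpty)
    .toList();
```

In the app, the file contents could come from `rootBundle.loadString('assets/emoji_labels.txt')` (from `package:flutter/services.dart`), assuming the asset path used in this tutorial.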

We need to move the two files provided to the ./assets folder in the main project directory:

Then, we need to register the asset files in pubspec.yaml so that the app can access them:
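A minimal sketch of the assets section, assuming both files sit directly inside assets/ with the names listed above. Listing the whole directory as `- assets/` also works:

```yaml
flutter:
  # Bundle the model and labels with the app so they can be loaded at runtime.
  assets:
    - assets/emoji_model_unquant.tflite
    - assets/emoji_labels.txt
```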

Installing TensorFlow Lite

Next, we need to install the tflite package, a Flutter plugin for accessing the TensorFlow Lite API. This library supports image classification, object detection, Pix2Pix, Deeplab, and PoseNet on both iOS and Android.

To install the plugin, we need to add the following line to the pubspec.yaml file of our project:
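A minimal sketch, assuming the plugin is the tflite package from pub.dev (which provides the Tflite class used later). The `any` constraint is a placeholder that you would replace with a pinned version:

```yaml
dependencies:
  # Placeholder constraint: replace "any" with a pinned version from pub.dev.
  tflite: any
```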

For Android, we need to add the following setting to the android object of the ./android/app/build.gradle file:
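The tflite plugin’s setup instructions ask for an aaptOptions block that keeps model files uncompressed in the APK, because the plugin memory-maps the model file when loading it. Here is a sketch of that addition, with the rest of the android block elided:

```groovy
android {
    // ...existing configuration...

    // Keep model files uncompressed so they can be memory-mapped at load time.
    aaptOptions {
        noCompress 'tflite'
        noCompress 'lite'
    }
}
```

Recent Android Gradle Plugin versions may report aaptOptions as deprecated in favor of androidResources, so check which form your project’s plugin version expects.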

At this point, we should check that the app builds properly by running the flutter run command.

If an error occurs, we may need to increase the minimum SDK version to ≥19 in the ./android/app/build.gradle file.

Once the app builds properly, we’re ready to implement our TFLite model.

Implementing TensorFlow Lite for Emoji Classification

First, we need to import the package into our main.dart file, as shown in the code snippet below:

Loading the Model

Now, we need to load the model files into the app. For that, we’re going to write a function called loadEmojiModel. Inside it, we call the loadModel method of the Tflite class to load the model files from the assets folder, setting its model and labels parameters to the paths of the two files, as shown in the code snippet below:

Next, we need to call the function inside the initState method so that the model loads as soon as we enter the screen:

Perform Emoji Classification

Now, we are going to write code to actually perform emoji classification. First, we need to initialize a variable to store the result of classification, as shown in the code snippet below:

The _identifiedResult variable, of type List, will store the results of the classification.
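The tflite plugin documents its classification output as a list of maps (or null), each with `index`, `label`, and `confidence` keys. As a result, _identifiedResult ends up as an untyped List. One option is to convert it into typed objects before rendering. The `EmojiPrediction` class and `toPredictions` function below are a hypothetical sketch that assumes that map shape:

```dart
/// A typed view of one classification result (hypothetical helper class).
class EmojiPrediction {
  final int index; // position of the class in the labels file
  final String label; // label text as read from the labels file
  final double confidence; // model score between 0.0 and 1.0

  const EmojiPrediction(this.index, this.label, this.confidence);

  /// Builds a prediction from one map in the plugin's output,
  /// assuming the keys "index", "label" and "confidence".
  factory EmojiPrediction.fromMap(Map<dynamic, dynamic> map) => EmojiPrediction(
        (map['index'] as num).toInt(),
        map['label'] as String,
        (map['confidence'] as num).toDouble(),
      );
}

/// Converts the raw (possibly null) result list into typed predictions,
/// ignoring any entries that are not maps.
List<EmojiPrediction> toPredictions(List<dynamic>? raw) => (raw ?? const [])
    .whereType<Map<dynamic, dynamic>>()
    .map(EmojiPrediction.fromMap)
    .toList();
```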

Next, we need to define a function called identifyImage that takes an image file as a parameter. The overall implementation of the function is provided in the code snippet below:

Here, we have used the runModelOnImage method of the Tflite class to classify the selected image. As parameters, we have passed the image path, the result quantity, the classification threshold, and other optional configuration for better classification. The plugin names these path, numResults, and threshold, and its optional settings include imageMean, imageStd, and asynch. After a successful classification, we store the result in the _identifiedResult list.
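To build intuition for the plugin’s numResults and threshold parameters (the result quantity and classification threshold mentioned above), the hypothetical `topResults` function below mimics their effect on a plain list of scores. It drops results below the threshold, sorts the rest by confidence, and keeps at most numResults of them. This is a simplified illustration, not the plugin’s actual implementation, and the `>=` comparison is an assumption:

```dart
/// Hypothetical helper: keeps results with confidence >= [threshold] and
/// returns at most [numResults] of them, sorted by confidence (highest first).
List<Map<String, Object>> topResults(
  List<Map<String, Object>> results, {
  required int numResults,
  required double threshold,
}) {
  final kept = results
      .where((r) => (r['confidence'] as num) >= threshold)
      .toList()
    ..sort((a, b) =>
        (b['confidence'] as num).compareTo(a['confidence'] as num));
  return kept.take(numResults).toList();
}
```

For example, take scores of Happy 0.15, Sad 0.70, and Angry 0.15. With numResults: 2 and threshold: 0.5, only Sad is left. Lowering the threshold to 0.0 returns Sad plus one of the two tied 0.15 results.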

Now, we need to call the function inside the selectImage function and pass the image file as a parameter as shown in the code snippet below:

This will allow us to set the emoji image to the image view as well as classify the emoji image as soon as we select it from the gallery.

Now, we need to update the UI template to display the results of the classification. We are going to show them as a list of cards just below the FloatingActionButton widget.

The implementation of the overall UI of the screen is provided in the code snippet below:

Here, just below the FloatingActionButton widget, we have placed a SingleChildScrollView widget so that its content is scrollable. Inside it, a Column widget lays out its children vertically, and each classification result is displayed inside a Card widget.

Hence, we will get the result as shown in the demo below:

We can see that as soon as we select an image from the gallery, the classified emoji emotion is displayed on the screen as well. Likewise, you can retrain the model on more emoji classes, update the labels file to match, and test it with different emoji images. Adding lines to the labels file alone won’t teach the model new classes, because the labels must line up with the model’s outputs. We can even display the classification confidence as a percentage (i.e. the model’s confidence in its prediction), but for this kind of analysis, the model needs to be trained on many more emoji images.
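Because the plugin reports confidence as a score between 0 and 1, showing it as a percentage is mostly a formatting step. Here is a minimal sketch with a hypothetical `formatPrediction` helper, which a result card’s Text widget could use:

```dart
/// Hypothetical helper: formats a label and a 0.0-1.0 confidence score
/// as text such as "Happy (87.5%)".
String formatPrediction(String label, double confidence, {int decimals = 1}) {
  // Defensively clamp to [0, 1] in case of float overshoot, then scale to percent.
  final percent = (confidence.clamp(0.0, 1.0) * 100).toStringAsFixed(decimals);
  return '$label ($percent%)';
}
```

For example, `formatPrediction('Happy', 0.875)` returns `Happy (87.5%)`.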

And that’s it! We have successfully implemented a prototype Emoji Classifier in a Flutter app using TensorFlow Lite.

Conclusion

In this tutorial, we learned how to perform emoji image classification to recognize three different emojis and classify them based on the emotion they’re expressing.

The model files were made available for this tutorial, but you can create your own trained models using services like Google’s Teachable Machine. The TensorFlow Lite plugin made loading the model and classifying images simple through methods such as loadModel and runModelOnImage.

Now the challenge is to train your own emoji classification model using your preferred emojis and apply it to the existing project. TensorFlow Lite can also be used to implement other complex ML tasks in mobile applications, such as object detection, pose estimation, gesture detection, and more.

The complete code is available on GitHub.
