There are now a bunch of off-the-shelf tools for training artistic style transfer models and thousands of open source implementations. Most use a variation of the network architecture described by Johnson et al to perform fast, feed-forward stylization.

As a result, the majority of the style transfer models you find are the same size: 7MB. That’s not an unreasonably large asset to add to your application, but it’s also not insignificant.

Research suggests that neural networks are often way larger than they need to be—that many of the millions of weights they contain are insignificant and needlessly precise. So I wondered: What’s the smallest model I can create that still reliably performs style transfer?

The answer: A 17KB neural network with just 11,686 trained weights.

Jump right to the code: Fritz Style Transfer GitHub repo.

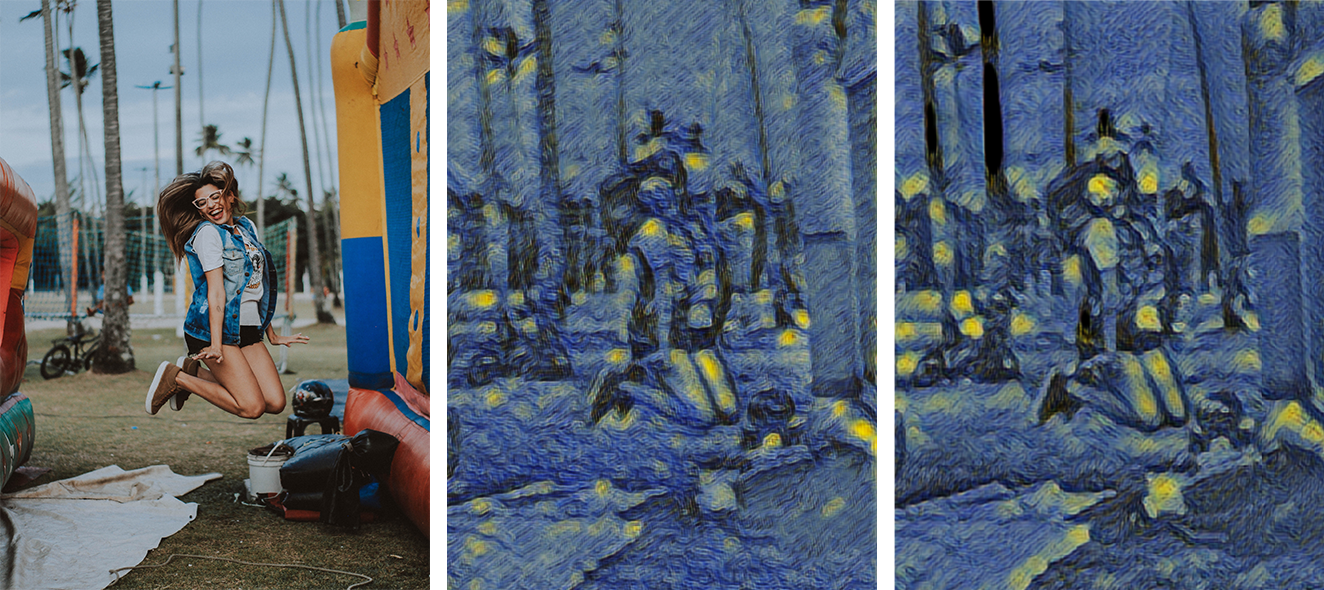

Left: Original image. Middle: Stylized image from the our small, 17KB model. Right: Stylized Image from the larger 7MB model.

Quick TL;DR.

Original model:

Size: 7MB

Number of Weights: 1.7M

Speed on iPhone X: 18 FPS

Tiny Model:

Size: 17KB

Number of Weights: 11,868

Speed on iPhone X: 29 FPS

Model optimization is only one part of a complicated development lifecycle for mobile ML projects.

How to shrink a style transfer model

It turns out that making a tiny model was actually pretty easy. I ended up relying on two techniques, both of which generalize to other models.

1. Ruthlessly pruning away layers and weights.

2. Converting 32bit floating point weights into 8bit integers through quantization.

Pruning Strategies

Let’s start with pruning. Convolutional neural networks typically contain millions or even hundreds of millions of weights that are tuned during training. As a general rule of thumb (I said general, don’t at me), more weights means higher accuracy. But the exchange is highly inefficient.

The stock configuration of Google’s MobileNetV2 has 3.47 million weights and takes up 16MB of space. The InceptionV3 architecture is almost 6 times larger with 24 million weights taking up 92MB. Despite containing more than 20 million additional weights, InceptionV3’s top-1 classification accuracy on ImageNet is only 7 percentage points higher than MobileNetV2 (80% vs 73%).

So if we have a neural network, we can assume most of the weights aren’t that useful and remove them. But how? There are three options: pruning at the individual weight level, the layer level, and the block level.

Weight level: As we’ve seen, the vast majority (like >95%) of trained weights in certain neural networks aren’t helpful. If we can identify which weights actually contribute to network accuracy, we can keep those and remove the rest.

Layer level: Weights are packaged inside individual layers. 2D convolution layers, for example, have a tensor of weights called a kernel with a user-defined width, height, and depth. Making the kernel smaller shrinks the size of the entire network.

Block level: Layers are typically combined into reusable subgraphs known as blocks. ResNets, for example, take their name from a “residual block” repeated 10 to 50 times over. Pruning at the block level removes multiple layers, and thus parameters, in one cut.

In practice, there aren’t good implementations of sparse tensor operations necessary to make weight level pruning worthwhile. Hopefully more is done in this area in the future. That leaves layer and block level pruning.

Pruning in practice

My favorite layer pruning technique is introducing a width multiplier as a hyper parameter. First introduced by Google in their famous MobileNet paper, it’s both shockingly simple and effective.

The width multiplier adjusts the number of filters in each convolution layer by a constant fraction. For a given layer and width multiplier alpha, the number of filters F becomes alpha * F. That’s it!

With this hyperparameter, we can generate a continuum of networks with the same architecture but different numbers of weights. Training each configuration, we can plot the tradeoff between a model’s speed and size and it’s accuracy.

Let’s take a look at a method that builds a fast style transfer model resembling the one Johnson et al describe, but this time, a width multiplier is added as a hyperparameter:

Note that the rest of the model builder class isn’t shown.

When alpha=1.0 , the resulting network contains 1.7M weights. When alpha=0.5 , we get a network with just 424,102 weights.

You can make some pretty small networks with low width parameters, but there are also quite a few repetitive blocks. I decided to prune some of those away, as well.

In practice, I found I couldn’t remove too many. Deeper networks produced better results, even while holding the number of parameters fixed.

I ended up removing two of the five residual blocks and decreased the default number of filters for every layer to 32. My small network ended up looking like this:

A smaller style transfer network with a width parameter.

Through trial and error, I found I could still achieve good stylization with the above architecture, all the way down to a width parameter of 0.3, leaving 9 filters on each layer. The end result: a neural network with only 11,868 weights. Anything less than 10,000 weights didn’t train consistently and produced poorly stylized images.

It’s worth mentioning that this pruning technique is applied before the network is trained. You can achieve better performance on many tasks by iteratively pruning during and after training. That’s pretty involved, though, and I’m impatient. If you’re interested, you can refer to this great post by Matthijs Hollemans for more information.

Quantization

The last piece of compression comes after the network has been trained. Neural network weights are typically stored as 64 or 32 bit floating point numbers. The process of quantization maps each of these floating point weights to an integer with a lower bit width. Going from 32 bit floating point weights to 8 bit integers reduces storage size by a factor of 4. Thanks to an awesome post by Alexis Creuzot, I knew that I could go down to 8 bit quantization with little impact on style.

Quantization is now supported by every major mobile framework including TensorFlow Mobile, TensorFlow Lite, Core ML, and Caffe2Go.

It takes a lot of tweaking and fine-tuning to move from V1 of a mobile-ready model to one that’s ready for production.

Final Results

To summarize, our tiny network architecture has 11,868 parameters, compared to the 1.7 million in Johnson’s original model.

When converted to Core ML and quantized, the final size is just 17KB compared to the original 1.7MB—just 0.10% of the original size. Here are the results trained on van Gogh’s Starry Night. I actually think I prefer the tiny version!

I was a bit surprised to find that, despite a 400X difference in size, the tiny model only ran 50% faster on my iPhone X. I’m not sure if that’s because we are compute bound with this general architecture or if the bottleneck is transferring the images to the GPU for processing.

If you don’t believe me, you can download and use the tiny model yourself. And even train your own! Everything is in the Fritz Style Transfer GitHub repo.

Conclusion

To summarize, I used two simple techniques to reduce the size of a style transfer neural network by 99.9%. Layers were pruned with a simple width multiplier hyper parameter and trained weights were quantized from 32 bit floats to 8 bit integers.

In the future, I’m interested to see how well these methods generalize to other neural networks. Style transfer is easy in the sense that “accuracy” is in the eye of the beholder. For a more quantifiable task like image recognition, we’d likely see much worse performance after such extreme pruning.

Discuss this post on Hacker News and Reddit

Comments 0 Responses