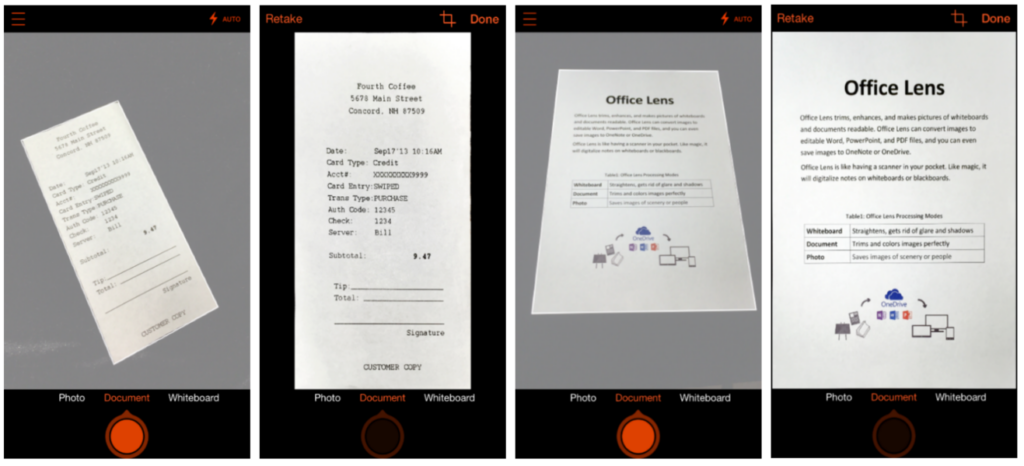

Nowadays, lots of mobile applications like Office Lens and Genius Scan can turn a physical document into a digital one using only a phone—you actually no longer need to have a bulky, costly scanner.

Let’s imagine you want to extract text from the scanned document…easy right? Well, not exactly—it’s actually one of the most challenging problems in computer vision.

In this article, I’ll first provide an overview of OCR (optical character recognition), which is the main technology used to solve this problem, and then I’ll compare two main libraries that use OCR to detect and recognize characters from a given image. Both are on-device tools, and they’re both made by two giants: Google and (more recently) Apple.

But before that, let’s understand what optical character recognition means.

What is OCR?

OCR (optical character recognition) is a technology that turns scanned text or documents into text you can edit on your smartphone or computer.

In other words, an OCR system automatically recognizes printed text and transcribes it into an electronic file. By scanning a document, the device is able to “read” its content.

OCR systems can recognize many different fonts, whether the text came from a typewriter or a computer printout. Some OCR systems can even identify handwriting.

The text that a smartphone or computer reads from a scanned document can then be used to automatically fill out a form, for instance. This is what happens when you scan a receipt to record an expense in billing software.

How does OCR work?

OCR systems analyze the image of a document you scan (a page of text or a photograph) and convert what they find into a text file.

For this, the OCR system compares the light and dark areas of the document to pick out the shape of each alphanumeric character. The system then recognizes each character and converts it into encoded text such as ASCII (American Standard Code for Information Interchange).

This allows you to edit, search, and copy the text just as you would in a Word document.
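To make the "compare black and white, then recognize each character" idea concrete, here is a deliberately tiny sketch of classic template matching. It is only an illustration: the 3x3 templates, the threshold of 128, and all function names are made up for this example, and modern engines such as the two compared in this article rely on machine learning models rather than this kind of pixel comparison.

```swift
/// A tiny glyph: rows of 0 (white) and 1 (black) pixels.
typealias Bitmap = [[Int]]

/// Turns grayscale values (0 = black ... 255 = white) into a black/white bitmap.
func binarize(_ gray: [[Int]], threshold: Int = 128) -> Bitmap {
    return gray.map { row in row.map { $0 < threshold ? 1 : 0 } }
}

/// Counts the pixels on which two equally sized bitmaps disagree.
func mismatches(_ a: Bitmap, _ b: Bitmap) -> Int {
    var count = 0
    for (rowA, rowB) in zip(a, b) {
        for (pixelA, pixelB) in zip(rowA, rowB) where pixelA != pixelB {
            count += 1
        }
    }
    return count
}

/// Returns the template character whose bitmap differs least from the glyph.
func recognize(_ glyph: Bitmap, templates: [Character: Bitmap]) -> Character? {
    let best = templates.min { lhs, rhs in
        mismatches(glyph, lhs.value) < mismatches(glyph, rhs.value)
    }
    return best?.key
}

// Hypothetical 3x3 templates for two characters.
let templates: [Character: Bitmap] = [
    "I": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
    "L": [[1, 0, 0], [1, 0, 0], [1, 1, 1]]
]

// A grayscale scan of an "L" with uneven ink (dark values are ink).
let scan = [[20, 240, 250], [35, 230, 245], [10, 60, 120]]
let recognized = recognize(binarize(scan), templates: templates)
```

Once a character has been identified this way, storing it as encoded text is what makes the result searchable and editable.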

Where is OCR used?

OCR is a versatile technology that’s used in many situations ranging from managing official documentation to facilitating recreational games.

For example, mail sorting centers often use an OCR system to manage and sort mail. This helps these centers classify mail faster and improve delivery times.

This system is also popular in smartphone apps. It’s useful for tasks such as scanning a receipt or recognizing a handwritten document.

Why is OCR so hard?

Current OCR software, generally speaking, already knows a large number of fonts and can also learn from new inputs. Even with recent developments in computer vision, recognizing handwriting, and in particular naturally written cursive text with linked characters, is still a challenge. The software must also know how to navigate a document (a newspaper page, for example) and stick to the text concerned by identifying its lines and ignoring other articles, photo captions, or advertising inserts.

Why an iOS application?

As I mentioned in the introduction, a lot of mobile apps do offer ways to scan documents or even whiteboards with the use of mobile cameras. But very few offer ways to transcribe and recognize the text from these same documents.

At the end of this tutorial, you’ll be able to extract text using two free on-device libraries.

Google MLVision — Firebase ML Kit:

Firebase ML Kit is the OCR technology offered by Google. There are two versions: an on-device library and a cloud-based one. It seems as though the on-device version isn’t as powerful as the cloud-based one, but the objective is to compare it to the native Apple version, which is also on-device.

Apple’s Text recognition in Vision Framework:

Vision is Apple’s framework for everything related to image and video processing. At WWDC 19, Apple announced support for OCR, which allows the detection and recognition of characters without any external library.

Create the App Skeleton:

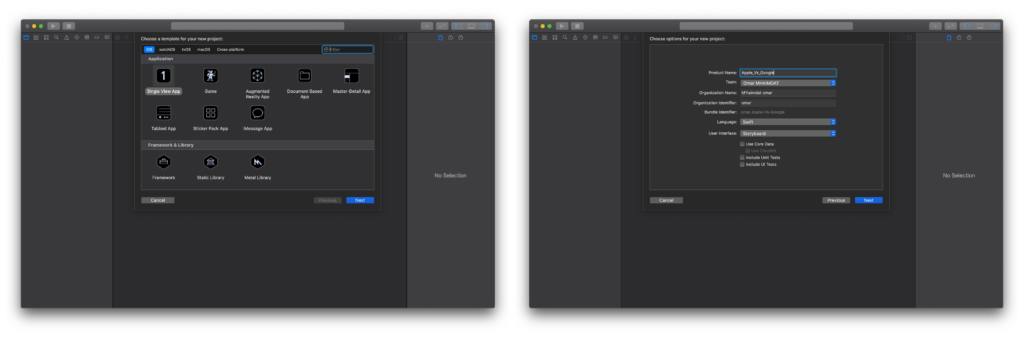

Create a new project:

To begin, create an iOS project using the Single View App template. Make sure to choose Storyboard in the “User Interface” dropdown menu (Xcode 11 only):

Now we have our project ready to go. I don’t like using storyboards myself, so the app in this tutorial is built programmatically, which means no dragging buttons or switches around in Interface Builder, just pure code 🤗.

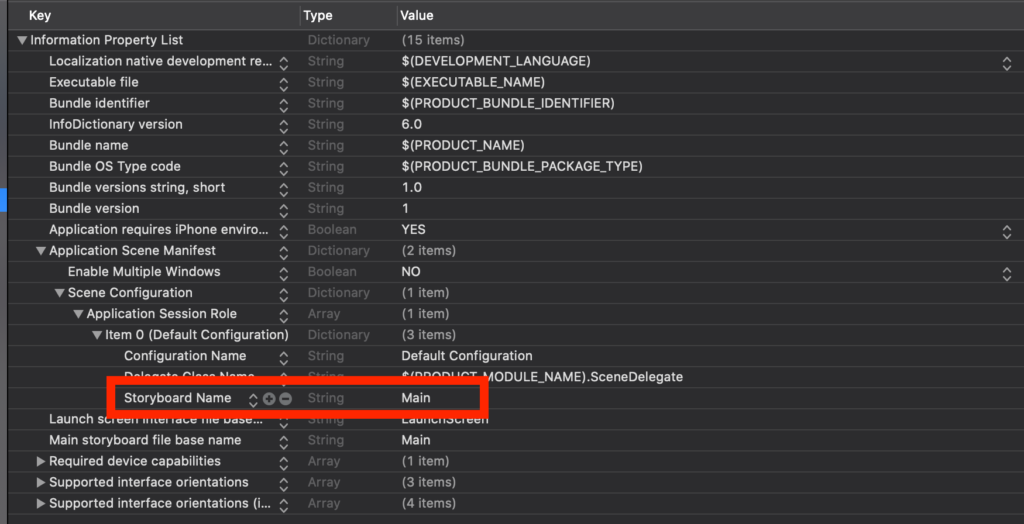

To follow this method, you’ll have to delete Main.storyboard and set up your SceneDelegate.swift file (Xcode 11 only) like so:

    func scene(_ scene: UIScene, willConnectTo session: UISceneSession, options connectionOptions: UIScene.ConnectionOptions) {
        // Use this method to optionally configure and attach the UIWindow `window` to the provided UIWindowScene `scene`.
        // If using a storyboard, the `window` property will automatically be initialized and attached to the scene.
        // This delegate does not imply the connecting scene or session are new (see `application:configurationForConnectingSceneSession` instead).
        guard let windowScene = (scene as? UIWindowScene) else { return }

        // Build the window in code, since the storyboard has been removed.
        window = UIWindow(frame: windowScene.coordinateSpace.bounds)
        window?.windowScene = windowScene
        window?.rootViewController = ViewController()
        window?.makeKeyAndVisible()
    }

With Xcode 11 you’ll have to change the Info.plist file like so:

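You need to delete the “Storyboard Name” entry in the file, and that’s about it. If the app still looks for the storyboard at launch, also clear the “Main Interface” field in the target’s General settings.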

Main ViewController

Now let’s set our ViewController with the buttons and a logo. I used some custom buttons in the application — you can obviously use the system button.

First, create your own custom button by subclassing UIButton. We inherit from UIButton because the custom button “is a” UIButton: we want to keep all of its behavior and only change its look:

    import UIKit

    class Button: UIButton {
        // awakeFromNib() only runs when the button is loaded from a storyboard or nib.
        override func awakeFromNib() {
            super.awakeFromNib()
            titleLabel?.font = UIFont(name: "Avenir", size: 12)
        }
    }

    import UIKit

    class BtnPlein: Button {
        override func awakeFromNib() {
            super.awakeFromNib()
        }

        var myValue: Int

        /// Constructor: - init
        override init(frame: CGRect) {
            // set myValue before super.init is called
            self.myValue = 0
            super.init(frame: frame)
            layer.borderWidth = 6/UIScreen.main.nativeScale
            layer.backgroundColor = UIColor(red:0.24, green:0.51, blue:1.00, alpha:1.0).cgColor
            setTitleColor(.white, for: .normal)
            titleLabel?.font = UIFont(name: "Avenir", size: 22)
            layer.borderColor = UIColor(red:0.24, green:0.51, blue:1.00, alpha:1.0).cgColor
            layer.cornerRadius = 5
        }

        required init?(coder aDecoder: NSCoder) {
            fatalError("init(coder:) has not been implemented")
        }
    }

    import UIKit

    class BtnPleinLarge: BtnPlein {
        override func awakeFromNib() {
            super.awakeFromNib()
            contentEdgeInsets = UIEdgeInsets(top: 0, left: 16, bottom: 0, right: 16)
        }
    }

BtnPleinLarge is our new button, and we use it to create our two main buttons for ViewController.swift, our main view.

I have two options in my application, so I’ll make one button for Apple and the other for Google.
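Since the app has exactly two backends, one way to represent the choice is a small enum. This is only a sketch of an alternative: the tutorial itself tracks the choice with a plain optional string further on, and the names `OCRService`, `buttonTitle`, `selectedService`, and `describe` are hypothetical.

```swift
/// The two OCR backends compared in this article.
enum OCRService: String, CaseIterable {
    case apple = "Apple"
    case google = "Google"

    /// Title shown on the matching button.
    var buttonTitle: String { rawValue }
}

// Hypothetical stand-in for the view controller's selection state.
var selectedService: OCRService? = nil
selectedService = .google

// An exhaustive switch: the compiler flags any backend left unhandled.
func describe(_ service: OCRService) -> String {
    switch service {
    case .apple:
        return "Vision (VNRecognizeTextRequest)"
    case .google:
        return "Firebase ML Kit (on-device text recognizer)"
    }
}
```

Compared with string values, a typo in a case name becomes a compile-time error instead of a branch that silently never matches.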

Now set the layout and buttons with some logic as well:

    // lazy var (rather than let) so that `self` refers to the view controller when the target is added.
    // resized(newSize:) and addRightImage(image:offset:) are helper extensions that aren't shown in this article.
    lazy var apple: BtnPleinLarge = {
        let button = BtnPleinLarge()
        button.translatesAutoresizingMaskIntoConstraints = false
        button.addTarget(self, action: #selector(buttonToUploadApple(_:)), for: .touchUpInside)
        button.setTitle("Apple", for: .normal)
        let icon = UIImage(named: "upload")?.resized(newSize: CGSize(width: 50, height: 50))
        button.addRightImage(image: icon!, offset: 30)
        button.backgroundColor = .systemBlue
        button.layer.borderColor = UIColor.systemBlue.cgColor
        button.layer.shadowOpacity = 0.3
        button.layer.shadowColor = UIColor.systemBlue.cgColor
        button.layer.shadowOffset = CGSize(width: 1, height: 5)
        button.layer.cornerRadius = 10
        button.layer.shadowRadius = 8
        // Don't clip to bounds, so the shadow stays visible.
        button.layer.masksToBounds = false
        button.contentHorizontalAlignment = .left
        button.layoutIfNeeded()
        button.contentEdgeInsets = UIEdgeInsets(top: 0, left: 0, bottom: 0, right: 20)
        button.titleEdgeInsets.left = 0
        return button
    }()

    lazy var google: BtnPleinLarge = {
        let button = BtnPleinLarge()
        button.translatesAutoresizingMaskIntoConstraints = false
        button.addTarget(self, action: #selector(buttonToUploadGoogle(_:)), for: .touchUpInside)
        button.setTitle("Google", for: .normal)
        let icon = UIImage(named: "upload")?.resized(newSize: CGSize(width: 50, height: 50))
        button.addRightImage(image: icon!, offset: 30)
        button.backgroundColor = #colorLiteral(red: 0.1215686277, green: 0.01176470611, blue: 0.4235294163, alpha: 1)
        button.layer.borderColor = #colorLiteral(red: 0.1215686277, green: 0.01176470611, blue: 0.4235294163, alpha: 1)
        button.layer.shadowOpacity = 0.3
        button.layer.shadowColor = #colorLiteral(red: 0.1215686277, green: 0.01176470611, blue: 0.4235294163, alpha: 1)
        button.layer.shadowOffset = CGSize(width: 1, height: 5)
        button.layer.cornerRadius = 10
        button.layer.shadowRadius = 8
        // Don't clip to bounds, so the shadow stays visible.
        button.layer.masksToBounds = false
        button.contentHorizontalAlignment = .left
        button.layoutIfNeeded()
        button.contentEdgeInsets = UIEdgeInsets(top: 0, left: 0, bottom: 0, right: 20)
        button.titleEdgeInsets.left = 0
        return button
    }()

We now need to set up some logic. First, update the Info.plist file with a property that explains to the user why the app needs access to their photo library: add some text for the “Privacy - Photo Library Usage Description” key:

    @objc func buttonToUploadApple(_ sender: BtnPleinLarge) {
        self.whatService = "Apple"
        if UIImagePickerController.isSourceTypeAvailable(.photoLibrary) {
            let imagePicker = UIImagePickerController()
            imagePicker.delegate = self
            imagePicker.sourceType = .photoLibrary
            imagePicker.allowsEditing = false
            self.present(imagePicker, animated: true, completion: nil)
        }
    }

    @objc func buttonToUploadGoogle(_ sender: BtnPleinLarge) {
        self.whatService = "Google"
        if UIImagePickerController.isSourceTypeAvailable(.photoLibrary) {
            let imagePicker = UIImagePickerController()
            imagePicker.delegate = self
            imagePicker.sourceType = .photoLibrary
            imagePicker.allowsEditing = false
            self.present(imagePicker, animated: true, completion: nil)
        }
    }

To differentiate between Google and Apple, I’ve created a variable like so:

    var whatService: String?

Of course, you’ll need to set up the layout and add the subviews to the view, too. I’ve added a logo on top of the view, as well:

    override func viewDidLoad() {
        super.viewDidLoad()
        view.backgroundColor = #colorLiteral(red: 0.8801638484, green: 0.9526746869, blue: 0.9862166047, alpha: 1)
        addSubviews()
        setupLayout()
    }

    func addSubviews() {
        view.addSubview(logo)
        view.addSubview(apple)
        view.addSubview(google)
    }

    // `logo` and the `safeTopAnchor` helper are not shown in this article.
    func setupLayout() {
        logo.centerXAnchor.constraint(equalTo: self.view.centerXAnchor).isActive = true
        logo.topAnchor.constraint(equalTo: self.view.safeTopAnchor, constant: 20).isActive = true

        google.centerXAnchor.constraint(equalTo: view.centerXAnchor).isActive = true
        google.bottomAnchor.constraint(equalTo: view.bottomAnchor, constant: -120).isActive = true
        google.widthAnchor.constraint(equalToConstant: view.frame.width - 40).isActive = true
        google.heightAnchor.constraint(equalToConstant: 80).isActive = true

        apple.centerXAnchor.constraint(equalTo: view.centerXAnchor).isActive = true
        apple.widthAnchor.constraint(equalToConstant: view.frame.width - 40).isActive = true
        apple.heightAnchor.constraint(equalToConstant: 80).isActive = true
        apple.bottomAnchor.constraint(equalTo: google.topAnchor, constant: -20).isActive = true
    }

Output ViewController: Where We Show Our Result

Here, we need three things:

1. Our original image:

    let inputImage: UIImageView = {
        let image = UIImageView(image: UIImage())
        image.translatesAutoresizingMaskIntoConstraints = false
        image.contentMode = .scaleAspectFit
        return image
    }()

2. A label with the results:

    let result: UILabel = {
        let label = UILabel()
        label.textAlignment = .justified
        label.font = UIFont(name: "Avenir", size: 16)
        label.textColor = .black
        label.translatesAutoresizingMaskIntoConstraints = false
        label.lineBreakMode = .byWordWrapping
        label.numberOfLines = 0
        label.sizeToFit()
        return label
    }()

3. A button to dismiss the view:

    // lazy var (rather than let) so that `self` refers to the view controller when the target is added.
    lazy var dissmissButton: BtnPleinLarge = {
        let button = BtnPleinLarge()
        button.translatesAutoresizingMaskIntoConstraints = false
        button.addTarget(self, action: #selector(buttonToDissmiss(_:)), for: .touchUpInside)
        button.setTitle("Done", for: .normal)
        button.backgroundColor = .systemRed
        button.layer.borderColor = UIColor.systemRed.cgColor
        return button
    }()

We need to add the subviews to the main view and set up the layout, too:

    override func viewDidLoad() {
        super.viewDidLoad()
        view.backgroundColor = #colorLiteral(red: 0.8801638484, green: 0.9526746869, blue: 0.9862166047, alpha: 1)
        addSubviews()
        setupLayout()
    }

    func addSubviews() {
        view.addSubview(dissmissButton)
        view.addSubview(inputImage)
        view.addSubview(result)
    }

    // `safeLeftAnchor` and `safeRightAnchor` are helpers that are not shown in this article.
    func setupLayout() {
        dissmissButton.centerXAnchor.constraint(equalTo: view.centerXAnchor).isActive = true
        dissmissButton.heightAnchor.constraint(equalToConstant: 60).isActive = true
        dissmissButton.widthAnchor.constraint(equalToConstant: view.frame.width - 40).isActive = true
        dissmissButton.bottomAnchor.constraint(equalTo: view.bottomAnchor, constant: -100).isActive = true

        inputImage.centerXAnchor.constraint(equalTo: view.centerXAnchor).isActive = true
        inputImage.centerYAnchor.constraint(equalTo: view.centerYAnchor, constant: -150).isActive = true
        inputImage.widthAnchor.constraint(equalToConstant: view.frame.width - 50).isActive = true

        result.centerXAnchor.constraint(equalTo: view.centerXAnchor).isActive = true
        result.bottomAnchor.constraint(equalTo: dissmissButton.topAnchor, constant: -40).isActive = true
        result.leftAnchor.constraint(equalTo: view.safeLeftAnchor, constant: 20).isActive = true
        result.rightAnchor.constraint(equalTo: view.safeRightAnchor, constant: -20).isActive = true
    }

Install Firebase ML-Kit:

I used CocoaPods to install the package. You’ll need to install three pods in order to access the text recognition API:

To install the pods and set up your dependencies, check out the CocoaPods website. As for Firebase, you can check out the official documentation, which is straightforward. Then, download the on-device text recognition API.

Here, I’ve created a function with a completion block that returns a string:

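    import Firebase

    func googleMlOCR(image: UIImage, completion: @escaping (String?) -> ()) {
        // Entry point to the ML Kit vision APIs.
        let vision = Vision.vision()
        let textRecognizer = vision.onDeviceTextRecognizer()
        let visionImage = VisionImage(image: image)
        textRecognizer.process(visionImage) { result, error in
            if let error = error {
                // `error as? String` is always nil, so pass the error's description instead.
                completion(error.localizedDescription)
            } else {
                completion(result?.text)
            }
        }
    }

Apple’s VNRecognizeTextRequest (The challenger):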

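    import Vision

    // Runs inside the method that receives `pickedImage` from the image picker.
    // `recognizedTxt` is assumed to be a `String?` property on the view controller.
    guard let cgImage = pickedImage.cgImage else { return }
    let requestHandler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    let request = VNRecognizeTextRequest { (request, error) in
        guard let observations = request.results as? [VNRecognizedTextObservation] else { return }
        var lines = [String]()
        for currentObservation in observations {
            // Keep the most confident candidate for each detected piece of text.
            if let recognizedText = currentObservation.topCandidates(1).first {
                lines.append(recognizedText.string)
            }
        }
        self.recognizedTxt = lines.joined(separator: "\n")
        print(self.recognizedTxt!)
    }
    request.recognitionLevel = .accurate
    request.usesLanguageCorrection = true
    try? requestHandler.perform([request])

The results: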

When it comes to testing OCR results, you have to be very careful with the set of images you want to test on.

I’ve tried to give you examples that range from fairly easy to challenging. I will judge them based on the following criteria:

- Typed text: The number of errors will be based on the amount of extracted text that isn’t exactly the same as the text on the input image. For simplicity, each mistake by the OCR counts as one error.

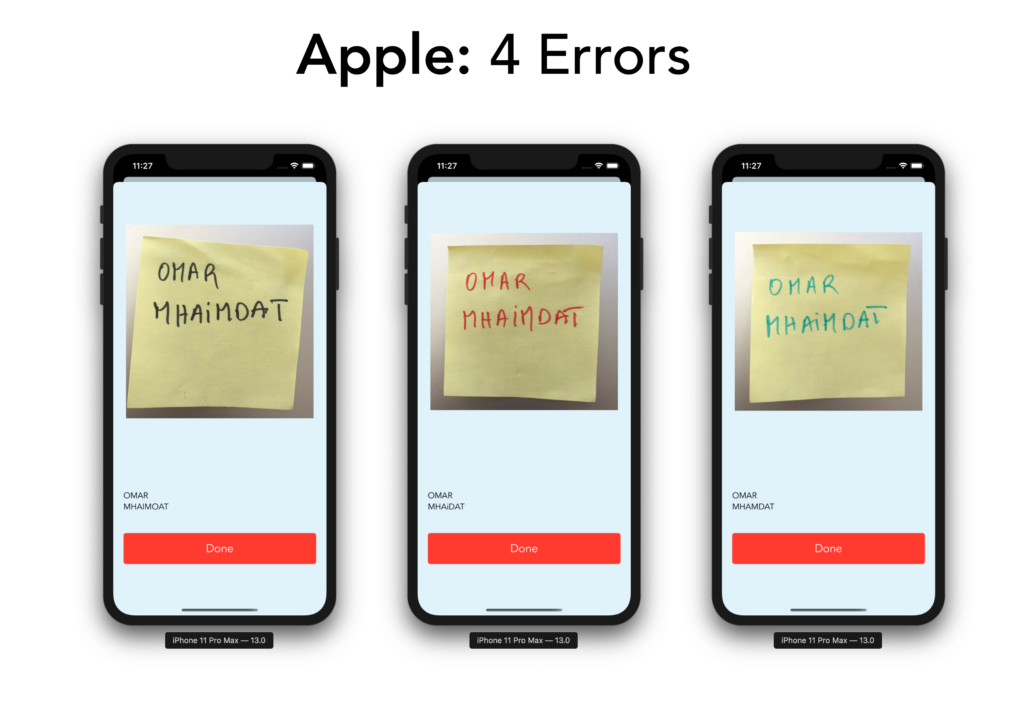

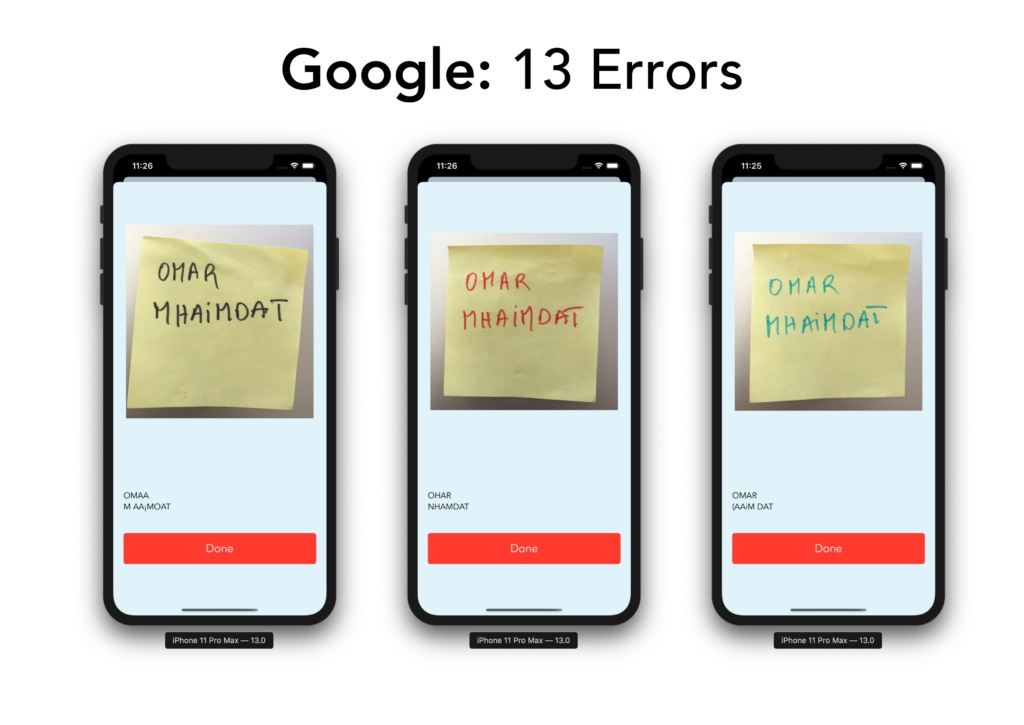

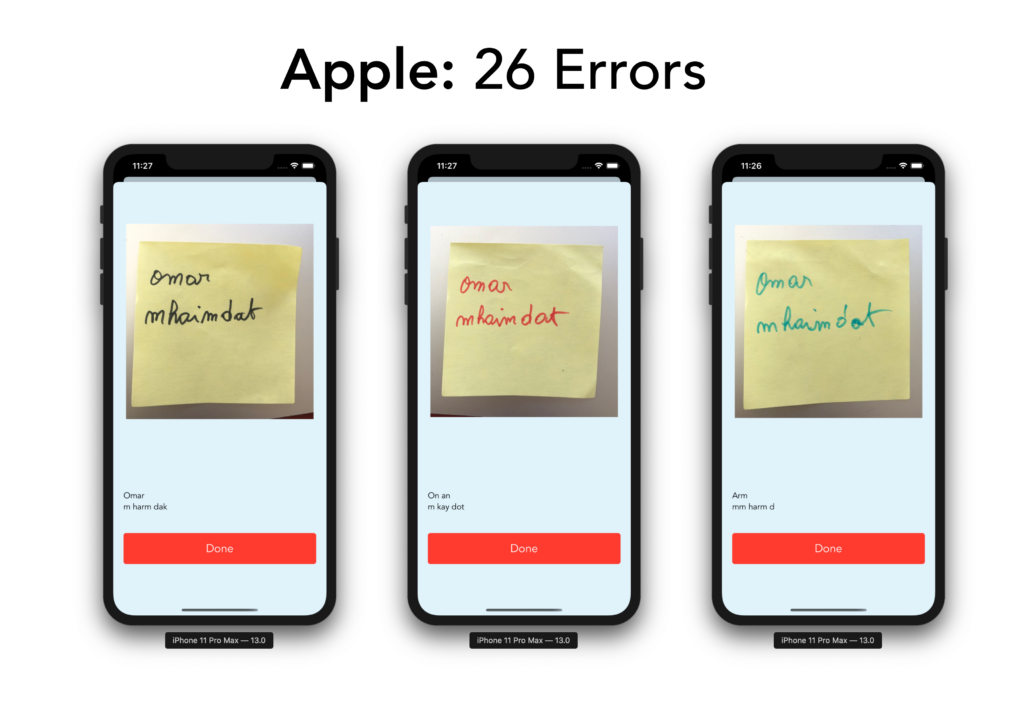

- Handwritten text: Because recognizing handwritten text is a much harder challenge, I’ll be more specific: the number of errors will be based on the number of extracted characters that aren’t exactly the same as in the input image. Every character counts, including whether it’s uppercase or lowercase.

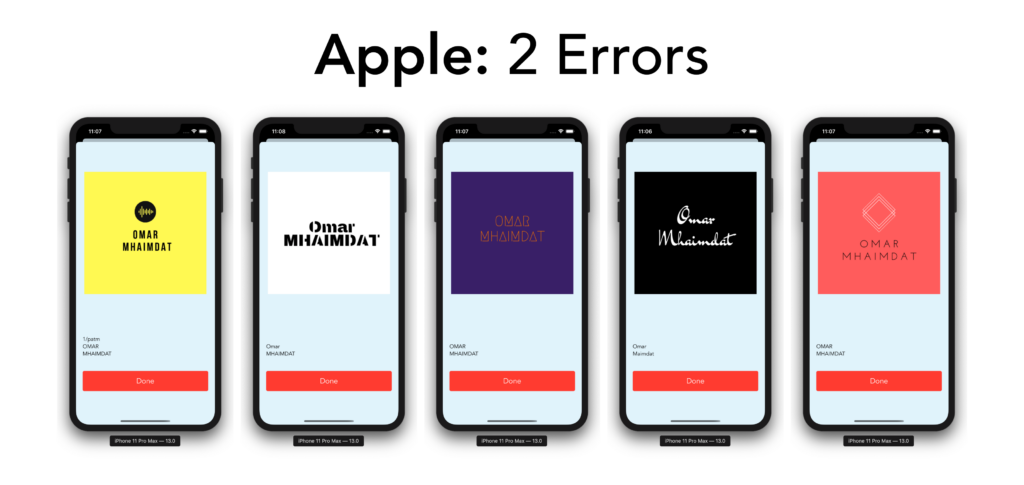

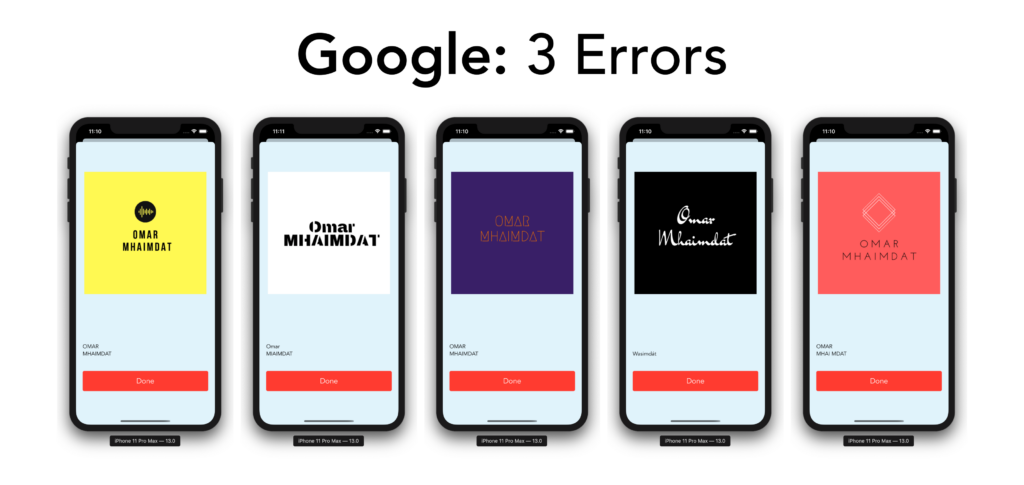

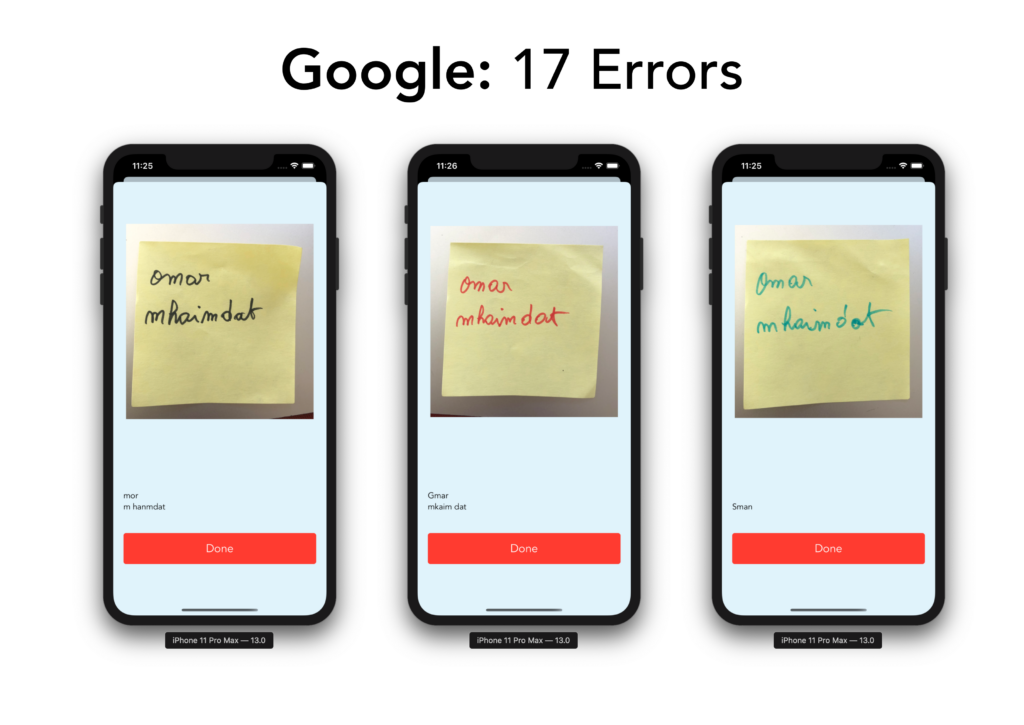

I used my name “Omar MHAIMDAT” as a way to test the performance of the two libraries.
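The character-level criterion above can be approximated in code with an edit distance. The sketch below uses the Levenshtein distance, which is my own choice of metric rather than a description of how the errors in this article were counted, and the "recognized" strings are made-up inputs, not actual output from either library.

```swift
/// Character-level edit distance (Levenshtein): the minimum number of
/// insertions, deletions, and substitutions that turn `recognized` into `expected`.
/// The comparison is case-sensitive, so "o" vs "O" counts as one error.
func characterErrors(expected: String, recognized: String) -> Int {
    let source = Array(recognized)
    let target = Array(expected)
    if source.isEmpty { return target.count }
    if target.isEmpty { return source.count }

    // At the start of iteration i, previous[j] is the distance between the
    // first i-1 source characters and the first j target characters.
    var previous = Array(0...target.count)
    for i in 1...source.count {
        var current = [i] + Array(repeating: 0, count: target.count)
        for j in 1...target.count {
            let substitution = previous[j - 1] + (source[i - 1] == target[j - 1] ? 0 : 1)
            current[j] = min(substitution, previous[j] + 1, current[j - 1] + 1)
        }
        previous = current
    }
    return previous[target.count]
}

// Hypothetical recognition results for the test name.
let exact = characterErrors(expected: "Omar MHAIMDAT", recognized: "Omar MHAIMDAT")
let wrongCase = characterErrors(expected: "Omar MHAIMDAT", recognized: "omar MHAIMDAT")
let missingLetter = characterErrors(expected: "Omar MHAIMDAT", recognized: "Omar MHAIMDT")
```

Dividing the result by the length of the expected text would give an error rate that is comparable across images of different lengths.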

Typed text:

1. The easy part:

No mistakes whatsoever—it’s interesting to see them both capturing every single detail from the images, even with different fonts and backgrounds.

2. The challenging part:

Honestly, I wasn’t expecting these results. I thought to myself, there’s no way they would predict any of this text correctly. I was wrong—not only did they do a great job, but they were even able to capture harder fonts, like the third and fourth ones.

Handwritten text:

1. The easy part:

2. The challenging part:

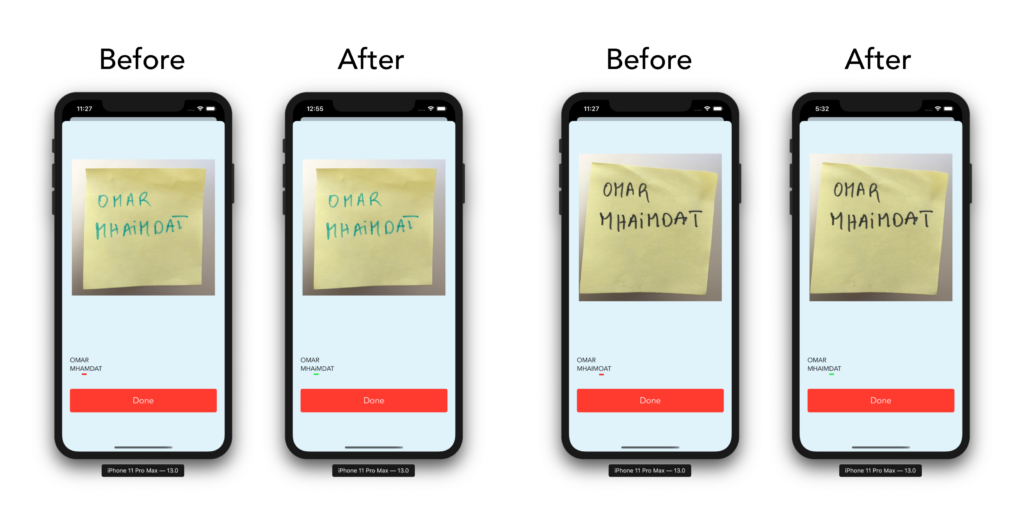

This is probably the most impressive part of Apple’s API. I’m stunned by the results, not only because it’s the first version, but also because in reality my last name isn’t common, which makes it pretty hard to correct with a simple classifier.

And for Apple to compete with Google on this task would be quite the challenge: Google has been in this game for almost fifteen years now, sponsoring Tesseract, one of the most widely used open source OCR libraries, since 2006 and digitizing a huge number of books (even turning the effort into a service called Google Books).

With more data and more development time, Apple can clearly compete on this front, and I think their next version will mark a significant improvement. But you have to remember that neither one of these OCR technologies performed perfectly — even Google’s.

One way to improve Apple’s output is to add language correction and to add custom words, like so:

    request.usesLanguageCorrection = true
    request.customWords = ["Omar", "MHAIMDAT"]

This alone improved the results:

Conclusion and final thoughts:

I remember trying other third-party libraries a while back, such as TesseractOCR for iOS, SwiftOCR, and Google MLVision. I concluded at the time that Google MLVision was crushing them all and was by far the best option.

As of today, I can say that Apple is a little bit ahead, for one simple reason that will resonate with every developer. YOU DON’T NEED ANOTHER DEPENDENCY! You can get the same or even better results with a native Swift API made by Apple.

Apple is really giving value to iOS developers with the new set of “ML” APIs. They’ve done a great job at making them simple to use and easy to extend and customize.

If you liked this tutorial, please share it with your friends. If you have any questions, don’t hesitate to send me an email.

This project is available to download from my GitHub account.
