WWDC 2020

Over the last few years, Apple has been pushing hard in the augmented reality department. From a simple, finicky API a few years ago, ARKit has grown into a seamless integration of cutting-edge hardware and advanced software. With the introduction of ARKit 4 at WWDC20, augmented reality connects virtual objects to the real world better than ever. In this article, you’ll learn about the latest from Apple in augmented reality.

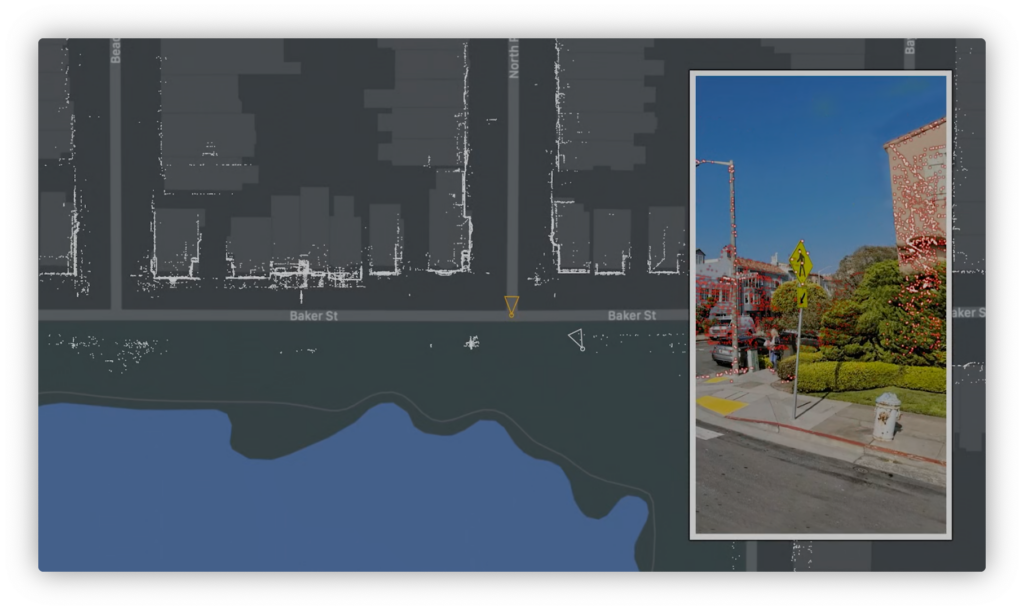

Location Anchors

Last year, Apple announced the ability to share augmented reality experiences across devices — so two users could simultaneously interact with the same experience. This year, ARKit will allow augmented reality scenes to be linked to physical locations.

Using a technique that Apple calls “visual localization,” ARKit uses machine learning to match the physical scene around the device to 3D data from Apple Maps. This way, a virtual object can remain anchored to a real-world location and appear in the exact same position every time. Apple’s map data already contains feature points, which means that you don’t need to handle any of the location matching yourself. It’s all handled by the API!

Location anchors are available for development in most major US cities and are compatible with iOS devices that have a GPS chip and an A12 Bionic chip or newer. The API requires you to check for these capabilities before starting the augmented reality experience. Location anchoring is by far one of the biggest updates in ARKit 4.
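The capability check described above could look roughly like the following. This is a minimal sketch, not a complete implementation: the function name `startGeoTracking(on:)` and the coordinate are placeholders chosen for illustration, and a real app would also need location permission and proper error handling.

```swift
import ARKit
import CoreLocation

/// Starts geo tracking and adds one location anchor, if the device and area support it.
/// The function name and the coordinate below are illustrative assumptions.
@available(iOS 14.0, *)
func startGeoTracking(on session: ARSession) {
    // Device-level check: geo tracking needs GPS and an A12 Bionic chip or newer.
    guard ARGeoTrackingConfiguration.isSupported else {
        print("Geo tracking is not supported on this device.")
        return
    }

    // Location-level check: Apple must have localization data for the current area.
    ARGeoTrackingConfiguration.checkAvailability { available, error in
        guard available else {
            print("Geo tracking unavailable here: \(error?.localizedDescription ?? "unknown reason")")
            return
        }

        DispatchQueue.main.async {
            session.run(ARGeoTrackingConfiguration())

            // Placeholder coordinate, used only for illustration.
            let coordinate = CLLocationCoordinate2D(latitude: 37.7954, longitude: -122.3937)
            session.add(anchor: ARGeoAnchor(coordinate: coordinate))
        }
    }
}
```

Checking `isSupported` first avoids a network round trip on devices that can never run geo tracking, while `checkAvailability` covers the case where the hardware is fine but the user is outside a supported city.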

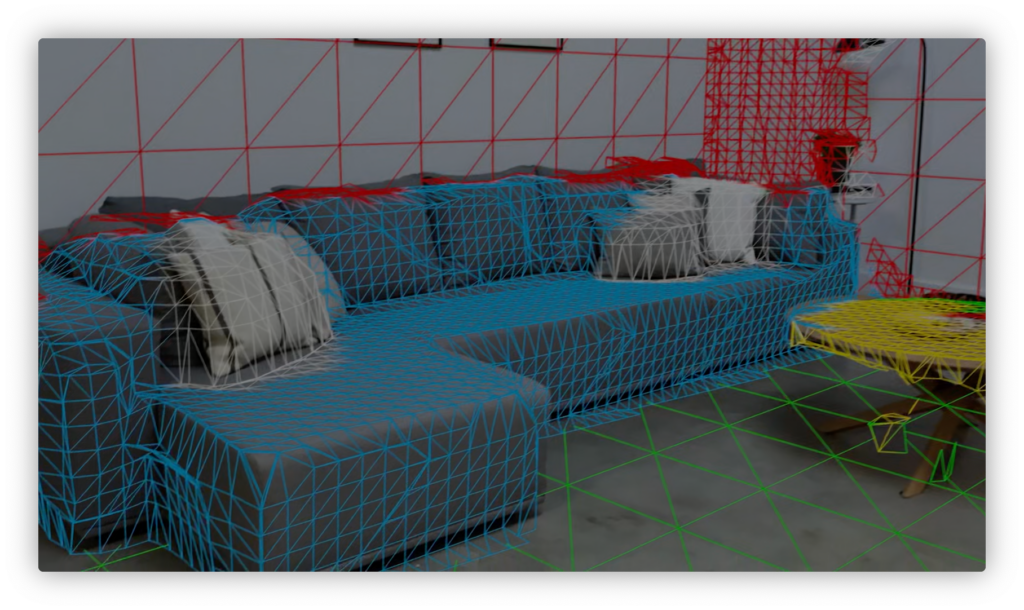

Scene Geometry

Using the iPad Pro’s LiDAR scanner, ARKit 3.5 could create an accurate topographical map of the surroundings. The LiDAR sensor, in short, shoots laser beams at the environment and then uses a scanner to measure how long the light takes to reflect back to the sensor.

The idea is that the longer the light takes to come back, the farther away the object is. If you want, you can learn more about this in one of my previous articles.
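The time-of-flight idea boils down to one line of arithmetic: distance is the speed of light times the round-trip time, divided by two. Here is a simplified sketch of that calculation. The function name is made up for illustration, and the actual sensor processing is certainly more involved than this.

```swift
/// Speed of light in a vacuum, in meters per second.
let speedOfLight = 299_792_458.0

/// Converts a round-trip travel time (in seconds) into a one-way distance (in meters).
/// Hypothetical helper for illustration; not part of ARKit.
func distance(forRoundTripTime seconds: Double) -> Double {
    // The pulse travels to the surface and back, so halve the total path.
    return speedOfLight * seconds / 2
}

// A pulse that returns after 20 nanoseconds hit something just under 3 meters away.
let meters = distance(forRoundTripTime: 20e-9)
print(meters)
```

The tiny time scale is the interesting part: resolving room-sized distances means timing light over a few nanoseconds.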

With the release of ARKit 4, semantic segmentation of scenes is now possible, which allows us to recognize objects in a given scene for more dynamic augmented reality experiences. Instead of only creating a map, ARKit can detect what types of objects are present in the map.

Live object recognition lets augmented reality experiences occlude (or cover) virtual objects with physical ones and tell different kinds of objects apart. For example, you could have a virtual pet be occluded by living room couches but not by the table. This fine-tuned control over the experience has tremendous potential for developers.
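As a rough sketch of how this might be switched on in a RealityKit app, the snippet below enables mesh reconstruction with classification and asks RealityKit to occlude virtual content behind the reconstructed geometry. The function name is an assumption for illustration. Note that this simple option occludes against all reconstructed surfaces; occluding only certain categories (couches but not tables) would require inspecting the classification data on each mesh anchor yourself.

```swift
import ARKit
import RealityKit

/// Enables classified scene reconstruction and real-world occlusion where supported.
/// The function name is an illustrative assumption.
@available(iOS 13.4, *)
func enableSceneUnderstanding(in arView: ARView) {
    let configuration = ARWorldTrackingConfiguration()

    // Only LiDAR-equipped devices can reconstruct a classified mesh.
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.meshWithClassification) {
        configuration.sceneReconstruction = .meshWithClassification

        // Hide virtual content that sits behind reconstructed real-world geometry.
        arView.environment.sceneUnderstanding.options.insert(.occlusion)
    }

    arView.session.run(configuration)
}
```

On devices without LiDAR, the session still runs with plain world tracking, so the experience degrades instead of failing.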

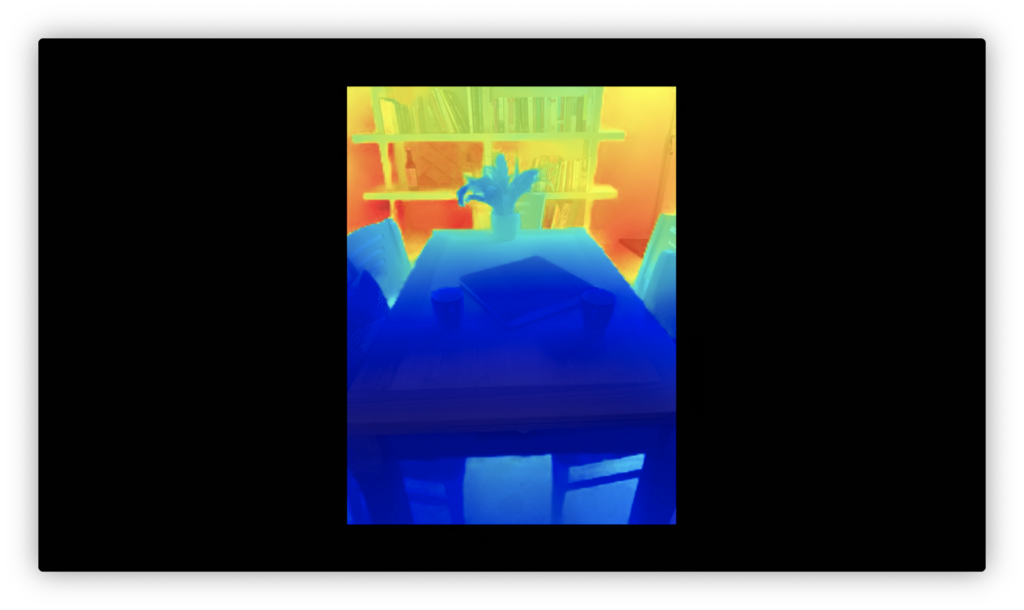

Depth

The new Depth API provides detailed information about the topography of the environment. A depth map is available for each frame of a live video feed at up to 60 frames per second, which is a lot of frames to do depth analysis on! The API combines data from the LiDAR sensor with the camera image to generate a dense depth map.

In addition to a depth map, the Depth API also provides a confidence map, which indicates how accurate each depth reading is. This can help developers decide when to rely on the depth map and when to use an alternate method for determining depth. The depth and confidence maps are lower in resolution than the camera image, and each of their pixels stores a depth or confidence value rather than a color.
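To make the depth and confidence maps more concrete, here is a hedged sketch that enables scene depth and reads the depth at the center of a frame, but only when the confidence there is high. The function names are made up for illustration, and the code assumes the two maps share the same dimensions, with depth stored as 32-bit floats in meters and confidence as one byte per pixel.

```swift
import ARKit

/// Enables per-frame scene depth on devices that support it (LiDAR-equipped).
@available(iOS 14.0, *)
func runDepthSession(on session: ARSession) {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) else {
        print("Scene depth is not supported on this device.")
        return
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.frameSemantics.insert(.sceneDepth)
    session.run(configuration)
}

/// Returns the depth in meters at the center of the depth map, or nil when
/// depth is missing or the reading there is not high confidence.
@available(iOS 14.0, *)
func centerDepth(in frame: ARFrame) -> Float? {
    guard let depthData = frame.sceneDepth,
          let confidenceMap = depthData.confidenceMap else { return nil }
    let depthMap = depthData.depthMap

    CVPixelBufferLockBaseAddress(depthMap, .readOnly)
    CVPixelBufferLockBaseAddress(confidenceMap, .readOnly)
    defer {
        CVPixelBufferUnlockBaseAddress(confidenceMap, .readOnly)
        CVPixelBufferUnlockBaseAddress(depthMap, .readOnly)
    }

    guard let depthBase = CVPixelBufferGetBaseAddress(depthMap),
          let confidenceBase = CVPixelBufferGetBaseAddress(confidenceMap) else { return nil }

    // Assumption: both maps have the same dimensions, so one (x, y) indexes both.
    let x = CVPixelBufferGetWidth(depthMap) / 2
    let y = CVPixelBufferGetHeight(depthMap) / 2

    // Assumption: confidence is one UInt8 per pixel holding an ARConfidenceLevel raw value.
    let confidenceRow = confidenceBase + y * CVPixelBufferGetBytesPerRow(confidenceMap)
    let rawConfidence = confidenceRow.assumingMemoryBound(to: UInt8.self)[x]
    guard rawConfidence == UInt8(ARConfidenceLevel.high.rawValue) else { return nil }

    // Assumption: depth is one Float32 per pixel, in meters.
    let depthRow = depthBase + y * CVPixelBufferGetBytesPerRow(depthMap)
    return depthRow.assumingMemoryBound(to: Float32.self)[x]
}
```

Returning `nil` for low-confidence readings is one way to signal the caller to fall back to another method, such as ray casting against detected planes.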

Object Placement

Ray casting is a technique used to place virtual objects in augmented reality environments, and it has been optimized for precision in ARKit 4, especially on devices with LiDAR sensors. In the past, hit testing was the recommended technique for ARKit experiences, but Apple now recommends ray casting instead.
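In a RealityKit app, placing an object with ray casting might look something like this. It is a minimal sketch: the function name and the anchor name `"placedObject"` are placeholders, and attaching visible content to the anchor is left out.

```swift
import ARKit
import RealityKit

/// Adds an anchor where a ray from the given screen point hits a horizontal surface.
/// The function name and anchor name are illustrative assumptions.
func placeAnchor(at screenPoint: CGPoint, in arView: ARView) {
    // Cast a ray from the touch location into the scene.
    let results = arView.raycast(from: screenPoint,
                                 allowing: .estimatedPlane,
                                 alignment: .horizontal)

    guard let firstHit = results.first else {
        print("No surface found at that point.")
        return
    }

    // Anchor content at the world-space position and orientation of the hit.
    let anchor = ARAnchor(name: "placedObject", transform: firstHit.worldTransform)
    arView.session.add(anchor: anchor)
}
```

You would typically call this from a tap gesture handler, passing in the tap location in the view’s coordinate space.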

If you aren’t familiar with ray casting, it’s a technique that projects a mathematical ray from a point on the screen into the scene and finds where that ray intersects a surface, such as a plane in 3D space. Compared with hit testing, ray casting allows for faster and more precise object placement.
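The math behind a ray hitting a plane is short enough to write out. The following is a framework-free sketch of a ray-plane intersection, not ARKit’s actual implementation; the `Vector3` type and the `intersection` function are made up for this example.

```swift
/// Minimal 3D vector type so the example needs no frameworks (illustrative only).
struct Vector3: Equatable {
    var x: Double
    var y: Double
    var z: Double

    static func + (a: Vector3, b: Vector3) -> Vector3 {
        return Vector3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z)
    }
    static func - (a: Vector3, b: Vector3) -> Vector3 {
        return Vector3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z)
    }
    static func * (v: Vector3, s: Double) -> Vector3 {
        return Vector3(x: v.x * s, y: v.y * s, z: v.z * s)
    }
    func dot(_ other: Vector3) -> Double {
        return x * other.x + y * other.y + z * other.z
    }
}

/// Returns the point where a ray hits a plane, or nil if the ray is parallel
/// to the plane or the plane lies behind the ray's origin.
func intersection(rayOrigin: Vector3, rayDirection: Vector3,
                  planePoint: Vector3, planeNormal: Vector3) -> Vector3? {
    let denominator = rayDirection.dot(planeNormal)
    // A near-zero denominator means the ray runs parallel to the plane.
    guard abs(denominator) > 1e-9 else { return nil }

    // Distance along the ray (in units of rayDirection) to the plane.
    let t = (planePoint - rayOrigin).dot(planeNormal) / denominator
    // A negative t means the plane is behind the ray.
    guard t >= 0 else { return nil }

    return rayOrigin + rayDirection * t
}

// A camera 1 m above the floor, looking forward and down at 45 degrees.
let hit = intersection(rayOrigin: Vector3(x: 0, y: 1, z: 0),
                       rayDirection: Vector3(x: 0, y: -1, z: 1),
                       planePoint: Vector3(x: 0, y: 0, z: 0),
                       planeNormal: Vector3(x: 0, y: 1, z: 0))
// hit is the point (0, 0, 1): on the floor, 1 m in front of the camera.
```

ARKit does the equivalent work against detected planes and reconstructed meshes, and hands you the result as a world transform.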

Face Tracking

Face tracking was previously only available on devices with a TrueDepth camera, in other words, devices that support Face ID. ARKit 4 extends support to all devices with an A12 processor or newer. The API no longer requires a TrueDepth camera to operate, making face tracking capabilities more widely accessible.
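Because support now depends on the device rather than on one specific camera, it is safest to ask ARKit directly instead of guessing from the hardware model. A minimal sketch, with a made-up function name:

```swift
import ARKit

/// Starts a face tracking session if the current device supports it.
/// Returns false when face tracking is unavailable. (Illustrative helper name.)
func startFaceTracking(on session: ARSession) -> Bool {
    // Let ARKit decide; this covers both TrueDepth and newer non-TrueDepth devices.
    guard ARFaceTrackingConfiguration.isSupported else {
        return false
    }

    session.run(ARFaceTrackingConfiguration())
    return true
}
```

The Boolean return value lets the caller show a fallback experience on unsupported devices.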

With the release of ARKit 4, augmented reality is more closely connected to the physical environment than ever before. If you’d like to start using the new APIs, they’re currently in beta and available via macOS Big Sur, iOS 14, and Xcode 12. If you want to start building your own augmented reality experiences, check out my tutorials on Reality Composer.

