It’s widely speculated that Apple will at some point transition the Mac platform from Intel-based processors to the very same ARM-based systems that power the iPhone and iPad.

For some, this possibility is no longer speculation but fact, and the only real question is simply: when will Apple make the jump?

And how?

Roadmap One: The Low Road

The most commonly-accepted transition path looks like this:

Apple reboots the MacBook Air as an ultra-thin and light mobile notebook that runs macOS on ARM. This new MacBook Air provides the horsepower most people need to perform their common, day-to-day tasks.

This new machine is Apple’s direct answer to Google’s Chromebook and the ARM-powered Surface notebooks produced by Microsoft. It runs any macOS app the user can download from the App Store, and also supports many, many, many iPad apps brought over via Catalyst.

Moving up the chain

Later MacBooks follow, with ARM chips eventually moving up the chain into the MacBook Pro and even onto the desktop via iMac.

Critics will protest that ARM simply isn’t ready yet. That it’s not — and some say it can never be — a replacement for the higher-end Intel processors used in Apple’s Pro notebooks and desktops.

But is that true?

Their arguments basically boil down to three main points.

- Those concerning performance

- Those about running macOS on ARM

- Those concerning Intel compatibility

Let’s delve into those now.

1. ARM Performance

Several sets of benchmarks indicate that Apple’s current ARM-based processors are at least as fast as today’s Intel Core i5s, and even mid-range i7s, in both single-threaded and multi-threaded performance.

When Apple introduced the new iPad Pro, it even went as far as to say that its latest tablet was faster than 92% of all portable PCs sold over the previous year.

That’s 92%, in a tablet that’s 1/4″ thick and without a fan. Think about it.

Critics will dispute those numbers and point out, rightly, that the A12X can’t sustain those speeds, hobbled as it is by the iPad’s power budget and thermal characteristics.

But what if it weren’t? Apple has never shown us an A-series chip with a notebook’s power budget and a notebook’s heat sinks and cooling system…and one has to wonder how a current-generation A12X would fare under those conditions…

Nor has Apple shown us what would happen if its engineers set out to build a custom notebook-class ARM chip, though purported benchmarks point to some staggering scores for an as-yet-unannounced 12-core processor.

Regardless, Apple’s engineers are not known for resting on their laurels, and it’s all but certain that a newer, better, and faster A13 chip for iOS is getting ready to step onto the stage in just a few months’ time.

2. Running macOS on ARM

This is somewhat tied to performance, with the primary idea being that ARM isn’t capable of running a “real” multitasking operating system. Critics again point to how iOS was hobbled and incapable of performing “true” multithreaded background tasks.

And again, it’s true that iOS was hobbled in this regard…by Apple.

The primary culprit in this case, however, wasn’t the performance or capabilities of the ARM chipset, but the battery life.

Modern mobile devices are a miracle of present-day engineering and battery technology, but earlier devices ran cruder processors, used less efficient displays and more power-hungry radios, and relied on less capable batteries that still had to last the length of an average user’s day.

Let one app’s errant background process continually poll a server for social media updates, and that process could drain the battery down to zero and leave the user without a phone just when they might have needed it the most.

So Apple deliberately restricted third-party access to background tasks and processing.

iOS uses essentially the same Mach kernel and BSD Unix subsystems as macOS, so it’s not that the OS and chip couldn’t handle the processes involved; the devices simply lacked the battery capacity to do so.

3. Intel Compatibility

When Apple made its second processor transition, from PowerPC to Intel, one of the many claimed benefits was that Macs now ran on the same Intel processors that powered Windows PCs, and as such users could now run Windows software on their Macs.

In 2005, Apple was still struggling, and the world had yet to catch even a glimpse of the iPhone. Apple knew it had to move on from IBM’s overly restrictive roadmap, and that Intel was its only real choice.

Nor could it be sure of convincing the rest of the industry to port its software to the Mac.

In 2006, Apple released the first version of Boot Camp so users could dual-boot OS X and Windows and run Windows software. Buy a single machine, and use the operating system best suited for the job at hand.

Apple also worked with VMware and Parallels, whose virtualization software let Windows-based software run inside virtual machines and even integrate directly with the Mac desktop.

Thus armed, a good percentage of Mac users could use engineering software that ran on Windows, run AutoCAD, and run Visio and Microsoft Project and Word and Excel.

Those were the days. Those were the days, in fact.

Intel’s Failures

Today, however, is not yesterday, and the world has changed. Today Word and Excel can be downloaded from the App Store. AutoCAD runs directly on macOS. And much of the world’s software isn’t desktop-based, but internet-based.

But today Apple also has pretty much the same problem with Intel that it once had with IBM and PowerPC: complete and total reliance on a third-party company to provide the chips that power its entire notebook and desktop lineup.

It’s okay to be dependent on someone if that someone is reliable…not wonderful, you understand, but okay.

But what happens when they’re not?

For the past eight years, Intel has missed goal after goal on its processor roadmap, and Apple has caught a lot of flak from users and the tech industry over its lack of innovation and its inability to bring updated computers and notebooks to market. Heck, I’ve given them a lot of flak.

Apple has caught the flak, but to be fair, it’s rather difficult to bring new computers to market when your primary processor supplier repeatedly fails to deliver on its promises.

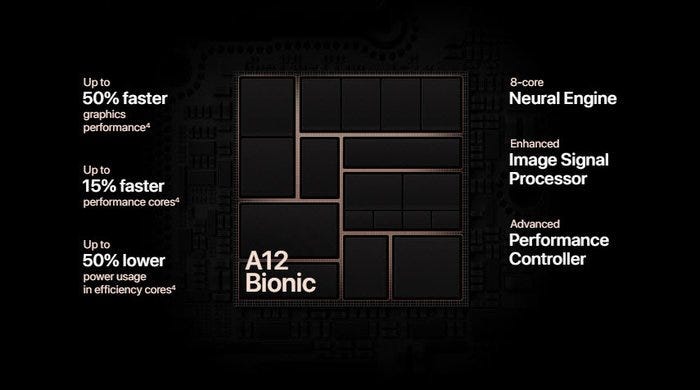

Intel is just now struggling to shift from a 14nm manufacturing process to a 10nm process for their chips, whereas Apple’s A12 chip series is manufactured by TSMC today using a 7nm process.

Apple’s chips are already a full process generation beyond the point Intel is only now reaching.

And it’s not just about the process used to make the chips.

Apple’s Bionic Chip and the Neural Engine

One thing that many found surprising when Apple announced its “Bionic” chips, first the A11 and then the A12, was the inclusion of the Neural Engine, a separate portion of the chip dedicated to executing machine learning tasks in real time.

With a dedicated 8-core design (over and above the CPU and GPU cores), the A12’s Neural Engine can perform an astounding 5 trillion operations per second…on a phone.

Apple is leaning heavily into AI and machine learning. Yes, on the iPhone the Bionic chip helps power silly things like Animoji, but it also powers Face ID and predictive text and natural language processing and palm-rejection features. It powers facial recognition and object classification in the Camera and Photos apps. It’s used to power object detection and Apple’s other tools for augmented reality, and on, and on.

The Neural Engine also accelerates Core ML, Apple’s framework that allows developers to import trained machine learning models and use them within their own applications.

Apple knew that to bring all of these AI and ML features to iOS, they were going to need custom silicon. And so they designed those capabilities right into their chipset.

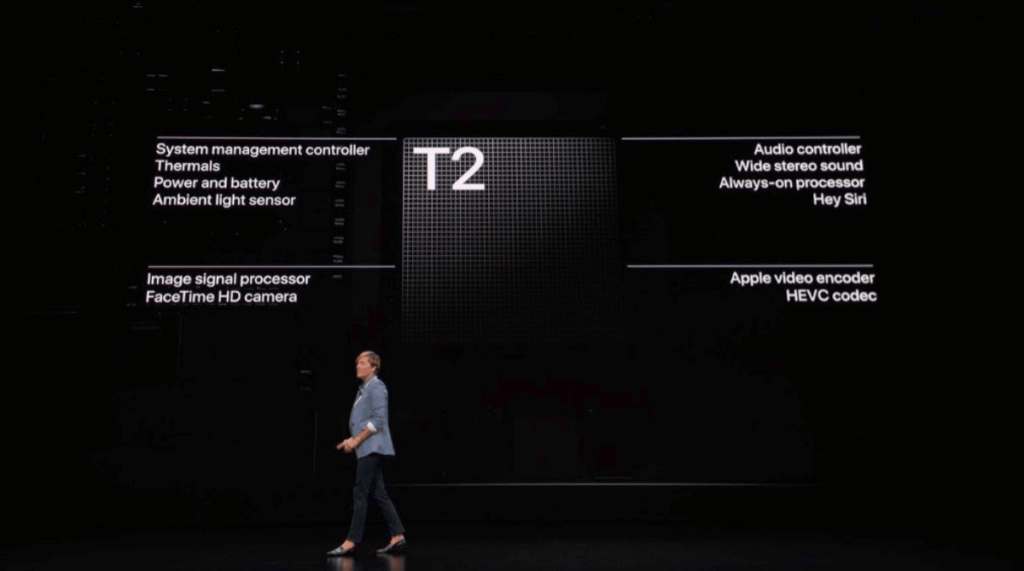

They also added dedicated silicon for video encoding and decoding, more for image signal processing (photos), and more for the Secure Enclave Processor (SEP), which handles and stores sensitive data.

Apple isn’t shy about adding additional capabilities to their processors to help get the job done. Nor would it be wrong to say that it’s one of their primary differentiating features in the marketplace.

Which is great for iOS…but what about the Mac?

Roadmap Two: The High Road

So if we postulate that Apple will make the jump to ARM, one must also ask:

What if Apple decided to build a custom-designed ARM chip not just for the Air and MacBook lines, but for the Pro lines as well? I’m not saying that ARM is ready to take on Xeon quite yet…but could it power a MacBook Pro?

After all, the iOS platform isn’t the only one that needs world-class AI and machine learning support. To quote Ars Technica:

With that in mind, the high road has basically two paths:

- System on a Chip

- Discrete Components

Let’s walk down each.

1. System on a Chip

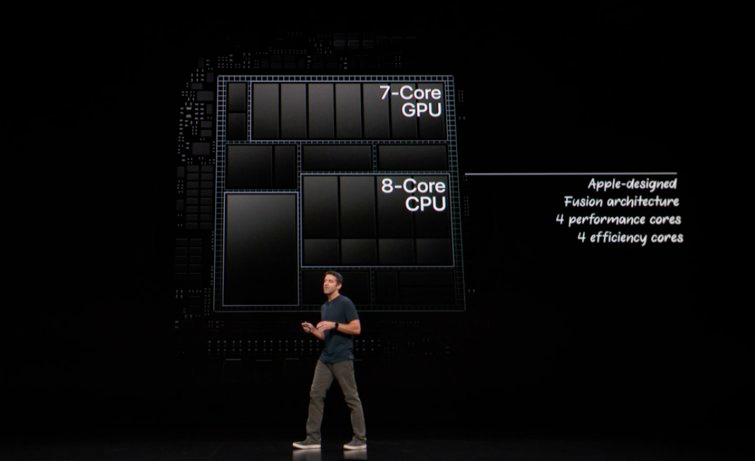

The easiest route is a System on a Chip, or SoC. With this design, Apple basically crams everything (CPU, GPU, and Neural Engine, or NE) onto a single chip.

The question here is whether Apple can cram enough CPU and NE cores onto a single chip. Physical realities have to be observed, not to mention that every core added makes the die larger, which raises the rejection rate, lowers the yield, and drives up costs.

Regardless, it’s all but certain that SoC designs will fill the low and mid-level tiers of the Mac lineup, and it’s entirely possible we could see the 12-core design mentioned above.
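To see why bigger chips cost more, here is a rough sketch using the classic Poisson yield model, in which the fraction of defect-free dies is exp(-D × A) for defect density D and die area A. The model is a textbook simplification assumed here for illustration, and every number in it (defect density, base die area, area per core) is a hypothetical placeholder, not a TSMC or Apple figure.

```python
import math

# Hypothetical inputs for illustration only; not real TSMC or Apple figures.
DEFECT_DENSITY_PER_CM2 = 0.1  # assumed defects per square centimetre
BASE_DIE_AREA_CM2 = 0.8       # assumed area of everything except the extra cores
AREA_PER_CORE_CM2 = 0.05      # assumed area added by each extra CPU core


def die_area(extra_cores: int) -> float:
    """Total die area (cm^2) for a design with the given number of extra cores."""
    return BASE_DIE_AREA_CM2 + extra_cores * AREA_PER_CORE_CM2


def poisson_yield(area_cm2: float, defect_density: float) -> float:
    """Expected fraction of dies with zero defects under the Poisson model."""
    return math.exp(-defect_density * area_cm2)


# Show how yield falls as extra cores grow the die.
for extra in (0, 4, 8, 16):
    area = die_area(extra)
    print(f"{extra:>2} extra cores: {area:.2f} cm^2, "
          f"yield {poisson_yield(area, DEFECT_DENSITY_PER_CM2):.1%}")
```

Whatever the real numbers are, the trend holds: each added core grows the die, shrinks the share of good dies, and also leaves room for fewer dies on each wafer, so the cost per working chip rises on two fronts.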

2. Discrete Components

This one has more potential for professional systems.

Suppose Apple were to pull the GPU and NE cores from a custom ARM chip and use the space to add four more high-performance cores, giving us the aforementioned high-end 12-core ARM processor?

And suppose they use a discrete GPU and then also add another custom chip with, say, 48 NE cores for dedicated AI/ML processing. A chip capable of executing 30 trillion operations per second…in a notebook?

Apple has added its own custom chips to Macs before, namely the T1 and T2 chips, which provide Secure Enclave support for Touch ID fingerprint recognition, plus a host of other features.

Bundling additional NE support into such a chip is not that far-fetched.
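The 30-trillion figure is simple arithmetic on the A12’s published numbers: 5 trillion operations per second from 8 Neural Engine cores works out to 0.625 trillion per core, and 48 such cores would give 30 trillion. The sketch below assumes perfectly linear scaling, which is optimistic; in a real chip, memory bandwidth, clock speeds, and the power budget would all eat into it. The 48-core chip itself is, of course, hypothetical.

```python
# Published A12 Bionic Neural Engine figures.
A12_NE_CORES = 8
A12_NE_OPS_PER_SEC = 5e12  # 5 trillion operations per second


def scaled_ops_per_sec(cores: int) -> float:
    """Throughput for a hypothetical NE chip, assuming perfectly linear scaling."""
    per_core = A12_NE_OPS_PER_SEC / A12_NE_CORES  # 0.625 trillion ops/s per core
    return per_core * cores


# 48 cores is the hypothetical discrete NE chip imagined in the text.
print(f"{scaled_ops_per_sec(48) / 1e12:.0f} trillion ops/s")  # prints: 30 trillion ops/s
```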

An AI/ML System for Professionals?

Such a MacBook Pro would be a “Pro” system indeed, with eight high-performance cores and four high-efficiency cores. If the leaked 12-core processor scores are accurate, we’re looking at 6,912 single-core and 24,240 multi-core on a processor clocked at 3.1GHz.

For reference, Geekbench 4 scores are calibrated against a baseline score of 4,000, which represents the performance of an Intel Core i7-6600U @ 2.60 GHz.

The single-core score for an Intel Core i9-9900K is just 6,216 @ 3.6GHz.
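Because Geekbench 4 scores are relative to that 4,000-point baseline, dividing by 4,000 shows how many times the i7-6600U’s performance a chip delivers, and dividing one score by another compares two chips directly. The sketch below runs those numbers for the scores quoted above; keep in mind the ARM figures come from unconfirmed leaks, and the i9-9900K boosts well above its 3.6GHz base clock during single-core runs.

```python
# Geekbench 4 normalizes scores so an Intel Core i7-6600U @ 2.60 GHz scores 4,000.
GEEKBENCH4_BASELINE = 4000

# Scores quoted in the text; the ARM figures come from unconfirmed leaks.
LEAKED_ARM_SINGLE = 6912
LEAKED_ARM_MULTI = 24240
I9_9900K_SINGLE = 6216


def relative_to_baseline(score: int) -> float:
    """How many times the baseline i7-6600U's performance a score represents."""
    return score / GEEKBENCH4_BASELINE


def ratio(a: int, b: int) -> float:
    """Simple ratio of two scores from the same Geekbench version."""
    return a / b


print(f"Leaked ARM single-core: {relative_to_baseline(LEAKED_ARM_SINGLE):.2f}x baseline")
print(f"i9-9900K single-core:   {relative_to_baseline(I9_9900K_SINGLE):.2f}x baseline")
print(f"ARM vs i9 single-core:  {ratio(LEAKED_ARM_SINGLE, I9_9900K_SINGLE):.2f}x")
print(f"ARM multi vs single:    {ratio(LEAKED_ARM_MULTI, LEAKED_ARM_SINGLE):.2f}x")
```

If the leak holds, that’s roughly an 11% single-core lead over the i9-9900K. The multi-core score comes out to only about 3.5 times the single-core one, a reminder that four of those twelve cores would be efficiency cores and that multi-core results rarely scale linearly with core count.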

And that doesn’t include the performance added by a dedicated GPU, or by a chip dedicated to both machine learning processing and training.

The Neural Engine Processor

Building a discrete NE processor also raises another distinct possibility.

What if Apple were to then throw a dozen or so NE chips onto a board, and then were to plug in two or three or four of those boards into a certain new, high-end customizable Mac computer?

This is indeed entering the realm of pure speculation, but once you start down the dark path…

Core ML / Create ML

Apple went to great lengths to create Core ML and Create ML, development frameworks that let you build real-time, personalized experiences on iOS with on-device machine learning.

Core ML takes advantage of the CPU, GPU, and Neural Engine to provide maximum performance and efficiency, and Create ML allows Mac developers to build, train, and deploy machine learning models right from their Macs.

As of iOS 13 and iPadOS 13, Core ML will let developers personalize their models on the device, much in the same way that Face ID learns how you look with and without glasses or a hat. That learning happens on the device itself, so the data never leaves it, which helps ensure user privacy.

Apple wants developers to integrate AI/ML into their applications, and they’re doing everything they can to support them in their efforts.

Swift For TensorFlow

Another advantage of doing ML on Mac is Swift for TensorFlow (S4TF).

As you’re probably aware, S4TF is a Google effort to find and use a new, high-performance language for deep learning and differentiable programming. After a diligent and thorough search, Google chose Swift.

Also note that S4TF is stewarded by Chris Lattner, a former Apple employee who’s best known as the main author of LLVM and the Swift programming language.

And what better environment for Swift and S4TF development than the platform on which Swift was created and raised, with the toolset designed for its use?

Especially if that platform was specifically hardware-accelerated to support it.

Straws in the Wind

Obviously, much of this, especially the high road roadmap, is speculation and primarily an exercise in reading the straws in the wind. And yet…

Bloomberg reports that Apple has just poached one of ARM’s top chip architects, Mike Filippo. To quote:

Filippo was also formerly a chief architect at Intel and AMD.

This is backed by the news that some officials at Intel told Axios privately that they expect Apple to start the Intel-to-ARM transition as soon as next year.

An earlier Bloomberg report indicates that a plan for ARM-based Macs has already been greenlit by Apple’s leadership and could begin appearing in 2020.

And then there are reports that Microsoft, which has long worked hand-in-hand with Intel, is currently testing prototypes of a Surface Pro with a custom ARM-based Snapdragon chip inside.

If Microsoft isn’t too worried about backwards compatibility with Intel, shouldn’t Apple follow their lead?

And finally, unreleased AMD Radeon GPU signatures have just been discovered in the second macOS Catalina beta.

Such GPU signatures are known precursors of an imminent hardware release. Apple has just announced the new Mac Pro, with its known GPU boards, and just refreshed the MacBook Pro line with new processors, so what other computer with discrete GPUs is coming in the near future?

Pain Points

Yes, a Mac transition from Intel to ARM would involve a few pain points, but only a few, and for most of us, they probably wouldn’t be that painful either.

Apple has, after all, made transitions before: from 68000 to PowerPC, and from PowerPC to Intel…and from Intel to ARM, when you get right down to it, as Apple ported the core of their entire OS to ARM when it created the iPhone.

Apple’s transitioned before, and they’re getting pretty good at it.

And as mentioned above, the world has changed. Today’s software is often downloaded directly from App Store servers to the user’s device. More often than not, that software is slimmed down through App Thinning and recompiled from Bitcode into a format specifically tailored to run efficiently on a given processor.

By and large, the days of the “fat binary” are over.
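App Thinning’s “slicing” step can be pictured as taking a universal build and handing each device only the slice it can execute. The sketch below models that idea; the architecture names x86_64 and arm64 are real, but the dictionary layout, the byte sizes, and the `thin` helper are hypothetical illustrations, not how the App Store actually processes binaries.

```python
from typing import Dict

# Hypothetical model of a "fat" (universal) build: architecture name -> slice size in bytes.
# The sizes are made-up placeholders.
FatBinary = Dict[str, int]

universal_build: FatBinary = {
    "x86_64": 48_000_000,
    "arm64": 41_000_000,
}


def thin(build: FatBinary, device_arch: str) -> FatBinary:
    """Return a build containing only the slice the target device can execute."""
    if device_arch not in build:
        raise ValueError(f"no slice for architecture {device_arch!r}")
    return {device_arch: build[device_arch]}


def total_size(build: FatBinary) -> int:
    """Total download size of all slices in a build."""
    return sum(build.values())


thinned = thin(universal_build, "arm64")
print(f"universal: {total_size(universal_build):,} bytes, "
      f"thinned for arm64: {total_size(thinned):,} bytes")
```

The user’s device never sees the slices it can’t run, which is why a single download no longer has to carry code for every processor.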

Of greater import in dropping support for Intel will be the loss of Boot Camp, along with Windows virtualization through Parallels and VMware. Then again, even that may still be possible. Technology has moved on, and we’ve gotten much, much better at JIT and AOT compilation and processor emulation.

Emulation doesn’t run applications at full speed, that’s true. But studies have shown that in emulation, just a handful of processor instructions cause 80% of the bottlenecks. So what if Apple threw us a bone and added a few extra instructions to its ARM chips to help out?
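Finding that handful is a classic profiling exercise: rank instruction types by the emulation time they consume, then take the most expensive ones until they account for 80% of the total. The sketch below does exactly that; the instruction categories and costs are invented placeholders, not measurements from any real emulator.

```python
from typing import Dict, List


def hot_set(cost_by_instruction: Dict[str, float], share: float = 0.8) -> List[str]:
    """Smallest list of instructions (most expensive first) covering `share` of total cost."""
    total = sum(cost_by_instruction.values())
    chosen: List[str] = []
    running = 0.0
    # Taking the most expensive first guarantees the fewest instructions reach the target.
    for name, cost in sorted(cost_by_instruction.items(), key=lambda kv: kv[1], reverse=True):
        if running >= share * total:
            break
        chosen.append(name)
        running += cost
    return chosen


# Hypothetical profile: emulation time (arbitrary units) per instruction category.
profile = {
    "flag-setting arithmetic": 40.0,
    "memory-ordered loads/stores": 25.0,
    "string ops": 15.0,
    "x87 floating point": 8.0,
    "plain moves": 7.0,
    "other": 5.0,
}

print(hot_set(profile))
```

Those few instructions are exactly where hardware help, such as the extra ARM instructions imagined above, would pay off most.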

Plus, and as has been mentioned again and again, today is not yesterday. Today, if need be, I can remote into an AWS instance at the drop of a hat. In months we’ll be streaming high-end games from remote servers. If one must have access to a dedicated Windows server, then the services are out there.

And if not, well, it’s still perfectly clear that the writing is on the wall.

If losing a few customers devoted to running Windows directly on a Mac is the price Apple must pay to gain control over its processor roadmap and regain control over its own destiny…

Apple will pay it.

Without a second thought.

A Mac for the Future

Apple fully recognizes the need to be at the forefront of the artificial intelligence and machine learning wave, and what better way to do so than with a machine that supports both developers and end users with the most powerful AI and ML tools and capabilities available?

The original Macintosh with its mouse and WYSIWYG interface provided the base that spawned the entire nascent desktop publishing industry.

So it would be fitting indeed for the ARM transition to reboot the Mac into a new era, with new technology specifically designed to support the needs of the future.

It’s no longer a question of if they’re going to do it.

But when. And how.
