On-device machine learning is gaining traction among developers around the world. Many now consider it one of the best ways to bring machine learning-powered tools and applications to the masses. Two things drive this: the ever-increasing on-device computational power that developers can harness, and the stronger data privacy that comes from keeping data on the device.

The rise has been phenomenal and is expected to continue, but mobile companies need to actively innovate and provide new and better tools to developers to build these solutions.

We’ve seen loads of libraries released by companies and organizations around the world to support on-device machine learning, and Apple (as always) leads by a big margin in this field. At WWDC 2018, Apple announced iOS 12 and along with it a lot of new tools for developers to make machine learning-powered solutions for mobile platforms.

And at their most recent event, Apple announced a new series of consumer devices that come with a lot of hardware support for machine learning. In this article, I’ll discuss the new tools offered and how they’ll impact the use of machine learning by mobile developers.

Hardware Improvements

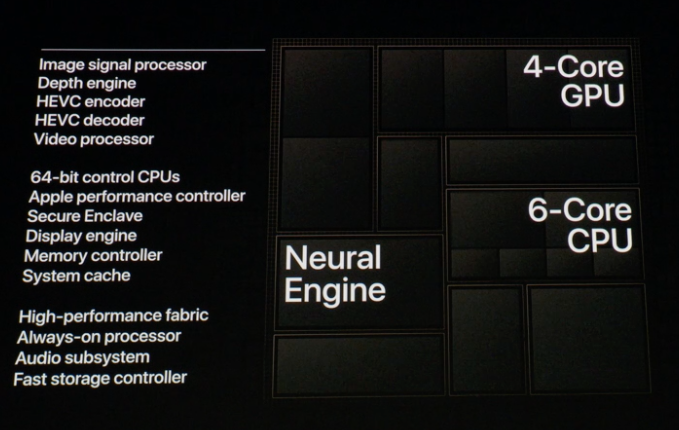

The latest iPhones (Xs, Xs Max, and Xr) come with the A12 Bionic chip, which Apple bills as the most powerful processor ever in a mobile phone. This gives developers a lot of computing power for their machine learning tasks. The A12 Bionic is the first 7nm chip to ship in a mobile phone, and it’s packed with 6.9 billion transistors. It consists of a 6-core CPU, a 4-core GPU, and the Neural Engine.

The CPU has 2 high-performance cores and 4 high-efficiency cores, and all 6 cores can run at once. According to Apple, this makes it up to 15% faster and up to 40% more efficient than the previous chip (A11). The GPU is also up to 50% faster than the A11 GPU, but what really affects on-device machine learning is the new Neural Engine.

The Neural Engine was introduced in the A11 chip, but this year Apple has taken it one step further. The new version is an 8-core dedicated machine learning engine that can power the machine learning apps developers come up with. It also has a smart compute system that can analyze the neural network data and decide whether to run it on the CPU, the GPU, or the Neural Engine.

The A12 Bionic chip can perform up to 5 trillion neural network operations per second, which Apple says makes Core ML up to 9x faster while consuming 1/10th the energy. This new Neural Engine is a breakthrough for on-device machine learning: it makes it easier for developers to bring more sophisticated architectures and algorithms, which require high computational power, to mobile platforms. In other words, it makes on-device machine learning accessible to a lot more people.

The hardware on the new iPhones is without question a big breakthrough in the field of on-device machine learning. The improvements will enable developers to bring machine learning experiences to mobile platforms that were not possible before. But this is only a small part of the picture. The evolution of iOS software is what is really driving these changes.

Software Improvements

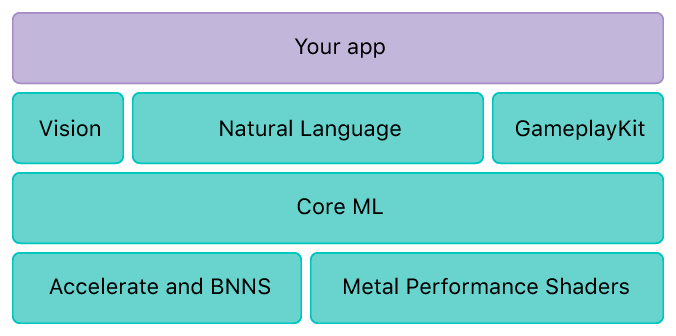

Apple first announced Core ML at WWDC 2017, and it was the first big step in on-device machine learning. It was optimized for on-device performance and also provided better data privacy than cloud-based machine learning solutions. Core ML 2 was announced at this year’s WWDC, along with iOS 12, making it one of the most advanced on-device machine learning libraries available.

Core ML 2 improves inference time by up to 30% with batch prediction and, according to Apple, shrinks models by up to 75% with quantization. In machine learning, weights are where the model stores what it has learned during training, and a model trained for a complex task can easily have tens of millions of weights. Because each weight is typically stored as a 32-bit floating-point number, this increases the size of your app significantly. Quantization addresses this by discretizing the continuous range of weight values, constraining each weight to a small, discrete set of possible values that can be stored in fewer bits.
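To make the idea of quantization concrete, here is a minimal sketch of linear 8-bit quantization in plain Python. It is an illustration of the general technique only, not Core ML’s implementation: the function names `quantize_linear` and `dequantize_linear`, the example weights, and the 10-million-weight model are all made up for this example.

```python
# Illustrative sketch only: plain Python, not Core ML or coremltools code.

def quantize_linear(weights, num_bits=8):
    """Map float weights onto 2**num_bits evenly spaced levels."""
    levels = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    # Guard against a constant weight list (zero range).
    scale = (w_max - w_min) / levels if w_max > w_min else 1.0
    codes = [round((w - w_min) / scale) for w in weights]
    return codes, scale, w_min


def dequantize_linear(codes, scale, w_min):
    """Recover approximate float weights from the integer codes."""
    return [w_min + c * scale for c in codes]


weights = [-0.82, -0.11, 0.0, 0.27, 0.64, 1.18]  # made-up example values
codes, scale, w_min = quantize_linear(weights, num_bits=8)
restored = dequantize_linear(codes, scale, w_min)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Storage for a hypothetical model with 10 million weights.
num_weights = 10_000_000
size_float32_mb = num_weights * 4 / 1_000_000  # 4 bytes per weight
size_int8_mb = num_weights * 1 / 1_000_000     # 1 byte per weight
```

Under these assumptions, moving from 32-bit floats to 8-bit codes cuts weight storage to a quarter, at the cost of a small, bounded rounding error in each weight. Whether that error is acceptable depends on the model, so accuracy should be checked after quantizing.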

Core ML 2 also comes with a new batch prediction API. Previously, if you wanted to get predictions on multiple inputs, you had to loop over the inputs and call separate predictions, but with the batch prediction API, you can use a single line of code to take an array of inputs and produce an array of outputs. Core ML takes care of the rest.
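The difference between looping over inputs and making one batch call can be sketched conceptually in plain Python. `TinyModel` and its methods are hypothetical stand-ins invented for this example; this is not the Core ML API.

```python
# Conceptual sketch in plain Python; TinyModel is hypothetical, not Core ML.

class TinyModel:
    """A toy linear model: y = w . x + b."""

    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def predict(self, features):
        """Predict for a single input."""
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias

    def predict_batch(self, inputs):
        """Predict for a list of inputs in one call."""
        return [self.predict(features) for features in inputs]


model = TinyModel(weights=[0.5, -1.0], bias=2.0)
inputs = [[1.0, 2.0], [4.0, 0.0], [0.0, 3.0]]

# Before: the caller loops and makes one call per input.
looped = []
for features in inputs:
    looped.append(model.predict(features))

# With a batch API: one call, an array in and an array out.
batched = model.predict_batch(inputs)
```

In this toy version the batch method simply loops internally, so the only gain is a simpler call site. A real framework that sees the whole batch up front has the opportunity to schedule the work more efficiently than a caller-side loop can.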

The list of supported operations in Core ML keeps growing to keep up with the rapidly evolving field of machine learning. Previously, if your neural network had a layer not supported by Core ML, you either had to wait or modify your neural network. Apple now supports custom layers for neural network models: if a layer is missing, you can define an implementation class for it, and it will work seamlessly with the rest of the model.

Along with Core ML 2, Create ML was also announced at this year’s WWDC. Create ML is a revolutionary step in the field of on-device machine learning. It provides a simple way to train Core ML models. Create ML is extremely easy to use: developers with no background in machine learning can use it in macOS playgrounds to train models and implement them in their apps without having to learn many of the nuanced complexities of machine learning.

Last year, Apple highlighted NSLinguisticTagger, a long-standing Foundation class that provides APIs for natural language processing (NLP). This year, they launched a brand new framework for NLP called Natural Language. It’s a one-stop shop for NLP on devices across all Apple platforms, with features such as language identification, tokenization, and part-of-speech tagging. To learn more, refer to Apple’s Natural Language documentation.

Apple also updated the Metal library to Metal 2. Metal Performance Shaders (MPS) is a framework built on top of Metal that gives developers the ability to use the GPU to accelerate machine learning tasks. This year Apple has added support for training on both iOS and macOS, so developers can now use MPS to train neural networks on-device.

iOS 12 and the updates to the developer tools are truly amazing, and the best part is that they’re extremely easy to use. These new tools allow developers to build amazing machine learning experiences for mobile and have truly revolutionized on-device machine learning.

The Impact

Apple’s recent hardware and software improvements are big steps forward in on-device machine learning. The high computational power and efficiency offered by the new A12 Bionic chip will allow developers to bring more advanced machine learning tools to mobile platforms without worrying as much about the time or compute resources it takes to complete tasks.

Meanwhile, the new software and developer tools make it easy for developers with no background in machine learning to train and implement models in their apps. These tools might not support the most advanced algorithms and architectures right now, but they give developers a simple way to perform complicated machine learning tasks on-device. These improvements will have a huge impact on the types of apps we see in the App Store, and the number of apps using on-device machine learning should rise sharply, making it an easily accessible technology for both developers and users.
