Smartphones are ideal devices for machine learning because of the sheer number of sensors they have. Combining data from multiple sensors at the same time can allow developers to make more accurate and quicker predictions inside their apps.

Today, almost every smartphone comes with location sensors that provide the user’s geolocation with high accuracy.

By using geo-sensor data and knowledge about points of interest around a given location, we can dramatically improve speed and accuracy for a number of machine learning tasks including landmark detection and object detection.

Beyond these core functions, this data also opens a world of innovative mobile experiences powered by machine learning.

Don’t believe me? Google does.

Turns out, the major players are already using this technology (a little bit). At the past two Google I/O events, Google Lens has been one of the products taking center stage. With the introduction of Google Lens inside Google Maps, locating nearby places has become easier and more intuitive.

Let’s say you’re on vacation and exploring a city you aren’t yet familiar with. You’re hungry, and you see a number of restaurants nearby. You want to know what kinds of food these restaurants serve, what others have said about them, how expensive they are, and so on. You can do that right from Google Maps simply by pointing your camera at a given location. Since Google knows where you are and what you’re looking at, it can show you this information in the blink of an eye.

But Google’s attempt at this is just scratching the surface of what’s possible. Let’s code something better for Sundar to present at the next Google I/O 😜

Under the Hood

Let’s get to know how it all happens under the hood. In this use case, as the user captures the image and provides it to our AI Engine, we break down our analysis into smaller parts.

Before jumping into the execution of our landmark detection algorithms, we lower the number of possible landmarks that can be present inside that image. We’ll start by doing a couple of things:

- At the very beginning of our calculations, we collect the user’s current location from the device’s geo-sensors.

- Context and geo-referenced information about points of interest around the user’s location can be obtained via places data services.

- We determine the user’s focus area in terms of a radius. Using our contextual location data, we’ll know whether the user is standing in a vacant lot or between high-rise buildings. In a vacant area or open space, the focus area radius will be larger than in an area surrounded by buildings.

- We then collect data about all the landmarks within the focus area and the distance between landmarks and the user’s location. The output of this search is limited, as it will filter out the landmarks that lie outside the possible focus area.
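To make the steps above a bit more concrete, here is a minimal Python sketch of the geo-filtering stage. It is only an illustration, not our production engine: the `Landmark` type, the `focus_radius_m` thresholds, and the coordinates are hypothetical, approximate values chosen for the example, and the distance is computed with the standard haversine formula.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Landmark:
    name: str
    lat: float
    lon: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def focus_radius_m(nearby_building_count):
    """Hypothetical heuristic: denser surroundings mean a smaller radius."""
    if nearby_building_count >= 20:
        return 150.0   # between high-rise buildings
    if nearby_building_count >= 5:
        return 400.0   # ordinary city street
    return 1000.0      # vacant lot or open space


def landmarks_in_focus(user_lat, user_lon, landmarks, radius_m):
    """Return (landmark, distance_m) pairs inside the radius, nearest first."""
    hits = []
    for lm in landmarks:
        d = haversine_m(user_lat, user_lon, lm.lat, lm.lon)
        if d <= radius_m:
            hits.append((lm, d))
    return sorted(hits, key=lambda pair: pair[1])


# Approximate, illustrative coordinates around downtown Boston.
points_of_interest = [
    Landmark("Old State House", 42.3588, -71.0575),
    Landmark("Faneuil Hall", 42.3600, -71.0568),
    Landmark("JFK Presidential Library", 42.3163, -71.0342),
]

radius = focus_radius_m(nearby_building_count=8)
for lm, dist in landmarks_in_focus(42.3587, -71.0570, points_of_interest, radius):
    print(f"{lm.name}: {dist:.0f} m away")
```

In a real app, the list of points of interest would come from a places data service, and the building count (or any other density signal) from the same contextual data. The point is simply that landmarks outside the radius never reach the detector.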

Now that we have a refined list of landmarks that can be present inside this image, we can launch landmark detection with this limited set of possibilities. This more contextualized version of landmark detection can thus outperform more straightforward vision-based detection, giving a more accurate and seamless experience for our users.
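As a rough sketch of what "a limited set of possibilities" can mean in code, the snippet below restricts a detector's output to the nearby candidates. It assumes the vision model returns a score per landmark label; the function name and the scores are invented for illustration.

```python
def predict_with_candidates(scores, candidates):
    """Pick the best-scoring label among the nearby candidates only.

    scores: dict of landmark label -> model score (hypothetical model output)
    candidates: labels that survived the location filter
    Returns None if none of the candidates was scored by the model.
    """
    allowed_labels = set(candidates)
    allowed = {label: s for label, s in scores.items() if label in allowed_labels}
    if not allowed:
        return None
    return max(allowed, key=allowed.get)


# Made-up scores: visually, the model slightly prefers a look-alike building
# in another city.
model_scores = {
    "Independence Hall": 0.35,
    "Old State House": 0.31,
    "Faneuil Hall": 0.20,
}
nearby = ["Old State House", "Faneuil Hall"]

vision_only = max(model_scores, key=model_scores.get)
with_location = predict_with_candidates(model_scores, nearby)
print(vision_only, "->", with_location)
```

Here the vision-only guess is a landmark hundreds of miles away, while the location-aware version can only choose among places the user could actually be looking at. A smaller candidate set also means fewer comparisons, which is where the speed-up comes from.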

Location data can serve as input for the model to improve its accuracy in detecting landmarks, while also serving as valuable information for the user about what to do next.

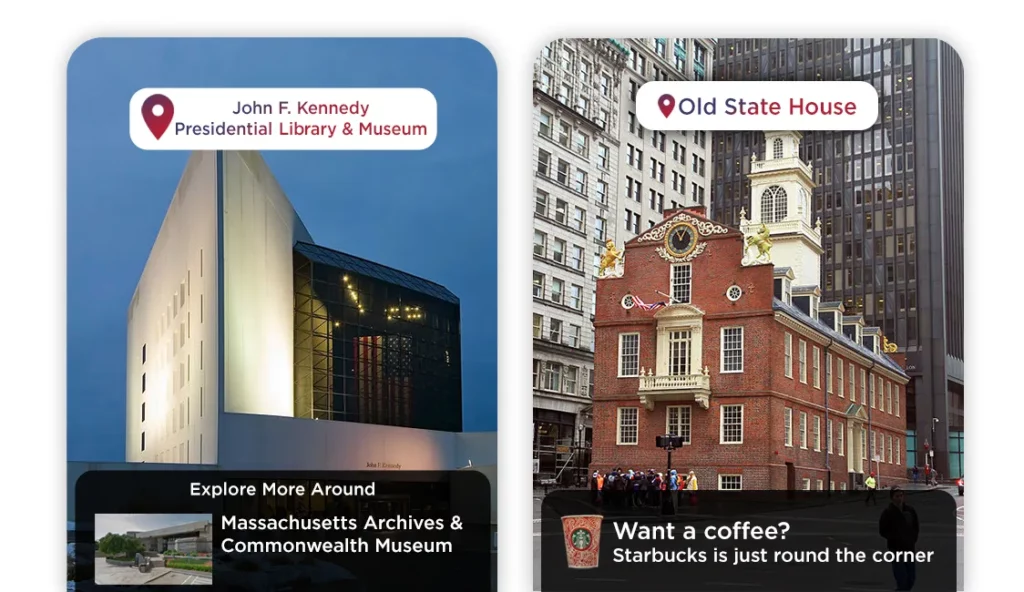

For example, say you’ve spent the first part of a day in Boston, MA, at the John F. Kennedy Presidential Library and Museum. Turns out, there are a lot more amazing things to discover near the Library.

Similarly, we can see that there’s a Starbucks just down the street from the Old State House [not to mention the Fritz team, whose offices are close by]. With this kind of location-based connectivity, the experience of being a tourist or city-dweller can become more convenient, fun, and interesting.

Let’s Build More — AI-Powered Food Review App

Given what we’ve discussed above, let’s take this one step further. Imagine you’re building an AI-powered restaurant review app.

You want the users to be able to easily review each food item they purchase. So to make things easier for them, you use a machine learning model to automatically identify and classify food objects in each image.

Unfortunately, your machine learning model keeps confusing your coffee cup with a glass of wine. The model needs additional information to make a better prediction.

Contextualized location data can help solve this problem: if the model knows the user is at a coffee shop, it can rule out unlikely predictions such as wine, pizza, or noodles.
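One simple way to fold venue context into a classifier, sketched below, is to multiply the model's class probabilities by a per-venue prior and renormalize. This is just one possible approach under stated assumptions: the function name, the prior values, and the `floor` parameter are all hypothetical.

```python
def reweight_by_venue(probs, venue_prior, floor=0.01):
    """Scale class probabilities by a venue prior, then renormalize.

    probs: dict of label -> probability from the image model
    venue_prior: dict of label -> how plausible that item is at this venue
    floor: small weight for labels the prior does not mention, so they are
           heavily down-weighted rather than removed outright
    """
    weighted = {
        label: p * venue_prior.get(label, floor) for label, p in probs.items()
    }
    total = sum(weighted.values())
    if total == 0:
        return dict(probs)  # nothing plausible left; fall back to the model
    return {label: w / total for label, w in weighted.items()}


# Made-up numbers: the image model narrowly prefers "wine" over "coffee".
image_probs = {"coffee": 0.40, "wine": 0.45, "pizza": 0.15}
coffee_shop_prior = {"coffee": 0.9, "wine": 0.05}

adjusted = reweight_by_venue(image_probs, coffee_shop_prior)
best = max(adjusted, key=adjusted.get)
print(best, adjusted)
```

With these example numbers, "coffee" overtakes "wine" once the coffee shop context is applied. Using a small floor instead of zero keeps the system from being completely wrong when the places data is out of date, for instance when a café starts serving wine in the evening.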

Let’s Power Our Model

The use of contextualized location data can make the above predictions faster and more accurate, but there are many more possibilities here.

As an example, let’s imagine our user visited the Old State House and saw, via the landmark-based “nearby attractions” feature, the Starbucks just down the street. Say our user takes the following photo of their coffee:

Now that we know the image has been taken at Starbucks (and this Starbucks in particular), there are lots of additional features we can add to the user experience.

For example, we can add stickers and tags to our image, show available discounts, show what other users liked about Starbucks, showcase current specials and seasonal products, offer home delivery and pre-ordering options, and much more.

How Can Peekaboo Guru Help You with This?

High-quality image data is easy to get these days, but geographic information can still be a challenge. At Peekaboo Guru, we supply companies with this valuable data.

Consider our food review app. We know a lot about restaurants: their locations, cuisine, reviews, menus, specialties, deals, discounts, delivery areas, and much more. This enables companies to give their users a complete experience.

We also developed Guru Eyes, a Google Lens-style geo-located augmented reality feature, well before Google did 😉. It is also powered by our places data repository.

We have a large repository of places data used by banks, telcos, mobile wallets, and several other brands across a number of industries to assist in their decision making.

For instance, a number of large banks are using this data to find the optimal location for their next ATM. Telecom companies are analyzing signal strength and service upgrade areas by developing context around customer locations.

You can reach out to us via [email protected] — we are always looking to solve huge problems businesses have, using modern AI techniques along with our contextual location intelligence engines.

Credits

Special thanks to Lucas Paletta and Katrin Amlacher for their amazing work on this subject. You can read about their work in detail, and the success they achieved by implementing this technique, in the following papers:

Geo-Services and Computer Vision for Object Awareness in Mobile System Applications. [accessed Nov 22 2018].

An Attentive Machine Interface Using Geo-Contextual Awareness for Mobile Vision Tasks. [accessed Nov 22 2018].
