Latest news about AI and ML such as ChatGPT vs Google Bard

We use loss functions to calculate how well a given algorithm fits the data it’s trained on. Loss calculation is based on the difference between predicted and actual values. If the predicted values are far from the actual values, the loss function will produce a very large number.

Keras is a library for creating neural networks. It’s open source and written in Python. Keras does not support low-level computation, but it runs on top of libraries like Theano and TensorFlow.

In this tutorial, we’ll be using TensorFlow as Keras backend. Back-end is a Keras library used for performing computations like tensor products, convolutions, and other similar activities.

Time Complexity of Code with Python Example

Space Complexity of Code with Python Example

Commonly-used loss functions in keras

As aforementioned, we can create a custom loss function of our own; but before that, it’s good to talk about existing, ready-made loss functions available in Keras. Below are the two most used commonly-used ones.

LOGCOSH

Logcosh is the Logarithm of the hyperbolic cosine of the prediction error. logcosh in general is similar to the mean squared error described below , but it is not strongly affected by the incorrect predictions.

MSE

MSE or Mean squared error is similar to logcosh as described above however, MSE measures the average of the squares of the errors. This is calculated as the average squared difference between the predicted values and the actual value.

MAE

Mean absolute error or MAE is a variant of MSE and it is the measure of the difference between two continuous variables, generally denoted by say x1 and y1. The mean absolute error is an average of the absolute errors error = y1-x1, where y1 is the predicted value and x1 is the actual value.

What do we mean by a custom loss function?

The formula for calculating the loss is defined differently for different loss functions. In certain cases, we may need to use a loss calculation formula that isn’t provided on the fly by Keras. In that case, we may consider defining and using our own loss function. This kind of user-defined loss function is called a custom loss function.

A custom loss function in Keras can improve a machine learning model’s performance in the ways we want and can be very useful for solving specific problems more efficiently. For example, imagine we’re building a model for stock portfolio optimization. In this case, it will be helpful to design a custom loss function that implements a large penalty for predicting price movements in the wrong direction.

We can create a custom loss function in Keras by writing a function that returns a scalar and takes two arguments: namely, the true value and predicted value. Then we pass the custom loss function to model.compile as a parameter like we we would with any other loss function.

Let us Implement it !!

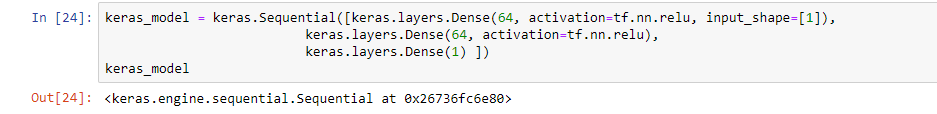

Now let’s implement a custom loss function for our Keras model. As a first step, we need to define our Keras model. Our model instance name is keras_model, and we’re using Keras’s sequential() function to create the model.

We’ve included three layers, all dense layers with shape 64, 64, and 1. We have an input shape of 1, and we’re using a ReLU activation function (rectified linear unit).

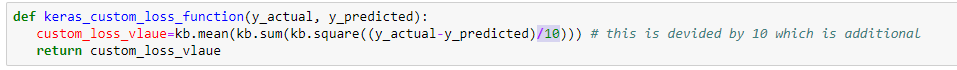

Once the model is defined, we need to define our custom loss function. This is implemented as shown below. We’re passing the actual and predicted values to this function.

Note that we’re dividing the difference of the actual and predicted value by 10—this is the custom part of our loss function. In the default loss function, the difference in the values of actual and predicted is not divided by 10.

Remember— it all depends upon your particular use case as to what kind of custom loss function you’ll need to write. Note that here we’re dividing by 10, which means we want to lower the magnitude of our loss during the calculation.

In the default case of MSE, the magnitude of the loss will be 10 times this custom implementation. So we can use this kind of custom loss function when our loss value is becoming very large and calculations are becoming expensive.

Here, we’re returning a scalar custom loss value from this function.

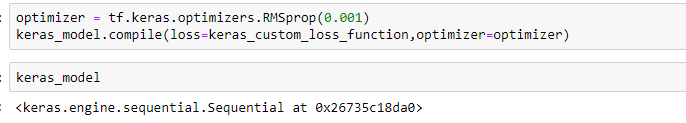

To use our custom loss function further, we need to define our optimizer. We are going to use the RMSProp optimizer here. RMSprop stands for Root Mean Square Propagation. The RMSprop optimizer is similar to gradient descent with momentum. The commonly-used optimizers are named as rmsprop, Adam, and sgd.

We need to pass the custom loss function as well as the optimizer to the compile method, called on the model instance. Then we print the model to make sure there is no error in compiling.

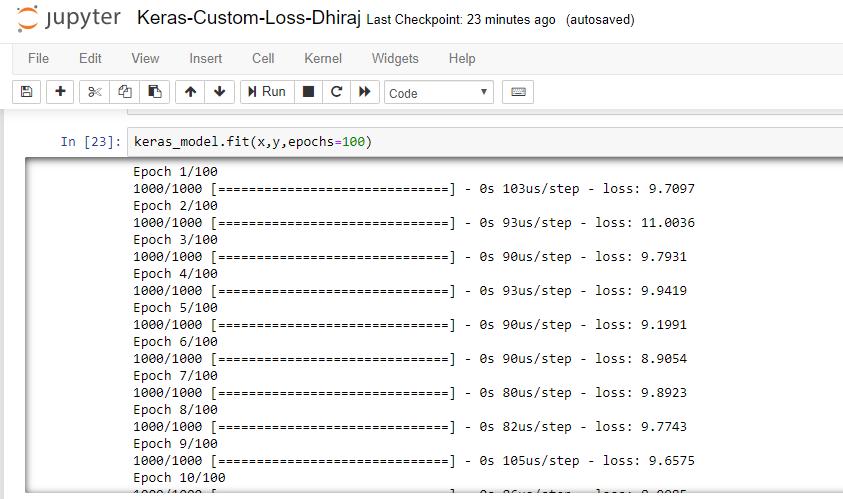

Now it’s time to train the model and see that it’s working without any error. For this, we use the fit method on the model and pass the independent variable x and dependent variable y, along with epochs = 100.

The aim here is to see that model is training without any error and that the loss is gradually reducing as the epoch count increases. You can check out the model training result in the image below.

End notes

In this article, we learned what a custom loss function is and how to define one in a Keras model. Then, we compiled the Keras model using custom loss function. Finally, we were able to successfully train the model, implementing the custom loss function.

Thanks for reading!

Comments 0 Responses