What is Edge AI, and why does it matter?

Traditionally, AI workloads have needed a large amount of parallel processing power. For a long time, that meant AI-based services ran on servers and required an Internet connection. But applications that must act in real time need on-device computation, and this is where edge AI enters the picture.

You can use GPU-based devices for this, but they make the solution costly and bring the added problems of bulk and high energy consumption. Even so, edge AI is increasingly becoming an essential part of the ongoing deep learning revolution, in both research and innovation.

With ever-more powerful handheld devices in the hands and pockets of billions around the world, we’re steadily seeing increased demand for on-device AI computations. As such, the development of mobile processors has become more AI-focused, with dedicated hardware for machine learning.

Quick overview of current hardware (Intel, etc)

Though this is the first Edge TPU, we’ve seen similar AI-dedicated hardware before, such as:

- Intel’s Myriad™ VPU, found in the Neural Compute Stick and in Google’s Vision Kit.

- CUDA-based NVIDIA Jetson TX2

Coral Beta

The TPU—or Tensor Processing Unit—is mainly used by Google data centers. For general users, it’s available on the Google Cloud Platform (GCP), and to try it free you can use Google Colab.

Google first showcased their Edge TPU in a demo at CES 2019 (and again at this year’s TensorFlow Dev Summit). And in March, they released Coral Beta.

The Beta release consists of a Dev Board and USB Accelerator, and previews of a PCI-E Accelerator and System-on-Module (SOM) for production purposes.

What can you do with the Edge TPU?

With the Edge TPU, you can also train models on-device. For now, though, only classification models can be retrained on the device, using transfer learning based on the weight imprinting technique proposed in Low-Shot Learning with Imprinted Weights. This technique opens up many possibilities for real-time systems. Furthermore, the Edge TPU is the fastest inference device of its kind.
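The weight imprinting idea mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration of the technique as I understand it from the paper, not the Edge TPU library’s implementation: the weight vector for a new class is the re-normalized average of the L2-normalized embeddings of its few examples, and a query is assigned to the class with the highest cosine similarity. The function names and the toy three-dimensional embeddings are assumptions made up for this example.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def imprint_weight(embeddings):
    """Build the weight vector for a new class from a few example embeddings.

    Each embedding is normalized, the normalized vectors are averaged,
    and the average is normalized again.
    """
    normalized = [l2_normalize(e) for e in embeddings]
    dim = len(normalized[0])
    mean = [sum(v[i] for v in normalized) / len(normalized) for i in range(dim)]
    return l2_normalize(mean)


def classify(embedding, class_weights):
    """Return the best class name and all cosine-similarity scores."""
    query = l2_normalize(embedding)
    scores = {
        name: sum(q * w for q, w in zip(query, weight))
        for name, weight in class_weights.items()
    }
    return max(scores, key=scores.get), scores


# Toy 3-dimensional embeddings (made-up values, for illustration only).
weights = {
    "cat": imprint_weight([[0.9, 0.1, 0.0], [1.0, 0.2, 0.1]]),
    "dog": imprint_weight([[0.1, 0.9, 0.2], [0.0, 1.0, 0.1]]),
}
label, scores = classify([0.8, 0.3, 0.0], weights)
print(label)
```

Because adding a class only requires computing one new weight vector from a handful of embeddings, no gradient descent is needed, which is what makes this kind of retraining plausible on a small device.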

USB Accelerator:

The Edge TPU accelerator is like any other USB device—just with a bit more power. Let’s open it up and see what it can do.

Typical unboxing:

Here’s what you’ll find in the box:

- Getting Started Guide

- USB Accelerator

- USB Type C cable

Getting started

The getting started guide helps with installation, which is very fast and easy. Everything you’ll need—including model files—comes with the installation package. No TensorFlow or OpenCV library dependencies necessary.

Demos

The Coral Edge TPU API documentation includes a helpful overview and demos for both image classification and object detection.

The Edge TPU API

Before we work through them, there are a few things to mention about the Edge TPU API:

- The edgetpu Python module is needed to run TensorFlow Lite models on the Edge TPU. It’s a high-level API that exposes a small set of simple APIs for running model inference.

- These APIs are pre-installed on the Dev Board, but if you’re using the USB Accelerator, you’ll need to download the API. Check out this setup guide for more info.

- There are three key APIs: ClassificationEngine for image classification and DetectionEngine for object detection, both for performing inference, plus ImprintingEngine for transfer learning.

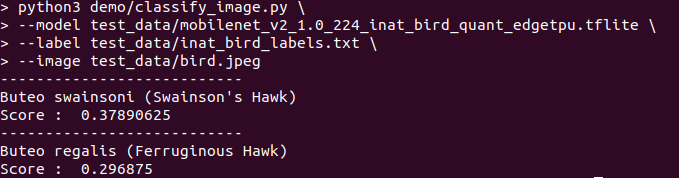

Image classification:

Implementation of these demos is very simple. I’ve chosen to input the picture below using the ClassificationEngine API:

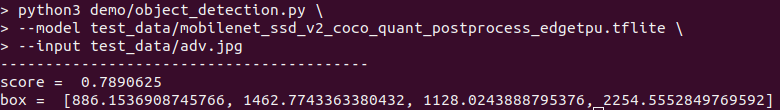

Object Detection:

Similarly, by calling the DetectionEngine API, we can easily detect objects in the input image and draw bounding boxes around them.

I raised the detection threshold in the default demo from 0.05 to 0.5, since the lower value was producing false positives. I also passed width=5 when drawing the rectangle to thicken the box outline.
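To see why the threshold matters, here is a small sketch of score-based filtering in plain Python. It does not call the Edge TPU library; the `Detection` tuple, the `filter_by_score` helper, and the candidate values are hypothetical stand-ins for whatever the detector returns.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical stand-in for one detection result."""
    label_id: int
    score: float
    box: Tuple[float, float, float, float]  # x1, y1, x2, y2 in pixels


def filter_by_score(candidates: List[Detection], threshold: float) -> List[Detection]:
    """Keep detections scoring at or above threshold, best first."""
    kept = [c for c in candidates if c.score >= threshold]
    return sorted(kept, key=lambda c: c.score, reverse=True)


# Made-up candidates, for illustration only.
candidates = [
    Detection(0, 0.91, (40.0, 30.0, 210.0, 260.0)),
    Detection(0, 0.12, (5.0, 5.0, 60.0, 80.0)),
    Detection(1, 0.07, (150.0, 10.0, 300.0, 90.0)),
]

loose = filter_by_score(candidates, threshold=0.05)
strict = filter_by_score(candidates, threshold=0.5)
print(len(loose), len(strict))
```

With these made-up scores, a threshold of 0.05 keeps all three candidates, while 0.5 keeps only the confident one. A higher threshold trades a few missed objects for fewer spurious boxes.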

As Coral is still in beta, the API docs don’t have a lot of detail yet. But it was easy enough to use for these types of demos.

Notes about Demo Walkthrough:

All of the code, models, and label files used in these demos ship with the library in the installation package. For now, the library can perform classification or detection, depending on the model and label file it’s given.

For classification, the demo returns the top two class predictions, each with a confidence score. For object detection, it returns a confidence score and bounding box coordinates. If you also supply the class label file, the output includes the class name.
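The step from raw results to named top-k predictions can be sketched as follows. This is a stand-alone illustration under two assumptions: that the label file holds one `id name` pair per line, and that results arrive as `(class_id, score)` pairs. The helper names and sample values are made up for the example.

```python
def parse_labels(text):
    """Parse 'id name' lines into a dict mapping class ID to class name."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        class_id, name = line.split(maxsplit=1)
        labels[int(class_id)] = name.strip()
    return labels


def top_k(results, labels, k=2):
    """Return the k highest-scoring (name, score) pairs.

    Falls back to the numeric ID as a string when no label is known.
    """
    ranked = sorted(results, key=lambda r: r[1], reverse=True)[:k]
    return [(labels.get(class_id, str(class_id)), score) for class_id, score in ranked]


# Made-up label text and scores, for illustration only.
label_text = """0  background
1  macaw
2  toucan
"""
results = [(2, 0.08), (1, 0.77), (0, 0.01)]

labels = parse_labels(label_text)
print(top_k(results, labels, k=2))
# → [('macaw', 0.77), ('toucan', 0.08)]
```

Without a label file, the same code would simply report the numeric class IDs, which matches the behavior described above.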

Limited performance on Raspberry Pi

It’s unfortunate that the hobbyist-favorite Raspberry Pi can’t fully utilize the USB Accelerator’s power and speed. The accelerator is designed for a USB 3 port, and current Raspberry Pi devices don’t have USB 3 or USB-C. It will still work on a Raspberry Pi, but only at USB 2 speed.
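A rough back-of-the-envelope calculation shows the scale of the gap. The sketch below uses the nominal signaling rates of USB 2.0 (480 Mbit/s) and USB 3.0 (5 Gbit/s) and assumes a single 224 x 224 RGB input image. Real throughput is lower on both buses because of protocol overhead, and this ignores any other data that has to cross the link, so treat the numbers as best-case bounds rather than measurements.

```python
USB2_BITS_PER_SEC = 480e6  # USB 2.0 High Speed nominal signaling rate
USB3_BITS_PER_SEC = 5e9    # USB 3.0 SuperSpeed nominal signaling rate


def transfer_ms(num_bytes, bits_per_sec):
    """Best-case time in milliseconds to move num_bytes over a link."""
    return num_bytes * 8 / bits_per_sec * 1000


# Assumed input: one 224 x 224 RGB image, one byte per channel.
image_bytes = 224 * 224 * 3

usb2_ms = transfer_ms(image_bytes, USB2_BITS_PER_SEC)
usb3_ms = transfer_ms(image_bytes, USB3_BITS_PER_SEC)
print(f"USB 2: {usb2_ms:.2f} ms, USB 3: {usb3_ms:.2f} ms")
```

Under these assumptions, moving one image takes roughly 2.5 ms over USB 2 versus about 0.24 ms over USB 3, a difference of about ten times per frame before any inference happens.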

Currently, the USB Accelerator only runs on Debian Linux, but my guess is that, soon enough, people will find hack-y ways to support other operating systems.

Let’s see what other products Coral has to offer with the power of the TPU.

Dev Board

Raspberry Pi is typically the most popular choice of dev board, but this time Google went with a board based on the NXP i.MX 8M SoC (quad-core Cortex-A53, plus Cortex-M4F).

You can read more about the Dev Board here:

But to experiment and focus specifically on the Edge TPU, I ordered the USB Accelerator instead.

What’s Next?

Say you’ve prototyped something great using the Dev Board or USB Accelerator—and now it’s time to take it to large-scale production using the same code. What do you do then?

If we look at the product lineup, it seems Google has already thought this far ahead, with enterprise support through the following modules, which are currently labeled “Coming soon.”

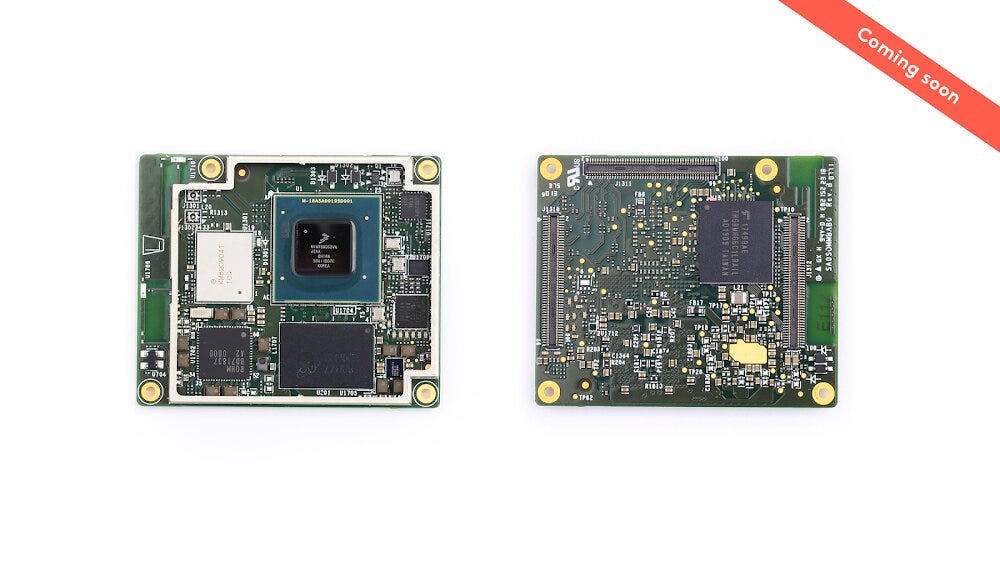

System-on-Module (SOM)

A fully integrated system (CPU, GPU, Edge TPU, Wi-Fi, Bluetooth, and Secure Element) in a 40 mm x 40 mm pluggable module.

This is for large-scale production. Manufacturers can produce their own board with their preferred I/O, following the guidelines for this module. The Dev Board itself contains this module, and it’s detachable.

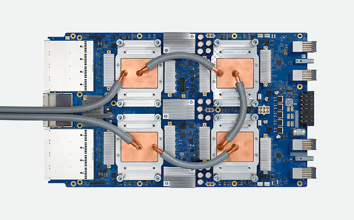

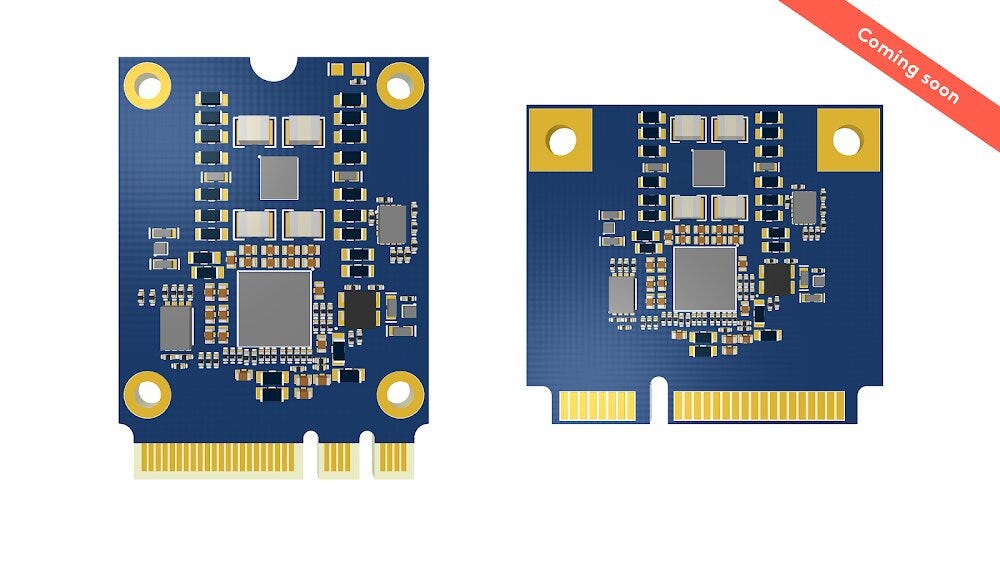

PCI-E Accelerator

There is very little info about the PCI-E Accelerator so far. As its name suggests, it will be a module with a PCI-E (Peripheral Component Interconnect Express) interface, and there will be two variants. It may turn out to be similar to the USB Accelerator, with PCI-E in place of USB.

The idea here is that you’d be able to use it in any laptop, desktop computer, or single-board computer that has a PCI-E slot.

Seeing this wide variety of modules on the way, we can expect some enterprise-level projects are just around the corner. Coral thinks so, too, with the following copy featured on their website:

TensorFlow and Coral Project

It’s hard to imagine Google products without TensorFlow. Currently, the Edge TPU only supports custom TensorFlow Lite models. Also, the stable version of TensorFlow Lite was just released.

In practice, this means you have to convert your tflite models to tflite-tpu using a web compiler. But don’t worry if you’re a PyTorch fan or prefer another framework: you can try converting your model to TensorFlow using ONNX.

Looking Ahead

Despite its lack of full support for Raspberry Pi and limited documentation in the Beta release, I’m very optimistic about the Coral project. We can’t be sure how exactly this tech will evolve, but there are certainly high hopes for more power, less energy consumption, greater cost efficiency, and further innovation.

For a future post, I’ll use a project-based approach to compare the performance of the Intel® Neural Compute Stick and Coral’s Edge TPU.
