The TensorFlow.js library lets you build AI-powered apps that run machine learning models directly in the browser.

This tutorial is the latest in my series on using TensorFlow.js for machine learning and putting those models to work in React apps. Here, we’ll learn about another TensorFlow.js model package, body-pix, which handles body segmentation.

Body segmentation is a deep learning task that labels each pixel of a person in an image with the body part it belongs to, such as the torso, lower arm, upper arm, thigh, or lower leg. The body-pix model provided by TensorFlow.js can distinguish 24 different parts of the human body (part IDs 0 through 23).

In this tutorial, we will learn how to segment the body parts using the webcam in real-time and then draw colored masks based on body parts in the canvas.

The idea is to create a React app with a webcam stream that feeds the video data to the body-pix TF.js model, which segments out body parts, allowing us to draw colored masks on top of those body parts.

What we will cover in this tutorial

- Creating a canvas to stream video out from the webcam.

- Detecting and segmenting body parts using a pre-trained body-pix model from TensorFlow.js.

- Drawing colored segment masks around the body parts on the JavaScript canvas in real-time using the webcam feed.

Let’s get started!

Creating a React App

First, we’re going to create a new React app. For that, we typically run Create React App (npx create-react-app followed by a project name of our choice) in the desired local directory.

Once the project is set up, we can start the development server from inside the project directory with npm start (or yarn start).

After a successful build, a browser window opens showing the default React starter page.

Installing the required packages

Now, we need to install the required dependencies into our project. The dependencies we need to install in our project are:

- @tensorflow/tfjs: The core TensorFlow package based on JavaScript.

- @tensorflow-models/body-pix: This package delivers the pre-trained body-pix TensorFlow.js model for segmenting out body parts. It also offers utility functions to draw a colored mask over the segmented body parts.

- react-webcam: This library component enables access to the webcam in a React project.

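We can use either npm or yarn to install the dependencies from the project terminal: npm install @tensorflow/tfjs @tensorflow-models/body-pix react-webcam, or yarn add @tensorflow/tfjs @tensorflow-models/body-pix react-webcam.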

Now we need to import all the installed dependencies into our App.js file, as directed in the code snippet below:

Set up webcam and canvas

Here, we’re going to initialize the webcam and canvas to view the webcam stream in the web display. For that, we’re going to make use of the Webcam component that we installed and imported earlier. First, we need to create reference variables for the webcam as well as a canvas using the useRef hook, as shown in the code snippet below:

Next, we need to render the Webcam component in the JSX returned by our component, passing it the webcam ref as a prop. We also need to add a canvas element just below the Webcam component and attach the canvas ref to it. The canvas lets us draw whatever we want to display on top of the webcam feed.

Here, the styles applied for both Webcam and canvas components are the same since we’re going to draw the colored mask on a canvas that’s placed on top of the webcam stream.
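The original snippet is not reproduced here, so the following is only a minimal sketch of the shared-style idea. The overlayStyle name and every value in it are assumptions chosen for illustration, not the styles used in the original project.

```javascript
// Hypothetical shared style object: the name and all values are illustrative
// assumptions, not taken from the original tutorial.
const overlayStyle = {
  position: "absolute",
  marginLeft: "auto",
  marginRight: "auto",
  left: 0,
  right: 0,
  textAlign: "center",
  zIndex: 9,
  width: 640,
  height: 480,
};

// Both elements receive the very same object, so the canvas is positioned
// exactly on top of the webcam video.
const webcamProps = { style: overlayStyle };
const canvasProps = { style: overlayStyle };
```

In JSX, the same object would be passed as the style prop of both the Webcam component and the canvas element, alongside their respective refs.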

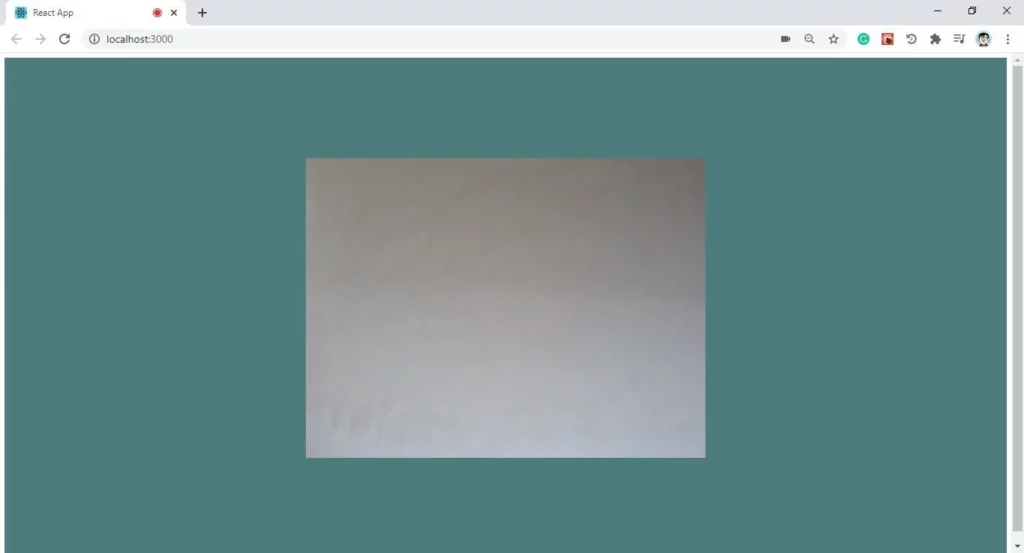

Hence, we’ll now get the webcam stream in our browser window:

Loading the body-pix model

In this step, we’re going to create a function called loadBodyPixModel, which initializes the body-pix model using the load method from the bodyPix module. The loaded model, a neural network, is stored in the network constant. The overall code for this function is provided in the snippet below:
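Since the original snippet is missing here, this is a minimal sketch of what loadBodyPixModel could look like. The bodyPixModule parameter is an assumption made so the sketch runs on its own; in the app it would simply be the bodyPix module imported from @tensorflow-models/body-pix.

```javascript
// `bodyPixModule` stands in for the imported @tensorflow-models/body-pix module.
// Passing it as a parameter is an assumption made for this sketch only.
async function loadBodyPixModel(bodyPixModule) {
  // load() resolves to the model instance; called without arguments,
  // it falls back to the library's default configuration.
  const network = await bodyPixModule.load();
  console.log("body-pix model loaded");
  return network;
}
```

The load method also accepts an optional configuration object (for example, architecture and outputStride) if the defaults are too slow or too coarse for a given device.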

Now, to trigger this function, we need to call it inside the main functional component:

Detecting body segments

Here, we’re going to create a function called detectBodySegments, which will handle the body part segmentation as well as the colored mask drawing. First, we need to check to see if the webcam is up and running and receiving the video data stream using the following code:
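The check itself is not shown above, so here is a minimal sketch of one common way to write it. The helper name isWebcamReady is hypothetical; the sketch assumes the ref points at a react-webcam instance, which exposes the underlying video element as its video property.

```javascript
// HTMLMediaElement.HAVE_ENOUGH_DATA has the value 4: enough data has been
// buffered for the video to start playing.
const HAVE_ENOUGH_DATA = 4;

// Hypothetical helper: returns true only when the ref is attached and the
// underlying video element is receiving frames.
function isWebcamReady(webcamReference) {
  return webcamReference.current?.video?.readyState === HAVE_ENOUGH_DATA;
}
```

The optional chaining keeps the check safe on the first renders, when the ref is still null.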

Then, we need to get the video properties along with dimensions using the webcamReference that we defined before:

Next, we need to set the dimensions of the Webcam as well as the canvas to be the same based on the height and width of the video stream, using the code from the following snippet:
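The two steps above (reading the video properties and matching the dimensions) can be sketched as follows. The helper name syncDimensions is hypothetical, and the sketch assumes both refs are already attached.

```javascript
// Hypothetical helper: copies the intrinsic size of the video stream onto
// both the video element and the canvas so the overlay lines up with the feed.
function syncDimensions(webcamReference, canvasReference) {
  const video = webcamReference.current.video;
  const { videoWidth, videoHeight } = video;

  // Force the displayed video to the stream's own size.
  video.width = videoWidth;
  video.height = videoHeight;

  // Give the canvas the same pixel dimensions.
  canvasReference.current.width = videoWidth;
  canvasReference.current.height = videoHeight;

  return video;
}
```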

We now start the segmentation process using the segmentPersonParts method of the network model, which detectBodySegments receives as a parameter. The segmentPersonParts method takes the video element as its input, as shown in the code snippet below:
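As the original snippet is missing, here is a minimal sketch of the segmentation call. The wrapper name segmentBodyParts is hypothetical; segmentPersonParts is the method named above, called on the loaded model.

```javascript
// Hypothetical wrapper around the segmentation call.
async function segmentBodyParts(network, video) {
  // segmentPersonParts returns a promise, so we await the result.
  const segmentation = await network.segmentPersonParts(video);
  // Logging the result makes it easy to inspect its shape in the console.
  console.log(segmentation);
  return segmentation;
}
```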

Next, we need to call the detectBodySegments function inside loadBodyPixModel from a setInterval callback, passing the network model as a parameter. This makes detectBodySegments run every 100 milliseconds. The implementation is provided below:
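A minimal sketch of the polling loop follows. The startDetectionLoop name and the schedule parameter are assumptions added so the timing can be swapped out or tested; in the app, the same setInterval call would simply sit inside loadBodyPixModel.

```javascript
// Hypothetical helper: runs the detection function at a fixed interval.
// `schedule` defaults to the global setInterval.
function startDetectionLoop(network, detectBodySegments, intervalMs = 100, schedule = setInterval) {
  // The returned id can later be passed to clearInterval to stop the loop.
  return schedule(() => {
    detectBodySegments(network);
  }, intervalMs);
}
```

Keeping the returned id around is useful for cleanup, for example when the component unmounts. Note that if a single detection takes longer than the interval, calls may overlap.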

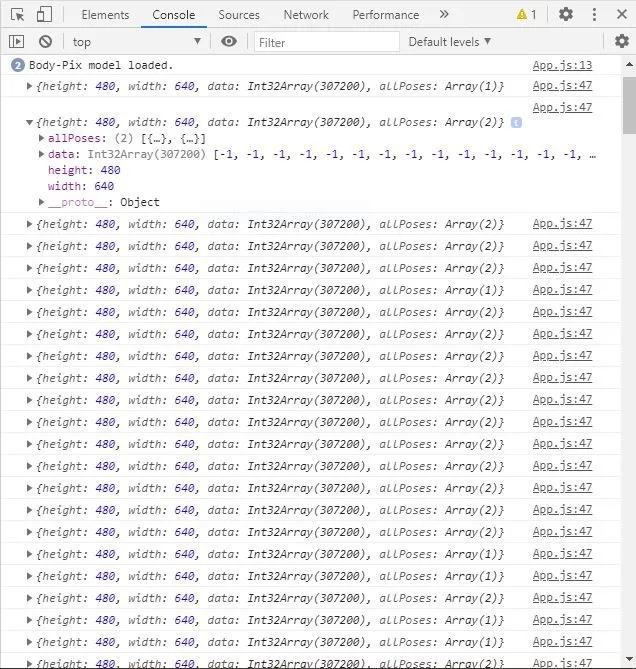

If we run the project, our body segmentation data from the webcam will get logged in the browser console:

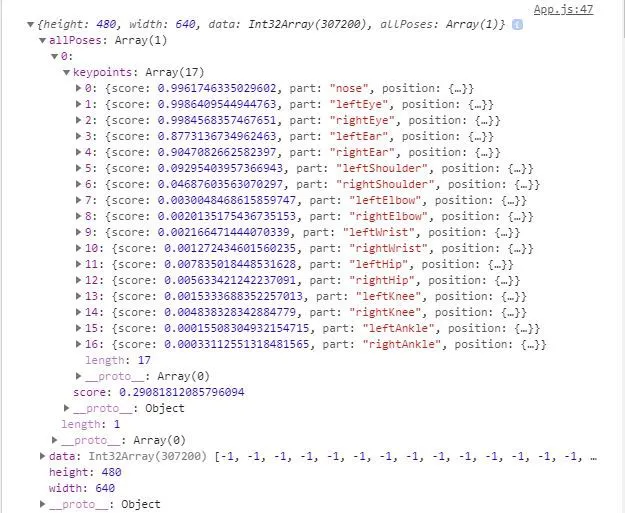

Each result from the segmentation process holds the body part data along with the key points of the detected pose:

In the above screenshot, we can see the body part data along with the position coordinates, which will enable us to draw a colored mask around it.
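To get a feel for this data, the sketch below counts how many pixels were assigned to each body part. It assumes, following the body-pix documentation, that the result has a flat data array with one entry per pixel, holding a part ID from 0 to 23 or -1 for background. The helper name countPixelsPerPart is hypothetical.

```javascript
// Hypothetical helper: tallies pixels per body-part ID in a segmentation result.
function countPixelsPerPart(segmentation) {
  const counts = new Map();
  for (const partId of segmentation.data) {
    // -1 marks pixels that do not belong to any body part.
    if (partId === -1) continue;
    counts.set(partId, (counts.get(partId) ?? 0) + 1);
  }
  return counts;
}
```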

Drawing a colored mask on the canvas

Now that we have the coordinate data of segmented body parts, we can use this data to draw a colored mask around the appropriate body parts.

Fortunately, the body-pix library provides a method to color the mask: toColoredPartMask. To draw the mask, we can use the drawMask method from the bodyPix module, passing it the canvas, the video, the coloredBodyParts mask data, an opacity value, a blur amount, and a flip-horizontal flag. All of this is shown in the code snippet below:
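With the original snippet missing, here is a minimal sketch of the drawing step. The function name drawColoredMask, the bodyPixModule parameter, and the opacity, blur, and flip values are assumptions; in the app, bodyPixModule would be the imported bodyPix module.

```javascript
// `bodyPixModule` stands in for the imported @tensorflow-models/body-pix module.
function drawColoredMask(bodyPixModule, canvas, video, segmentation) {
  // Convert the per-pixel part IDs into a colored image mask.
  const coloredBodyParts = bodyPixModule.toColoredPartMask(segmentation);

  // Illustrative values, not taken from the original tutorial.
  const opacity = 0.7;
  const maskBlurAmount = 0;
  const flipHorizontal = false;

  // Draw the video frame with the colored mask blended on top of it.
  bodyPixModule.drawMask(canvas, video, coloredBodyParts, opacity, maskBlurAmount, flipHorizontal);
  return coloredBodyParts;
}
```

Inside detectBodySegments, this would be called with canvasReference.current and the video element once the segmentation result is available.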

And here’s a complete function:

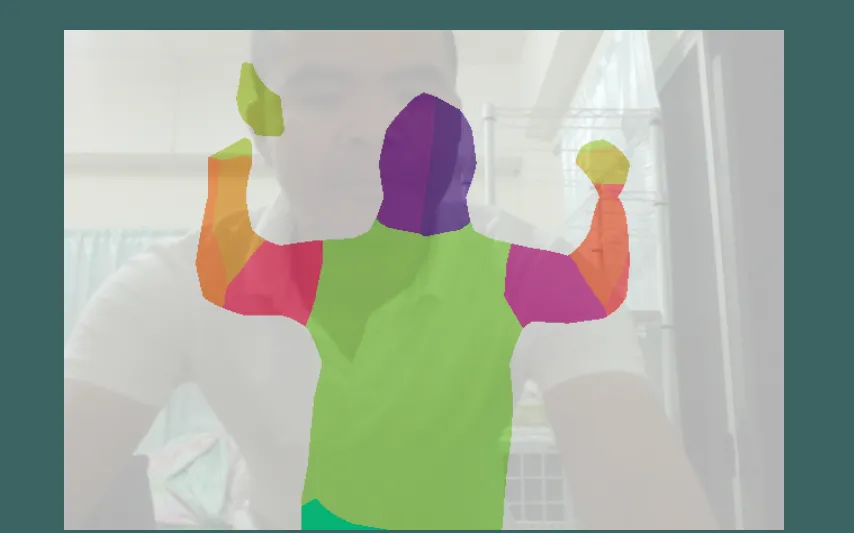

The final result looks like this:

We can see the formation of colored masks around the segmented body parts as the body moves.

We have successfully implemented real-time body segmentation using the TensorFlow model and Webcam feed in our React project.

Conclusion

In this tutorial, we learned how to use the body-pix TensorFlow.js model and the react-webcam library to segment out human body parts and draw colored masks over those segmented parts. Implementing this body segmentation React app was straightforward thanks to the availability of a pre-trained body-pix model.

I hope you’ve found this tutorial informative and useful. Body segmentation like this can be used in fashion-related apps to enable virtual try-ons or otherwise add effects to a person’s body.

In a deeper sense, it can also be used to determine the posture of the body parts, as the coordinates of each body part are collected separately. However, do note that human pose estimation is an established computer vision deep learning task that does this as well.

For a demo of the entire project, you can check out the CodeSandbox.
