Position Map Regression Networks (PRN) is a method to jointly regress dense alignment and 3D face shape in an end-to-end manner. In this article, I’ll provide a short explanation and discuss its applications in computer vision.

When I was a child, I imagined that (due to movies, of course), in the future, we’d be able to have these crazy holograms where you could see people talking to you as if they were there. These kinds of applications for computer vision suggest we aren’t that far from achieving something similar.

In the last few decades, a lot of important research groups in computer vision have made amazing advances in 3D face reconstruction and face alignment. Primarily, these groups have used CNNs as the de facto ANN for this task. However, the performance of these methods is restricted because of the limitations of 3D space defined by face model templates used for mapping.

Position Map Regression Networks (PRN)

In a recent paper, Yao Feng and others proposed an end-to-end method called Position Map Regression Networks (PRN) to jointly predict dense alignment and reconstruct 3D face shape. They claim their method surpasses all previous attempts at both 3D face alignment and reconstruction on multiple datasets.

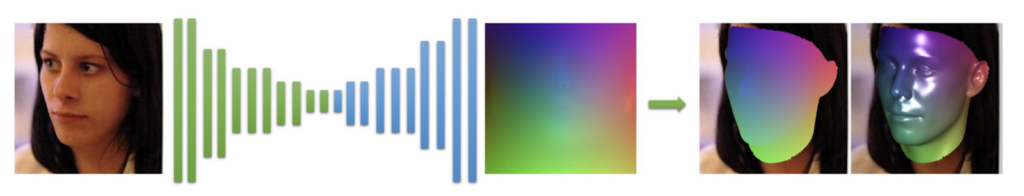

Specifically, they designed a UV position map, which is a 2D image recording the 3D coordinates of a complete facial point cloud, which maintains the semantic meaning at each UV polygon. They then train a simple encoder-decoder network with a weighted loss that focuses more on discriminative region to regress the UV position map from a single 2D facial image.

Their contributions can be summarized here (from the same paper):

Implementation

Their code is implemented in Python using TensorFlow. You can take a look at the official repo here:

If you want to run their examples you’ll need this:

- Python 2.7 (numpy, skimage, scipy)

- TensorFlow >= 1.4

- dlib (for detecting face. You do not have to install if you can provide bounding box information. )

- OpenCV 2 (for showing results)

The trained model can be downloaded at BaiduDrive or GoogleDrive.

Right now the code is in development and they will be adding more and more functionalities in the near future.

Applications

Basics (Evaluated in paper)

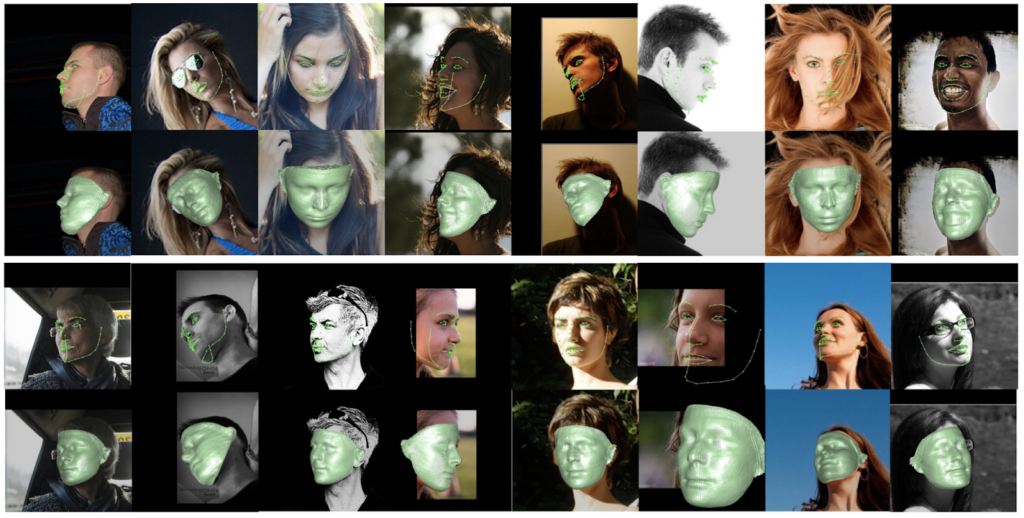

- Face Alignment: Dense alignment of both visible and non-visible points(including 68 key points).

- 3D Face Reconstruction: Get the 3D vertices and corresponding colours from a single image. Save the result as mesh data(.obj), which can be opened with Meshlab or Microsoft 3D Builder. Notice that, the texture of non-visible area is distorted due to self-occlusion.

To be added:

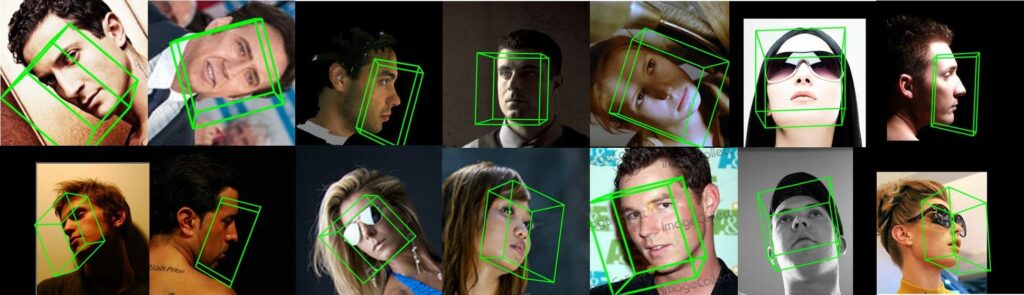

3D Pose Estimation: Rather than only use 68 key points to calculate the camera matrix(easily effected by expression and poses), we use all vertices(more than 40K) to calculate a more accurate pose.

Depth image:

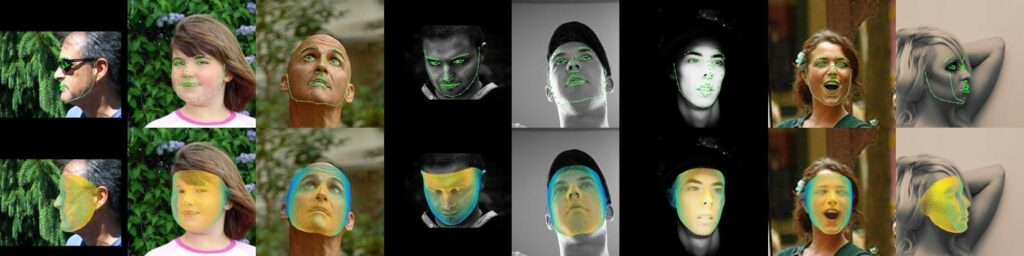

Texture Editing: Data Augmentation/Selfie Editing, modify special parts of input face, eyes for example:

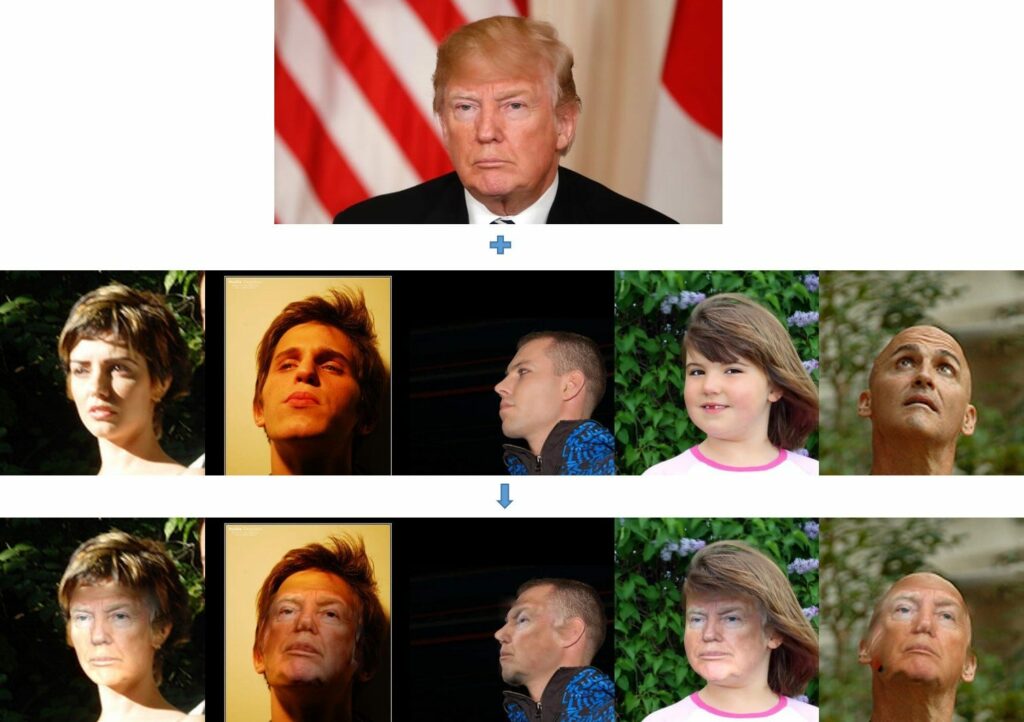

Face Swapping: replace the texture with another, then warp it to original pose and use Poisson editing to blend images.

Basic usage

- Clone the repository

- Download the PRN trained model at BaiduDrive or GoogleDrive, and put it into Data/net-data

- Run the test code. (test AFLW2000 images)

python run_basics.py #Can run only with python and tensorflow

- Run with your own images

python demo.py -i <inputDir> -o <outputDir> –isDlib True

run python demo.py –help for more details.

I’ll be using Deep Cognition’s Deep Learning Studio to test this and other frameworks in the near future, so start by creating an account :).

Thanks for reading this. I hope you found something interesting here 🙂

If you have questions just follow me on Twitter

and LinkedIn.

See you there 🙂

Discuss the post on Hacker News.

Comments 0 Responses