In this piece, we’ll look at some of the top open source machine learning projects in 2019, as ranked by MyBridge.

Real-Time-Voice-Cloning (13.7K ⭐️)

This project is an implementation of the SV2TTS paper with a vocoder that works in real time. Using this repo, you can clone a voice from about 5 seconds of audio and then generate arbitrary speech in real time.

The SV2TTS three-stage deep learning framework allows the creation of a numerical representation of a voice from a few seconds of audio.

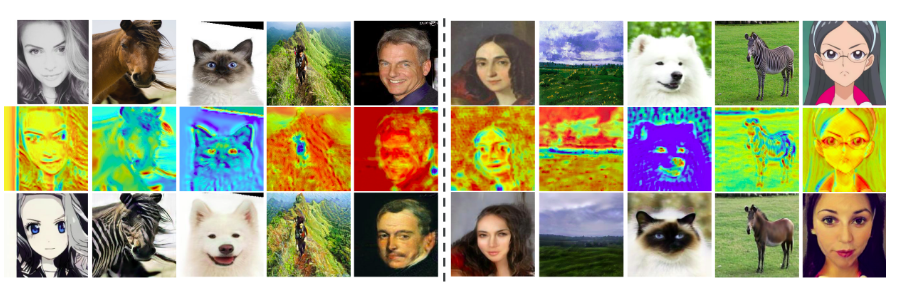

UGATIT: Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation (4.4K ⭐️)

This is a TensorFlow implementation of U-GAT-IT. The paper it’s based on proposes a method for unsupervised image-to-image translation that adds a new attention module and a new learnable normalization function, trained end to end.

The attention module directs the model to focus on more important areas, hence differentiating between source and target domains based on the attention map obtained by the auxiliary classifier. The AdaLIN (Adaptive Layer-Instance Normalization) function assists the model in controlling the amount of change in shape and texture by learned parameters.
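To make the AdaLIN idea concrete, here is a minimal pure-Python sketch that blends instance-normalized and layer-normalized activations with a mixing weight `rho`. It assumes a single sample stored as a list of channels and a single scalar `rho`; `gamma`, `beta`, and `rho` are passed in as plain arguments here rather than learned, so this illustrates the blending step only and is not the repo's implementation.

```python
import math


def _mean_var(values):
    """Return the mean and (biased) variance of a flat list of numbers."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var


def _normalize(values, mean, var, eps):
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def adalin(features, rho, gamma, beta, eps=1e-5):
    """Blend instance norm and layer norm for one sample.

    features: list of channels, each a flat list of spatial activations.
    rho:      mixing weight in [0, 1]; 1 means pure instance norm,
              0 means pure layer norm (a scalar here for simplicity).
    gamma, beta: per-channel scale and shift (illustrative inputs).
    """
    # Layer-norm statistics are shared across all channels of the sample.
    all_values = [v for channel in features for v in channel]
    layer_mean, layer_var = _mean_var(all_values)

    out = []
    for c, channel in enumerate(features):
        # Instance-norm statistics are computed per channel.
        inst_mean, inst_var = _mean_var(channel)
        a_in = _normalize(channel, inst_mean, inst_var, eps)
        a_ln = _normalize(channel, layer_mean, layer_var, eps)
        out.append([
            gamma[c] * (rho * i + (1.0 - rho) * l) + beta[c]
            for i, l in zip(a_in, a_ln)
        ])
    return out


features = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]
print(adalin(features, rho=0.5, gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```

With `rho` close to 1, each channel is normalized on its own, which tends to preserve content structure. With `rho` close to 0, statistics are shared across channels, which allows larger changes in shape and texture.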

RAdam: On The Variance of the Adaptive Learning Rate and Beyond (1.9K ⭐️)

This is an implementation of the paper “On the Variance of the Adaptive Learning Rate and Beyond”.

The authors propose RAdam, a variant of Adam that rectifies the variance of the adaptive learning rate. They report experimental results on image classification, language modeling, and neural machine translation tasks.
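The rectification can be illustrated with a short sketch that computes only the rectification factor for a given step. The function name is made up for this example, the default `beta2=0.999` is the usual Adam default and an assumption here, and the threshold of 4 follows the paper's description. This is not the full optimizer, and released implementations may differ in details.

```python
import math


def radam_rectification(step, beta2=0.999):
    """Return the variance rectification factor for a 1-based step.

    Returns None while the variance of the adaptive learning rate is
    treated as intractable; in that phase the adaptive term is skipped
    and the update falls back to a momentum-only step.
    """
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    beta2_t = beta2 ** step
    rho_t = rho_inf - 2.0 * step * beta2_t / (1.0 - beta2_t)
    if rho_t <= 4.0:
        return None
    numerator = (rho_t - 4.0) * (rho_t - 2.0) * rho_inf
    denominator = (rho_inf - 4.0) * (rho_inf - 2.0) * rho_t
    return math.sqrt(numerator / denominator)


for step in (1, 10, 100, 1000, 100000):
    print(step, radam_rectification(step))
```

The factor starts small and grows toward 1 as training proceeds, so early updates are damped in a way that resembles a learning-rate warmup.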

Dlrm: An implementation of a deep learning recommendation model (DLRM) (1.7K ⭐️)

This is a state-of-the-art deep learning recommendation model (DLRM) with implementations available in PyTorch and Caffe2.

The model has a specialized parallelization scheme that uses model parallelism on the embedding tables in order to mitigate memory constraints, while exploiting data parallelism to scale out computation in the fully-connected layers.
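The combination of the two kinds of parallelism can be sketched as a toy simulation in plain Python. Everything here is hypothetical: the function names, the round-robin table placement, and the use of lists to stand in for devices. It only shows the data movement, where each "device" owns whole embedding tables, and a simulated all-to-all exchange then regroups the looked-up vectors so each device holds its own slice of the batch for the fully-connected layers.

```python
def lookup_model_parallel(tables, batch_indices, num_devices):
    """Each device owns whole tables and looks up the full batch in them.

    tables:        list of tables, each a list of embedding vectors.
    batch_indices: one entry per sample, holding one row index per table.
    """
    per_device = [dict() for _ in range(num_devices)]
    for table_id, table in enumerate(tables):
        owner = table_id % num_devices  # assumption: round-robin placement
        per_device[owner][table_id] = [
            table[sample[table_id]] for sample in batch_indices
        ]
    return per_device


def regroup_data_parallel(per_device, batch_size, num_tables):
    """Simulated all-to-all exchange.

    Afterwards each device holds the vectors from every table, but only
    for its own contiguous slice of the batch.
    """
    num_devices = len(per_device)
    shard = -(-batch_size // num_devices)  # ceiling division
    regrouped = []
    for device in range(num_devices):
        samples = range(device * shard, min((device + 1) * shard, batch_size))
        regrouped.append([
            [per_device[t % num_devices][t][s] for t in range(num_tables)]
            for s in samples
        ])
    return regrouped


tables = [
    [[0.0], [1.0]],    # table 0
    [[10.0], [11.0]],  # table 1
    [[20.0], [21.0]],  # table 2
]
batch_indices = [[0, 1, 0], [1, 0, 1], [1, 1, 1], [0, 0, 0]]

per_device = lookup_model_parallel(tables, batch_indices, num_devices=2)
regrouped = regroup_data_parallel(per_device, len(batch_indices), len(tables))
print(regrouped[0])  # samples 0 and 1, ready for device 0's dense layers
```

Splitting by table keeps any single device from having to store every embedding table, while splitting the dense layers by batch slice lets that part of the computation scale with the number of devices.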

TecoGAN (1.3K ⭐️)

This repo contains the code for the TEmporally COherent GAN.

The paper proposes an adversarial training approach for video super-resolution that leads to temporally coherent solutions without sacrificing spatial detail. It also proposes a Ping-Pong loss that can remove temporal artifacts in recurrent networks without reducing perceptual quality.
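A rough sketch of the Ping-Pong idea follows: the frame sequence is played forward and then backward through a recurrent generator, and the outputs produced for the same frame on the two passes are penalized for differing. The `toy_generator` below is a hypothetical stand-in (a simple blend of the input with the previous output), and seeding the recurrence with the first frame is an assumption made for this example, so this is an illustration and not the TecoGAN code.

```python
import math


def toy_generator(frame, previous_output):
    """Hypothetical recurrent generator: blends input with the last output."""
    return [0.5 * x + 0.5 * p for x, p in zip(frame, previous_output)]


def ping_pong_loss(frames, generator):
    """Sum of L2 distances between forward-pass and backward-pass outputs."""
    n = len(frames)
    # Build the ping-pong sequence: a_1 ... a_n, a_(n-1) ... a_1.
    sequence = frames + frames[-2::-1]

    outputs = []
    previous = frames[0]  # assumption: seed the recurrence with frame 1
    for frame in sequence:
        previous = generator(frame, previous)
        outputs.append(previous)

    loss = 0.0
    for t in range(n - 1):
        forward = outputs[t]
        backward = outputs[len(outputs) - 1 - t]  # same frame, return pass
        loss += math.sqrt(sum((f - b) ** 2 for f, b in zip(forward, backward)))
    return loss


static_clip = [[1.0, 2.0]] * 4
moving_clip = [[0.0], [1.0], [2.0]]
print(ping_pong_loss(static_clip, toy_generator))
print(ping_pong_loss(moving_clip, toy_generator))
```

A generator that accumulates drift over time produces different outputs on the way back than on the way out, so minimizing this term discourages artifacts that build up across frames.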

Megatron-LM (1.1K ⭐️)

The Megatron repo is an ongoing research project on training large, powerful transformer language models at scale. It currently supports model-parallel, multi-node training of GPT2 and BERT.

It’s currently capable of training a 72-layer, 8.3 billion parameter GPT2 language model with 8-way model parallelism and 64-way data parallelism across 512 GPUs. It also trains BERT Large on 64 V100 GPUs in 3 days. Megatron achieves a language model perplexity of 3.15 and a SQuAD F1-score of 90.7.
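The GPU count follows directly from multiplying the two degrees of parallelism, which the short sketch below works through. The `rank_layout` helper and its assumption that consecutive ranks form one model-parallel group are hypothetical and used only to make the grouping visible. The per-GPU parameter figure assumes a perfectly even split, which real layers will only approximate.

```python
MODEL_PARALLEL = 8   # GPUs that jointly hold one copy of the model
DATA_PARALLEL = 64   # model replicas, each fed a different shard of data
TOTAL_PARAMS = 8.3e9


def total_gpus(model_parallel, data_parallel):
    """Every replica needs model_parallel GPUs, and there are data_parallel replicas."""
    return model_parallel * data_parallel


def rank_layout(rank, model_parallel):
    """Hypothetical layout: map a global rank to (replica id, shard id)."""
    return rank // model_parallel, rank % model_parallel


print(total_gpus(MODEL_PARALLEL, DATA_PARALLEL))  # 512
print(TOTAL_PARAMS / MODEL_PARALLEL)              # rough parameters per GPU
print(rank_layout(511, MODEL_PARALLEL))           # last GPU: replica 63, shard 7
```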

TensorNetwork (1K ⭐️)

TensorNetwork is an open-source library for implementing tensor network algorithms. It’s a tensor network wrapper for TensorFlow, JAX, PyTorch, and NumPy.

Tensor networks are sparse data structures that are currently being applied in machine learning research. The developers don’t advocate for use of the tool in production environments yet.

Python_autocomplete (708 ⭐️)

This is a project that was started to test how well an LSTM can autocomplete Python code. It’s based on TensorFlow.

Buffalo (365 ⭐️)

Buffalo is a fast and scalable, production-ready open source project for recommender systems. It utilizes system resources efficiently and hence achieves high performance on low-spec machines.

Realistic-Neural-Talking-Head-Models (312 ⭐️)

This is an implementation of the paper “Few-Shot Adversarial Learning of Realistic Neural Talking Head Models”. The paper proposes a model that creates personalized photorealistic talking head models. Its aim is to synthesize photorealistic personalized head images given a set of face landmarks.

This is applicable in telepresence, videoconferencing, the special effects industry, and multi-player games. The proposed system is able to initialize the parameters of both the generator and the discriminator in a person-specific way, so that training on only a few images can be done quickly.

Looking Ahead

Looking forward into 2020, we can certainly expect even more interesting open-source projects as machine learning tools become more advanced and easier to implement.
