In terms of usefulness and popularity, object detection is arguably at the forefront of the computer vision (CV) domain. A diverse, many-faceted application of CV, object detection helps solve some of the most explored problems: face detection and, more recently, pose estimation, hand/gesture tracking, AR filters, and so on.

Each of these has usually been treated as an independent problem, with its own model and a fixed set of applications. Combining several of these tasks into a single end-to-end solution that runs in real time, however, is a complex job: it requires simultaneous inference across multiple neural networks whose inputs and outputs depend on one another.

Google offers a solution built on its MediaPipe ML framework that combines face detection, pose estimation, and hand tracking into a single, efficient end-to-end pipeline.

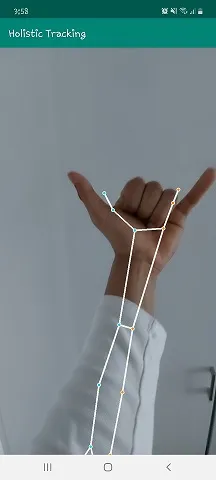

Dubbed “Holistic tracking”, the pipeline uses up to 8 different models that coordinate with each other in real time while minimizing memory transfer. This enables richer real-time machine perception of human activities, with applications such as fitness and sports analysis, gesture detection, sign language recognition, and AR effects.

Pipeline Design and Inner Workings

The ML pipeline uses multiple independent models, each optimized for its own task: pose, hand, or face detection. Although they coordinate with each other, they use separate inputs, because an input that suits one model may not suit another.

For example, the pose model takes a low-resolution (256×256) video frame as its input. If the face or hand regions were cropped from this frame, the resulting crops would have far too low a resolution to serve as accurate input for the other models. Instead, each model takes its own input frame, derived from the original captured video feed.

The outcome, thus, is a multi-stage pipeline, where each model gets its own frame that zeroes in on the corresponding region of interest (face, hand, etc.), with the resolution that’s deemed appropriate for that specific region.
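A quick back-of-the-envelope calculation shows why cropping from the 256×256 pose input is a problem. The sketch below assumes, purely for illustration, a face spanning 15% of the frame side, a 1080-pixel camera frame, and a 192×192 face-model input; only the 256×256 figure comes from the text.

```python
def crop_side_pixels(frame_side: int, roi_fraction: float) -> int:
    """Side length in pixels of a square ROI spanning `roi_fraction` of the frame side."""
    return round(frame_side * roi_fraction)


POSE_INPUT_SIDE = 256    # pose model input side, from the text
CAMERA_SIDE = 1080       # hypothetical: shorter side of a 1080p camera frame
FACE_FRACTION = 0.15     # hypothetical: face spans 15% of the frame side
FACE_MODEL_INPUT = 192   # hypothetical face-model input side

from_pose_frame = crop_side_pixels(POSE_INPUT_SIDE, FACE_FRACTION)
from_camera_frame = crop_side_pixels(CAMERA_SIDE, FACE_FRACTION)

for label, side in (("pose input", from_pose_frame), ("camera frame", from_camera_frame)):
    # How much the crop must be enlarged to fill the face model's input.
    upscale = FACE_MODEL_INPUT / side
    print(f"face crop from {label}: {side}x{side} px, upscaled {upscale:.1f}x")
```

Under these assumptions, a crop taken from the pose input is only 38×38 pixels and needs about 5× upscaling, while the same region cut from the original frame needs only about 1.2×.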

The pose estimation model therefore comes first in the pipeline; MediaPipe uses the BlazePose pose estimation model for this. To maximize performance, the pipeline assumes that the subject does not move significantly from frame to frame, so the result of the previous frame (i.e., the body region of interest) can be used to start inference on a new frame.
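The detect-once-then-track idea can be sketched as a small loop: the detector runs only when there is no ROI carried over from the previous frame, and a low landmark confidence is treated as "tracking lost". The `detect` and `landmark` callables, the ROI tuple layout, and the 0.5 threshold are hypothetical stand-ins, not MediaPipe's own interfaces.

```python
from typing import Callable, Optional, Tuple

ROI = Tuple[float, float, float, float]  # hypothetical: (x_center, y_center, width, height), normalized


class PoseTracker:
    """Runs the (expensive) detector only when no ROI from the previous frame is available."""

    def __init__(self,
                 detect: Callable[[object], Optional[ROI]],
                 landmark: Callable[[object, ROI], Tuple[float, ROI]],
                 min_confidence: float = 0.5):
        self.detect = detect            # frame -> ROI, or None if nobody is found
        self.landmark = landmark        # (frame, ROI) -> (confidence, ROI for the next frame)
        self.min_confidence = min_confidence
        self.roi: Optional[ROI] = None
        self.detector_calls = 0

    def process(self, frame) -> Optional[ROI]:
        if self.roi is None:
            self.detector_calls += 1
            self.roi = self.detect(frame)
            if self.roi is None:
                return None             # no person in this frame
        confidence, next_roi = self.landmark(frame, self.roi)
        if confidence < self.min_confidence:
            self.roi = None             # target lost: re-run the detector on the next frame
            return None
        self.roi = next_roi             # reuse this frame's result to seed the next one
        return next_roi
```

Because the detector is skipped whenever tracking succeeds, its cost is paid only on the first frame and after the target is lost.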

During fast movements, however, the tracker can lose the target, in which case the detector must re-localize it. Using the inferred pose landmarks, the pipeline then derives three ROI crops: one for each hand and one for the face.
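One way to picture deriving the three crops is to take a bounding box around the relevant pose landmarks and enlarge it. The index groups below follow the 33-point BlazePose topology (0 to 10 for the face, 15/17/19/21 and 16/18/20/22 for the wrists and hand points); the square shape and the margin of 1.0 are assumptions for illustration, not MediaPipe's exact rules.

```python
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y)

# Index groups from the 33-point BlazePose topology.
FACE_IDS = range(0, 11)            # nose, eyes, ears, mouth corners
LEFT_HAND_IDS = (15, 17, 19, 21)   # left wrist, pinky, index, thumb
RIGHT_HAND_IDS = (16, 18, 20, 22)  # right wrist, pinky, index, thumb


def square_roi(points: Sequence[Point], margin: float) -> Tuple[float, float, float]:
    """Return (x_center, y_center, side) of a square around `points`, enlarged by `margin`."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    cx = (min(xs) + max(xs)) / 2
    cy = (min(ys) + max(ys)) / 2
    side = max(max(xs) - min(xs), max(ys) - min(ys)) * (1 + margin)
    return cx, cy, side


def derive_rois(landmarks: Sequence[Point], margin: float = 1.0) -> Dict[str, Tuple[float, float, float]]:
    """Hypothetical ROI derivation: one square crop per hand and one for the face."""
    return {
        "face": square_roi([landmarks[i] for i in FACE_IDS], margin),
        "left_hand": square_roi([landmarks[i] for i in LEFT_HAND_IDS], margin),
        "right_hand": square_roi([landmarks[i] for i in RIGHT_HAND_IDS], margin),
    }
```

A generous margin matters most for the hands, because the pose model only sees the wrist and three finger points, not the whole hand.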

A re-crop model may also be used to refine these crops, and thereby their effective resolution, before they are passed to the child models. The pipeline then applies task-specific face and hand models to estimate their corresponding landmarks. Finally, all the keypoints (face, pose, and hands) are merged, enabling the pipeline to detect over 540 keypoints in real time.
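The "over 540" figure follows from the per-model landmark counts given in the module descriptions later in this article: 33 pose + 2 × 21 hand + 468 face = 543. The merge step is sketched below as a simple tagged concatenation; the tagging scheme is an assumption, and absent parts (e.g. a hand outside the frame) are simply skipped.

```python
from typing import List, Optional, Sequence, Tuple

POSE_LANDMARKS = 33   # BlazePose full-body topology
HAND_LANDMARKS = 21   # per hand
FACE_LANDMARKS = 468  # Face Mesh

Keypoint = Tuple[str, int, object]  # (body part, index within that part, coordinates)


def merge_keypoints(pose: Sequence, left_hand: Optional[Sequence],
                    right_hand: Optional[Sequence], face: Optional[Sequence]) -> List[Keypoint]:
    """Concatenate per-part landmarks into one tagged list; parts given as None are skipped."""
    merged: List[Keypoint] = []
    for part, points in (("pose", pose), ("left_hand", left_hand),
                         ("right_hand", right_hand), ("face", face)):
        if points is None:
            continue
        merged.extend((part, i, p) for i, p in enumerate(points))
    return merged


max_keypoints = POSE_LANDMARKS + 2 * HAND_LANDMARKS + FACE_LANDMARKS
print(max_keypoints)  # 543
```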

To avoid losing the target during fast movements, MediaPipe uses the pose prediction on every frame as an additional ROI, which reduces the pipeline's response time. This also ensures that the subsequent models don’t mix up body parts, such as the left and right hands or, when there are multiple people in a frame, the body parts of different people.
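A minimal sketch of how a per-frame pose prior could be combined with the ROI tracked from the previous frame: keep the tracked ROI while it still overlaps the pose-derived one, and switch to the pose-derived ROI when the hand has moved too fast for tracking to keep up. The intersection-over-union policy and the 0.5 threshold are assumptions, not MediaPipe's documented rule. Because each hand's prior comes from that person's own wrist landmarks, the left and right ROIs stay attached to the correct hand.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), normalized


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def choose_hand_roi(tracked: Optional[Box], pose_prior: Box, min_iou: float = 0.5) -> Box:
    """Keep the ROI tracked from the previous frame unless it has drifted away from
    the ROI implied by this frame's pose; in that case use the pose-derived ROI."""
    if tracked is None or iou(tracked, pose_prior) < min_iou:
        return pose_prior
    return tracked
```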

The low image resolution of 256×256 for the pose model means that the resulting ROIs for face and hands are too inaccurate to be used in the subsequent models. The re-crop models act as spatial transformers that correct this and are designed to be lightweight, costing only around 10% of the corresponding model’s inference time.
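Conceptually, a re-crop model outputs a small correction to a coarse ROI. The sketch below assumes a hypothetical parameterization (center shift in units of the ROI size plus a scale factor); the real models may also predict rotation or use a different encoding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Roi:
    cx: float    # center x, normalized
    cy: float    # center y, normalized
    size: float  # side length, normalized


def apply_recrop(roi: Roi, dx: float, dy: float, scale: float) -> Roi:
    """Apply a hypothetical re-crop correction: shift the center by (dx, dy),
    expressed in units of the current ROI size, and multiply the side by `scale`."""
    return Roi(roi.cx + dx * roi.size, roi.cy + dy * roi.size, roi.size * scale)


coarse = Roi(0.5, 0.5, 0.2)  # ROI derived from the low-resolution pose input
refined = apply_recrop(coarse, dx=0.1, dy=-0.05, scale=0.8)
print(refined)
```

At the quoted cost of about 10%, a re-crop step placed in front of a model that takes, say, 10 ms (a hypothetical figure) would add roughly 1 ms per frame.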

Structure

The pipeline is implemented as a MediaPipe graph containing a holistic landmark subgraph. The main graph throttles the images flowing downstream as a form of flow control.

The first incoming image passes through unaltered, and the subgraphs must finish their tasks before the next frame is let through. Any frames that arrive while the subgraphs are still processing are dropped. This keeps images and data from queuing up, which would otherwise increase latency and memory usage: a big win for any real-time mobile application.
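MediaPipe implements this throttling with its own FlowLimiterCalculator; the class below is an independent, simplified sketch of the same admit-one-drop-the-rest behavior, not that calculator's code. The simulation assumes, hypothetically, that processing one frame takes three frame intervals.

```python
class FlowLimiter:
    """Admit a frame only when fewer than `max_in_flight` frames are being processed;
    frames arriving in the meantime are dropped instead of queued."""

    def __init__(self, max_in_flight: int = 1):
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.dropped = 0

    def offer(self, frame) -> bool:
        if self.in_flight >= self.max_in_flight:
            self.dropped += 1
            return False
        self.in_flight += 1
        return True

    def finished(self) -> None:
        """Called when the downstream subgraphs finish a frame."""
        if self.in_flight > 0:
            self.in_flight -= 1


# Simulate 10 frames where each admitted frame takes 3 frame intervals to process.
limiter = FlowLimiter()
processed = []
busy_until = -1
for t in range(10):
    if t == busy_until:
        limiter.finished()
    if limiter.offer(t):
        processed.append(t)
        busy_until = t + 3

print(processed, limiter.dropped)  # [0, 3, 6, 9] 6
```

Dropping frames keeps the work always focused on the freshest image, at the cost of a lower effective frame rate when the models are slower than the camera.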

The holistic landmark subgraph uses three separate models internally:

1. The pose landmark module

The MediaPipe Pose Landmark model, built on BlazePose, provides high-fidelity body pose tracking, inferring 33 2D landmarks on the whole body from RGB video frames. It detects the landmarks of a single body pose; by default it covers the full body, but it can be configured to cover the upper body only, in which case it predicts just the first 25 landmarks. It can run on either CPU or GPU, depending on the type of module chosen.
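The relationship between the full-body and upper-body modes is easiest to see with the landmark names laid out in order. The names below follow the 33-point BlazePose topology (lower-cased from MediaPipe's `PoseLandmark` enum); the `select_landmarks` helper is a hypothetical illustration of "upper body = the first 25 landmarks".

```python
from typing import Dict, Sequence

POSE_LANDMARK_NAMES = [
    "nose", "left_eye_inner", "left_eye", "left_eye_outer",
    "right_eye_inner", "right_eye", "right_eye_outer",
    "left_ear", "right_ear", "mouth_left", "mouth_right",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_pinky", "right_pinky",
    "left_index", "right_index", "left_thumb", "right_thumb",
    "left_hip", "right_hip",                      # index 24: last upper-body landmark
    "left_knee", "right_knee", "left_ankle", "right_ankle",
    "left_heel", "right_heel", "left_foot_index", "right_foot_index",
]


def select_landmarks(landmarks: Sequence, upper_body_only: bool = False) -> Dict[str, object]:
    """Name each landmark; in upper-body mode keep only the first 25."""
    n = 25 if upper_body_only else 33
    return dict(zip(POSE_LANDMARK_NAMES[:n], landmarks[:n]))
```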

2. The hand landmark module

MediaPipe Hands is a high-fidelity hand and finger tracking solution. It infers 21 3D landmarks of a hand from a single frame. It combines two models: a palm detection model that operates on the full image and returns an oriented hand bounding box, and a hand landmark model that operates on the cropped image region defined by the palm detector and returns high-fidelity 3D hand keypoints. Depending on the module type, it detects landmarks for a single hand or for multiple hands. It can also run on either CPU or GPU.
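An "oriented" bounding box means the crop is rotated so the hand appears upright to the landmark model. A minimal sketch, assuming the orientation is taken from the wrist (index 0) to the middle-finger MCP joint (index 9) of the 21-point hand topology; MediaPipe's exact choice of reference points may differ.

```python
import math
from typing import Sequence, Tuple

WRIST, MIDDLE_FINGER_MCP = 0, 9  # indices in the 21-point hand topology


def hand_rotation(landmarks: Sequence[Tuple[float, float]]) -> float:
    """Counter-clockwise rotation (radians, in [-pi, pi)) that would make the
    wrist-to-middle-finger vector point straight up in the crop."""
    x0, y0 = landmarks[WRIST]
    x1, y1 = landmarks[MIDDLE_FINGER_MCP]
    # Image y grows downward, so negate dy to get a conventional (y-up) angle.
    angle = math.atan2(-(y1 - y0), x1 - x0)
    rotation = math.pi / 2 - angle
    # Wrap into [-pi, pi).
    return (rotation + math.pi) % (2 * math.pi) - math.pi
```

An upright hand yields a rotation of 0, while a hand pointing to the right needs a quarter turn counter-clockwise (π/2).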

3. The face landmark module

The MediaPipe Face Mesh estimates 468 3D face landmarks in real time on mobile devices. It employs deep neural networks to infer the 3D surface geometry, requiring only a single camera input without the need for a dedicated depth sensor. Using lightweight model architectures with optional GPU acceleration throughout the pipeline, it can track landmarks on a single face or on multiple faces. Additionally, it establishes a metric 3D space and uses the face landmark screen positions to estimate face geometry within that space.
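Working with "screen positions" usually starts by converting normalized landmarks to pixels. A minimal sketch, assuming MediaPipe's documented convention that x and y are normalized to image width and height and that z uses roughly the same scale as x; the two sample landmarks are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float, float]


def to_pixels(landmarks: Sequence[Landmark], width: int, height: int) -> List[Landmark]:
    """Convert normalized (x, y, z) landmarks to pixel units.
    z is scaled by the image width because it shares x's scale (an approximation)."""
    return [(x * width, y * height, z * width) for x, y, z in landmarks]


face = [(0.5, 0.25, -0.1), (0.6, 0.25, -0.05)]  # two hypothetical landmarks
px = to_pixels(face, width=640, height=480)
# Distance between the two landmarks in the image plane, in pixels.
print(px[0], math.dist(px[0][:2], px[1][:2]))
```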

Performance and APIs

The ML pipeline combines highly optimized models with significantly upgraded pre- and post-processing algorithms (for example, affine transformations). This reduces processing time on most current mobile devices and keeps the complexity of the pipeline in check.
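As an example of the post-processing involved, landmarks predicted inside a rotated, scaled crop must be mapped back to full-image coordinates with an affine transform (rotation, scale, translation). The sketch below assumes a square crop of `size` pixels centered at (`cx`, `cy`) and rotated by `rotation` radians in image coordinates; the parameter names are illustrative, not MediaPipe's.

```python
import math
from typing import Tuple


def crop_to_image(u: float, v: float, cx: float, cy: float,
                  size: float, rotation: float) -> Tuple[float, float]:
    """Map a landmark (u, v), normalized to [0, 1] within the crop, back to image pixels."""
    # Offset from the crop center, scaled from normalized units to pixels.
    dx = (u - 0.5) * size
    dy = (v - 0.5) * size
    # Rotate the offset by the crop's rotation, then translate to the crop center.
    cos_r, sin_r = math.cos(rotation), math.sin(rotation)
    x = cx + dx * cos_r - dy * sin_r
    y = cy + dx * sin_r + dy * cos_r
    return x, y
```

Expressing the mapping as one affine transform keeps it cheap and easy to move to the GPU alongside the other pre-processing steps.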

Furthermore, moving the pre-processing computations to the GPU speeds up the pipeline on average across devices. As a result, MediaPipe Holistic can run at near real-time speeds, even on mid-tier devices and in the browser.

For each frame, the pipeline coordinates up to 8 models: the pose detector, the pose landmark model, 3 re-crop models, and 3 keypoint models for the hands and face. Since the models are mostly independent, they can be replaced with lighter or heavier versions (or even turned off completely) depending on performance and accuracy requirements.
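Because the models are mostly independent, the pipeline's composition can be thought of as a simple configuration. In this sketch the model keys and the "lite"/"full" variant labels are hypothetical names, not MediaPipe identifiers; it only shows how the count of 8 arises and how swapping or disabling models changes it.

```python
from typing import Dict, Iterable, Optional

# The 8 models named in the text; keys are illustrative only.
MODELS = (
    "pose_detector", "pose_landmark",
    "face_recrop", "left_hand_recrop", "right_hand_recrop",
    "face_landmark", "left_hand_landmark", "right_hand_landmark",
)


def configure(disabled: Iterable[str] = (),
              variants: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Map each enabled model to a variant name ("full" unless overridden)."""
    disabled = set(disabled)
    variants = variants or {}
    unknown = (disabled | set(variants)) - set(MODELS)
    if unknown:
        raise ValueError(f"unknown models: {sorted(unknown)}")
    return {m: variants.get(m, "full") for m in MODELS if m not in disabled}


# Example: drop face processing and use a lighter pose landmark model.
cfg = configure(disabled=("face_recrop", "face_landmark"), variants={"pose_landmark": "lite"})
print(len(configure()), len(cfg))  # 8 6
```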

Also, once the pose is inferred, the pipeline knows precisely whether hands and face are within the frame, allowing it to skip inference on those body parts if they are absent.
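A minimal sketch of that gating decision, assuming each pose landmark carries a visibility score (as MediaPipe Pose landmarks do) and using a hypothetical rule: run a part's model only if at least one of its pose landmarks is visible and inside the frame. The 0.5 threshold is an assumption.

```python
from typing import Dict, Sequence, Tuple

# Index groups from the 33-point BlazePose topology.
FACE_IDS = range(0, 11)
LEFT_HAND_IDS = (15, 17, 19, 21)
RIGHT_HAND_IDS = (16, 18, 20, 22)


def parts_to_run(pose: Sequence[Tuple[float, float]], visibility: Sequence[float],
                 min_visibility: float = 0.5) -> Dict[str, bool]:
    """Decide which downstream models to run from normalized pose landmarks."""
    def present(ids) -> bool:
        return any(visibility[i] >= min_visibility
                   and 0.0 <= pose[i][0] <= 1.0 and 0.0 <= pose[i][1] <= 1.0
                   for i in ids)

    return {"face": present(FACE_IDS),
            "left_hand": present(LEFT_HAND_IDS),
            "right_hand": present(RIGHT_HAND_IDS)}
```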

If you want to try it out for yourself, you have quite a few options. You can build and run it on a desktop using the Python, JavaScript, or C++ APIs, configured to run on either CPU or GPU; remember to install the MediaPipe package first. You can also install it on iOS or Android, again with several options, such as building from the command line or using Android Studio. Let’s look at how to build with Bazel from the command line.

1. To build an Android example app, build against the corresponding android_binary build target. For this app, the target is mediapipe/examples/android/src/java/com/google/mediapipe/apps/holistictrackinggpu:holistictrackinggpu, so we need the following command:

2. Install on the device using:

Alternatively, you can install this pre-built APK for Arm64 devices. It’s not perfect and, sadly, doesn’t seem to support the front-facing camera, but it works decently and is a good showcase of the holistic pipeline.
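For the desktop Python route, a minimal webcam loop might look like the sketch below. It assumes the legacy `mp.solutions.holistic` interface and OpenCV (`opencv-python`); newer MediaPipe releases are moving toward the Tasks API, so check the current documentation. The imports are placed inside `run_webcam` so the `count_keypoints` helper (a hypothetical name) can be used without those packages installed.

```python
def count_keypoints(results) -> dict:
    """Count landmarks per body part in a Holistic results object; absent parts count as 0."""
    parts = ("pose_landmarks", "face_landmarks", "left_hand_landmarks", "right_hand_landmarks")
    counts = {}
    for name in parts:
        landmark_list = getattr(results, name, None)
        counts[name] = len(landmark_list.landmark) if landmark_list is not None else 0
    return counts


def run_webcam(max_frames: int = 100) -> None:
    # Imported lazily so this module loads even without MediaPipe/OpenCV.
    import cv2
    import mediapipe as mp

    cap = cv2.VideoCapture(0)
    with mp.solutions.holistic.Holistic(static_image_mode=False, model_complexity=1) as holistic:
        for _ in range(max_frames):
            ok, frame_bgr = cap.read()
            if not ok:
                break
            # MediaPipe expects RGB input; OpenCV captures frames as BGR.
            results = holistic.process(cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB))
            print(count_keypoints(results))
    cap.release()
```

Calling `run_webcam()` prints, per frame, how many landmarks were found for each part; a hand or face that is out of view shows up as 0.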

Use in Practice

According to Google, this integration of pose, hand tracking, and face detection all in the same pipeline will result in new applications such as remote gesture interfaces, full-body augmented reality, sign language recognition, sports/activity analytics, and more.

With more than 540 keypoints per frame, the Holistic tracking API offers better, simultaneous perception of body language, gestures, and facial expressions. To demonstrate the capabilities, use cases, and performance of MediaPipe Holistic, Google engineers built a simple remote control interface that runs locally in the browser and lets users interact with it visually, without any additional input hardware (e.g., touchscreens, keyboards, or mice).

A user can interact with objects on the screen, type on a virtual keyboard while sitting on the sofa, point to or touch specific face regions, or trigger specific actions such as muting or turning off the camera.

Behind the scenes, these interactions rely on accurate hand detection followed by gesture recognition mapped to a “trackpad” space, enabling remote control from up to 4 meters away. This gesture control method can enable various use cases where other human-computer interaction modalities are inconvenient.
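The “trackpad” mapping can be sketched as a linear map from a rectangle in the camera image to the screen, clamped at the edges. This assumes the pointer follows the index fingertip (landmark 8 in the 21-point hand topology); the rectangle and screen size are hypothetical parameters, not values from Google's demo.

```python
from typing import Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), normalized image coords


def to_screen(tip_x: float, tip_y: float, pad: Rect,
              screen_w: int, screen_h: int) -> Tuple[int, int]:
    """Map a fingertip position inside the virtual trackpad `pad` to screen pixels."""
    x_min, y_min, x_max, y_max = pad
    # Position within the pad as a fraction, clamped so the pointer stays on screen.
    u = min(max((tip_x - x_min) / (x_max - x_min), 0.0), 1.0)
    v = min(max((tip_y - y_min) / (y_max - y_min), 0.0), 1.0)
    return round(u * (screen_w - 1)), round(v * (screen_h - 1))
```

Using a pad smaller than the full camera frame lets small hand movements, made far from the camera, cover the whole screen.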

Google hopes that the Holistic tracking solution will inspire community members to research and build new, unique applications. Google is already using it as a stepping stone for future research into challenging domains such as sign language recognition, touchless control interfaces, and a variety of other complex use cases.

Editor’s Note: Heartbeat is a contributor-driven online publication and community dedicated to providing premier educational resources for data science, machine learning, and deep learning practitioners. We’re committed to supporting and inspiring developers and engineers from all walks of life.

Editorially independent, Heartbeat is sponsored and published by Comet, an MLOps platform that enables data scientists & ML teams to track, compare, explain, & optimize their experiments. We pay our contributors, and we don’t sell ads.

