Neural networks have many practical use cases, from identifying street signs and people in self-driving cars to recognizing text and transcribing audio.

But one of the more fun use cases is applying the “style” of one image to another. Neural networks can be trained to separate the content of a painting from its style.

The learned style can then be applied to any image, producing a set of fun photo filters that replicate famous paintings.
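How can a network pull style apart from content? In the original neural style transfer work by Gatys et al., style is described by a Gram matrix. A Gram matrix holds the correlations between the feature channels of a convolutional layer, summed over every spatial position. Because of that sum, information about *where* things appear in the image (the content) drops out.

The Swift sketch below computes an unnormalized Gram matrix from a plain array. The [channel][position] layout and the sample values are illustrative assumptions. Nothing here reflects how the Fritz models are implemented internally.

```swift
/// Computes the (unnormalized) Gram matrix G[i][j] = sum over positions p of F[i][p] * F[j][p].
/// `features` is laid out as [channel][spatial position], an illustrative assumption.
func gramMatrix(_ features: [[Double]]) -> [[Double]] {
    let channels = features.count
    var gram = Array(repeating: Array(repeating: 0.0, count: channels), count: channels)
    for i in 0..<channels {
        for j in 0..<channels {
            // Dot product of channel i and channel j across all spatial positions.
            var sum = 0.0
            for p in 0..<features[i].count {
                sum += features[i][p] * features[j][p]
            }
            gram[i][j] = sum
        }
    }
    return gram
}

// Two channels, three spatial positions.
let original: [[Double]] = [[1, 2, 3], [0, 1, 0]]
// The same values with the positions shuffled identically in every channel.
let shuffled: [[Double]] = [[3, 1, 2], [0, 0, 1]]

print(gramMatrix(original))                         // [[14.0, 2.0], [2.0, 1.0]]
print(gramMatrix(shuffled) == gramMatrix(original)) // true: spatial layout is summed away
```

Real implementations compute this on network activations and usually normalize by the number of positions. The point here is only that rearranging positions leaves the style summary unchanged.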

Style transfer has been around for a few years now, but the existing implementations haven’t been fast enough to run in real-time on a mobile phone.

At Fritz, we’ve been working hard to take popular algorithms and optimize them to run directly on mobile phones. We’ve made great progress with the style transfer model, and today we’ll show you how you can build an app that stylizes live video using the Fritz SDK.

Creating a basic demo app

We will be creating a simple app that uses style transfer to transform live video into van Gogh’s Starry Night. It should take about 30 minutes to work through. The completed demo app is available here. For a more full-featured style transfer demo, you can check out the example in Fritz AI Studio.

Step 1: Set Up Your Xcode Project

First things first, create a new Xcode project. If you prefer, fork the Fritz Style Transfer Demo project instead; each commit in the demo app corresponds to a step in this tutorial. Before moving on to the next step, make sure your empty project builds (you will likely need to change the Bundle Identifier).

Step 2: Install Fritz

To use the Style Transfer feature, you need to create a Fritz account and set up the SDK in your app. This is a quick process that shouldn’t take more than 5 minutes. The Fritz SDK is available on CocoaPods.

Overview of Fritz Setup Steps:

- Create an account here

- Create a project. Projects group your apps together, e.g. the iOS and Android versions of the same app.

- Add an app to the project. You will need the bundle identifier you used in Step 1.

- After following the directions in the webapp, run your app with the Fritz SDK configured.

Today we’re just using the Fritz SDK for style transfer, but you can also use the Fritz SDK to create apps that can label the contents of an image (think “not hotdog”) or detect the location of objects in an image. Check out the docs for all the machine learning powered features you can build.

After you’ve initialized your app per the instructions in the webapp, you’re ready to start building your Style Transfer app.

Step 3: Add PreviewView to Display Live Video

In this step, we’ll set up a view for displaying live video from the camera, so that the app is ready to show the output of the style transfer model.

To do this, we’re going to add a UIImageView to our ViewController.

```swift
import UIKit
import AVFoundation

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    var previewView: UIImageView!

    override func viewDidLoad() {
        super.viewDidLoad()

        // Add the preview view as a subview that fills the screen.
        previewView = UIImageView(frame: view.bounds)
        // Scale frames to cover the whole view, cropping edges if aspect ratios differ.
        previewView.contentMode = .scaleAspectFill
        view.addSubview(previewView)
    }

    override func viewWillLayoutSubviews() {
        super.viewWillLayoutSubviews()
        // Keep the preview sized to the view, e.g. after a rotation.
        previewView.frame = view.bounds
    }
}
```

This way, the preview view is created and added to the screen as soon as the view controller’s view loads.

Build the project here to make sure that all is well.
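A quick aside on the .scaleAspectFill content mode set above: it scales each frame uniformly until the frame covers the entire view, then crops whatever spills over. For example, a 480x640 portrait frame shown on a screen with a taller aspect ratio loses a little on the left and right.

The arithmetic is sketched below with plain Doubles. The function name and the 375x812 view size are hypothetical, chosen only for illustration.

```swift
/// Uniform scale factor that makes an image of size imageWidth x imageHeight
/// completely cover a view of size viewWidth x viewHeight, as aspect fill does.
func aspectFillScale(imageWidth: Double, imageHeight: Double,
                     viewWidth: Double, viewHeight: Double) -> Double {
    // Take the larger ratio so both scaled dimensions reach at least the view size.
    return max(viewWidth / imageWidth, viewHeight / imageHeight)
}

// A portrait 480x640 camera frame shown in a hypothetical 375x812-point view.
let scale = aspectFillScale(imageWidth: 480, imageHeight: 640, viewWidth: 375, viewHeight: 812)
let scaledWidth = 480 * scale   // wider than the view, so the sides are cropped
let scaledHeight = 640 * scale  // matches the view height
print(scale, scaledWidth, scaledHeight)
```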

Step 4: Set Up the Camera and Display Live Video

Next, we’re going to add the camera to our app and simply display live video.

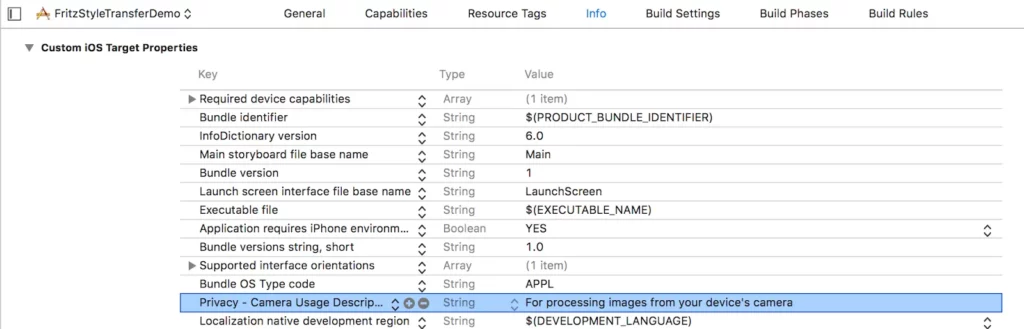

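First, we need to add a camera permission to the Info.plist. Add the key “Privacy - Camera Usage Description” (raw key NSCameraUsageDescription) with a short description of why the app uses the camera. Without it, iOS terminates the app when it tries to access the camera.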

To display frames from the camera, we need to configure AVCaptureSession and AVCaptureVideoDataOutput objects.

When we are configuring the AVCaptureSession, we need to use the 640×480 sessionPreset in preparation for sending the data to the style transfer model:

```swift
import UIKit
import AVFoundation

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    // ...

    private lazy var captureSession: AVCaptureSession = {
        let session = AVCaptureSession()

        // Use the back wide-angle camera; fall back to an empty session if it is unavailable.
        guard
            let backCamera = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back),
            let input = try? AVCaptureDeviceInput(device: backCamera),
            session.canAddInput(input)
        else { return session }

        session.addInput(input)

        // The style transfer model takes a 640x480 image as input and outputs an image of the same size.
        session.sessionPreset = .vga640x480

        return session
    }()

    // ...
}
```

Next, configure the AVCaptureVideoDataOutput and add it to the session:

```swift
import UIKit
import AVFoundation

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    var previewView: UIImageView!

    // ... captureSession, defined as in the previous snippet ...

    override func viewDidLoad() {
        super.viewDidLoad()

        // Add the preview view as a subview (as in Step 3).
        previewView = UIImageView(frame: view.bounds)
        previewView.contentMode = .scaleAspectFill
        view.addSubview(previewView)

        let videoOutput = AVCaptureVideoDataOutput()
        // Request 32-bit BGRA pixel buffers so frames can be converted to UIImages for display.
        videoOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
        // Frames are delivered to captureOutput(_:didOutput:from:) on this serial background queue.
        videoOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "VideoDataOutputQueue"))

        if captureSession.canAddOutput(videoOutput) {
            captureSession.addOutput(videoOutput)
        }

        // startRunning() blocks until the session starts, so call it off the main thread.
        DispatchQueue.global(qos: .userInitiated).async {
            self.captureSession.startRunning()
        }
    }
}
```

To make sure everything is hooked up correctly, let’s display the raw video coming from the camera in the preview view. When you run the app, you should see normal video. Because captureOutput(_:didOutput:from:) is called on the background video queue and UIKit must only be updated from the main thread, we hand each frame to the main queue asynchronously:

```swift
import UIKit
import AVFoundation
import Fritz

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    // ...

    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        let image = FritzVisionImage(sampleBuffer: sampleBuffer, connection: connection)

        // Rotate the camera frame to match the device orientation.
        guard let rotatedImage = image.rotate() else { return }

        // Update the preview on the main queue without blocking the video queue.
        DispatchQueue.main.async {
            self.previewView.image = UIImage(pixelBuffer: rotatedImage)
        }
    }
}
```

Run this and you should see live video in your app!
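The video output above is configured for kCVPixelFormatType_32BGRA, which stores each pixel as four bytes in blue, green, red, alpha order. Rows can be padded, so a pixel’s byte offset should use the buffer’s bytes-per-row (CVPixelBufferGetBytesPerRow in Core Video) rather than width * 4.

The sketch below reads one pixel from a plain byte array under those assumptions. The helper name and the sample data are hypothetical.

```swift
/// Reads the (r, g, b, a) components of one pixel from a 32-bit BGRA buffer.
/// `bytes` stands in for the pixel buffer's memory; `bytesPerRow` may exceed
/// width * 4 because rows can be padded.
func bgraPixel(in bytes: [UInt8], bytesPerRow: Int, x: Int, y: Int)
    -> (r: UInt8, g: UInt8, b: UInt8, a: UInt8) {
    let offset = y * bytesPerRow + x * 4  // 4 bytes per pixel
    // Memory order is B, G, R, A.
    return (r: bytes[offset + 2], g: bytes[offset + 1], b: bytes[offset], a: bytes[offset + 3])
}

// A hypothetical 2x2 image with 12 bytes per row (8 bytes of pixels + 4 bytes of padding).
let buffer: [UInt8] = [
    255, 0, 0, 255,   0, 255, 0, 255,   0, 0, 0, 0,  // row 0: blue, green, padding
    0, 0, 255, 255,   10, 20, 30, 255,  0, 0, 0, 0,  // row 1: red, (r 30, g 20, b 10), padding
]
let pixel = bgraPixel(in: buffer, bytesPerRow: 12, x: 0, y: 1)
print(pixel.r, pixel.g, pixel.b)  // 255 0 0
```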

Step 5: Add some style

Once you have the video displaying to your preview view, it’s time to add the style transfer model!

First, update your Podfile to include the Style Transfer Model:

Initialize the style transfer model as a variable in the view controller and run the model on the captureOutput sample buffer:

```swift
import UIKit
import AVFoundation
import Fritz

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    // Created lazily, the first time a frame arrives.
    lazy var styleModel = FritzVisionStyleModel.starryNight

    // ...

    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        let image = FritzVisionImage(sampleBuffer: sampleBuffer, connection: connection)

        // Run the style transfer model on the current frame.
        guard let stylizedImage = try? styleModel.predict(image) else {
            print("Error encountered running Style Model")
            return
        }

        let styled = UIImage(pixelBuffer: stylizedImage)

        // Update the preview on the main queue.
        DispatchQueue.main.async {
            self.previewView.image = styled
        }
    }
}
```

Run the app to see live style transfer in action! There are 11 different style transfer models included out of the box.

Congrats! You’ve made your first app using real-time Style Transfer!
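Style transfer is much heavier than displaying raw frames, so you may want to check that the stylized preview really keeps up in real time. One simple approach is to keep the timestamps of recent frames and average over a sliding window. This is an assumption on our part, not something the Fritz SDK provides. The FrameRateCounter type below is hypothetical.

```swift
/// Estimates frames per second from the timestamps (in seconds) of recent frames.
/// Hypothetical helper; not part of the Fritz SDK.
struct FrameRateCounter {
    private var timestamps: [Double] = []
    let windowSize: Int

    init(windowSize: Int = 30) {
        self.windowSize = windowSize
    }

    /// Records a frame and returns the current estimate, or nil until two distinct timestamps are seen.
    mutating func record(timestamp: Double) -> Double? {
        timestamps.append(timestamp)
        // Keep only the most recent `windowSize` timestamps.
        if timestamps.count > windowSize {
            timestamps.removeFirst(timestamps.count - windowSize)
        }
        guard timestamps.count >= 2, let first = timestamps.first, let last = timestamps.last,
              last > first else { return nil }
        // (n - 1) frame intervals elapsed between the first and last timestamp.
        return Double(timestamps.count - 1) / (last - first)
    }
}

var counter = FrameRateCounter(windowSize: 5)
var estimate: Double?
for frame in 0..<10 {
    // Simulated frames arriving every 0.05 s, i.e. 20 fps.
    estimate = counter.record(timestamp: Double(frame) * 0.05)
}
print(estimate ?? 0)
```

Inside captureOutput(_:didOutput:from:), you could feed the counter CMTimeGetSeconds(CMSampleBufferGetPresentationTimeStamp(sampleBuffer)). AVCaptureVideoDataOutput discards late frames by default (alwaysDiscardsLateVideoFrames), so frames the model cannot keep up with never reach the delegate. The estimate should therefore roughly track how many frames are actually being stylized.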

Check out Fritz AI Studio for a more complex implementation of Style Transfer. It uses a gesture recognizer to switch the style transfer model when you tap the screen.
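Tap-to-switch comes down to keeping an index into a list of models and advancing it each time a UITapGestureRecognizer fires, wrapping around at the end. The sketch below shows only that bookkeeping, as a hypothetical generic type, so it does not assume any particular Fritz model names. In the app, you would fill it with the style models you want (for example FritzVisionStyleModel.starryNight).

```swift
/// Cycles through a fixed list of items (for example, style models), wrapping around.
/// Hypothetical helper for a tap-to-switch gesture.
struct StyleCycler<Style> {
    private let styles: [Style]
    private var index = 0

    init(styles: [Style]) {
        precondition(!styles.isEmpty, "Need at least one style")
        self.styles = styles
    }

    /// The style currently in use.
    var current: Style { styles[index] }

    /// Advances to the next style (wrapping to the first) and returns it.
    mutating func next() -> Style {
        index = (index + 1) % styles.count
        return styles[index]
    }
}

// Strings stand in for model objects here; "style B" and "style C" are placeholders.
var cycler = StyleCycler(styles: ["starry night", "style B", "style C"])
print(cycler.next())  // style B
print(cycler.next())  // style C
print(cycler.next())  // starry night
```

In the gesture handler, you would call next() and assign the result to styleModel. Note that captureOutput(_:didOutput:from:) runs on the video queue while taps arrive on the main queue. It is therefore safer to perform that assignment on the video queue (for example, with async on the queue passed to setSampleBufferDelegate) than to mutate the property from the main thread.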

Flexible Core ML Models

With the release of Core ML 2, Apple added the ability to create models that accept flexible input and output sizes. Because our style models are fully convolutional, you can create your own styles that can be applied to images of any size. To change the input size of the model, set the flexibleModelDimensions option on FritzVisionStyleModelOptions:
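Why does a fully convolutional design make flexible sizes possible? A convolution’s weights do not depend on the image size; only the output dimensions do, via out = floor((in + 2 * padding - kernel) / stride) + 1.

The sketch below pushes several input sizes through a hypothetical stack of layers loosely shaped like a feed-forward style network: two stride-2 downsampling convolutions followed by two 2x upsampling steps. The layer list is an illustrative assumption, not the actual Fritz architecture.

```swift
/// A hypothetical layer description, just enough to track one spatial dimension.
enum Layer {
    case conv(kernel: Int, stride: Int, padding: Int)
    case upsample(factor: Int)  // e.g. nearest-neighbor upsampling
}

/// Output length along one spatial dimension after a single layer.
func outputSize(_ input: Int, through layer: Layer) -> Int {
    switch layer {
    case let .conv(kernel, stride, padding):
        // Integer division floors for non-negative values.
        return (input + 2 * padding - kernel) / stride + 1
    case let .upsample(factor):
        return input * factor
    }
}

/// Illustrative encoder/decoder stack; not the actual Fritz model.
let layers: [Layer] = [
    .conv(kernel: 9, stride: 1, padding: 4),
    .conv(kernel: 3, stride: 2, padding: 1),  // downsample by 2
    .conv(kernel: 3, stride: 2, padding: 1),  // downsample by 2
    .upsample(factor: 2),
    .conv(kernel: 3, stride: 1, padding: 1),
    .upsample(factor: 2),
    .conv(kernel: 3, stride: 1, padding: 1),
    .conv(kernel: 9, stride: 1, padding: 4),
]

// The same weights work for every size; only the output shape changes.
for size in [480, 640, 1080] {
    let out = layers.reduce(size) { outputSize($0, through: $1) }
    print(size, "->", out)  // 480 -> 480, 640 -> 640, 1080 -> 1080
}
```

With this particular stack, multiples of 4 come back unchanged, while an input of 1081 comes back as 1084. That difference shows how downsampling and upsampling layers can constrain which sizes round-trip exactly. Back in the app, the snippet below requests a higher resolution through the Fritz options object.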

```swift
import UIKit
import AVFoundation
import Fritz

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

    lazy var styleModel = FritzVisionStyleModel.starryNight

    // ...

    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        let image = FritzVisionImage(sampleBuffer: sampleBuffer, connection: connection)

        // Ask the flexible model to run at a higher resolution.
        let options = FritzVisionStyleModelOptions()
        options.flexibleModelDimensions = FlexibleModelDimensions.highResolution

        guard let stylizedImage = try? styleModel.predict(image, options: options) else {
            print("Error encountered running Style Model")
            return
        }

        // Display the result on the main queue, as before.
        let styled = UIImage(pixelBuffer: stylizedImage)
        DispatchQueue.main.async {
            self.previewView.image = styled
        }
    }
}
```

Build your own Styles

If you like what you see and want to build your own styles, check out 20-Minute Masterpiece: Training your own style transfer model.

Jameson Toole shows how you can use a Fritz AI training template to create a totally unique style from your own images.

Our goal is to make it easier for developers to get into mobile machine learning. With the Fritz SDK and the Style Transfer API, you can now take any image and transform it into a work of art. We can’t wait to see what you’ll create! Try it out and let us know what you think.

For a high-level look at style transfer, check out our guide, which covers the basics, model architectures, use cases, and more.
