Doing cool things with data!

Tracking is an important problem in computer vision. It involves following an object through a sequence of frames: an ID is assigned to the object the first time it appears, and that ID is carried forward in subsequent frames.

The use of tracking is exploding with applications in retail stores, self-driving cars, security and surveillance, motion capture systems, and many more.

Tracking is a challenging problem for several reasons — tracked items can get fully or partially occluded, tracked items may look similar to each other (causing ID switching), and an object may disappear completely only to reappear later.

Though these challenges cannot be completely removed, they can be reduced by wisely choosing tracking and detection algorithms and combining them with human intelligence.

In this post, we’re going to explore a trending use case of tracking—people walking outside a retail store. We researched, experimented, failed, experimented again, and finally achieved very good accuracy with real-time tracking on a low-compute edge device!

But why an edge device? ML models can be deployed in the cloud, on local hardware, or on the edge. Deployment on the edge is usually the hardest, but it’s also the most cost-effective way of scaling up. For a closer look at the benefits of performing inference on the edge, take a look at this overview (it centers on mobile, but similar benefits apply).

Check out the performance of our model in the gif below:

So how did we achieve this?

Real-time tracking on GPU

Detection is the first step before we can perform tracking. There are many pre-trained models for object detection, but if you want to run object detection in real-time without much accuracy loss, go for YOLOv3!

To learn more about object detection and how it’s different from tracking, please check out this blog:

A closer look at YOLOv3

YOLOv3 has been trained on the COCO dataset consisting of 80 classes. It uses a single neural network to make predictions (object locations and classes) for an image. The network divides the image into N*N regions and predicts B bounding boxes and probabilities for each region.
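To make the "N*N regions, B boxes per region" idea concrete, here is a small arithmetic sketch of how many candidate boxes the network emits. It assumes a 416x416 input and the three detection scales (strides 32, 16, and 8) with 3 boxes per cell described in the YOLOv3 paper. The function names are ours, for illustration only, and are not part of any YOLO library.

```python
# Rough arithmetic for YOLOv3's output size (a sketch, not the Darknet code).
# Assumptions: 416x416 input, strides 32/16/8, 3 anchor boxes per grid cell.

def yolo_output_shapes(input_size=416, num_classes=80, boxes_per_cell=3,
                       strides=(32, 16, 8)):
    """Return one (N, N, B, 5 + C) shape per detection scale."""
    shapes = []
    for stride in strides:
        n = input_size // stride  # the grid is N x N cells at this scale
        # Each box carries 4 coordinates + 1 objectness score + C class scores.
        shapes.append((n, n, boxes_per_cell, 5 + num_classes))
    return shapes


def total_boxes(shapes):
    """Count every candidate box predicted before thresholding and NMS."""
    return sum(rows * cols * boxes for rows, cols, boxes, _ in shapes)


shapes = yolo_output_shapes()
print(shapes)
print(total_boxes(shapes))
```

Most of these candidates are discarded by confidence thresholding and non-maximum suppression. For this post, only the boxes classified as "person" matter.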

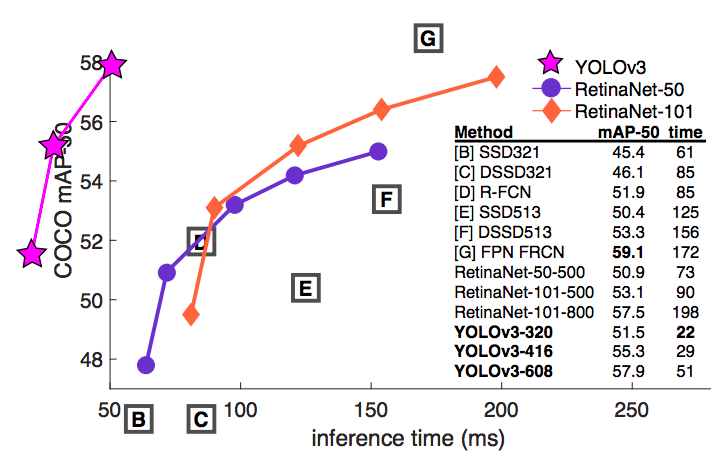

YOLOv3 has become a powerful modeling option because it achieves mean average precision (mAP) comparable to other leading detectors at a much lower inference time. See the chart and table below.

To use YOLOv3, you have to download the pre-trained weight file. For this, do

After detecting people using YOLOv3, we need a tracking algorithm to track these “objects” across the frames. For this, we’ve used a very popular algorithm called SORT (Simple Online and Realtime Tracking).

Using the SORT algorithm

Once we have detections for the frame, a matching process is performed with similar detections from the previous frame.
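The matching step can be sketched as follows. SORT scores each pair of boxes by intersection over union (IoU) and solves the assignment with the Hungarian algorithm. To stay dependency-free, this sketch swaps in a simpler greedy matcher that pairs the highest-IoU boxes first. The function names and the 0.3 threshold are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_match(prev_boxes, curr_boxes, iou_threshold=0.3):
    """Pair previous and current boxes, best overlap first.

    Returns (matches, unmatched_curr): matches is a sorted list of
    (prev_index, curr_index) pairs; unmatched_curr lists current boxes
    that would start new tracks. This is greedy, not Hungarian.
    """
    candidates = sorted(
        ((iou(p, c), i, j)
         for i, p in enumerate(prev_boxes)
         for j, c in enumerate(curr_boxes)),
        reverse=True,
    )
    used_prev, used_curr, matches = set(), set(), []
    for score, i, j in candidates:
        if score < iou_threshold:
            break  # all remaining pairs overlap even less
        if i in used_prev or j in used_curr:
            continue
        used_prev.add(i)
        used_curr.add(j)
        matches.append((i, j))
    unmatched_curr = [j for j in range(len(curr_boxes)) if j not in used_curr]
    return sorted(matches), unmatched_curr


prev_boxes = [(0, 0, 10, 10), (50, 50, 60, 60)]
curr_boxes = [(51, 50, 61, 60), (1, 0, 11, 10), (100, 100, 110, 110)]
matches, new_tracks = greedy_match(prev_boxes, curr_boxes)
print(matches, new_tracks)
```

In this example the two existing people each shift by one pixel and keep their IDs, while the third detection overlaps nothing and would receive a new ID.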

The SORT algorithm keeps tracking each unique object for as long as that object remains in the frame. It defines the state of each track using the detection's box center, box scale, and box aspect ratio, together with their derivatives with respect to time (i.e., velocities).
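As a rough illustration of that state, the sketch below converts a corner-format box into center, scale (area), and aspect ratio, and then advances the state by one frame under a constant-velocity assumption. It shows only the prediction half of a Kalman filter, without the covariance or measurement update. Following the SORT paper, the aspect ratio is held constant, so it has no velocity term. All function names here are hypothetical.

```python
import math


def bbox_to_measurement(box):
    """Convert (x1, y1, x2, y2) to (cx, cy, s, r): center, area, aspect ratio."""
    x1, y1, x2, y2 = box
    w = x2 - x1
    h = y2 - y1
    return (x1 + w / 2.0, y1 + h / 2.0, w * h, w / float(h))


def measurement_to_bbox(cx, cy, s, r):
    """Invert bbox_to_measurement: recover the corners from (cx, cy, s, r)."""
    w = math.sqrt(s * r)  # s = w * h and r = w / h, so s * r = w ** 2
    h = s / w
    return (cx - w / 2.0, cy - h / 2.0, cx + w / 2.0, cy + h / 2.0)


def predict(state, dt=1.0):
    """Advance [cx, cy, s, r, v_cx, v_cy, v_s] by dt frames.

    Constant-velocity motion model: positions and area move by their
    velocities; the aspect ratio and the velocities stay unchanged.
    """
    cx, cy, s, r, v_cx, v_cy, v_s = state
    return [cx + v_cx * dt, cy + v_cy * dt, s + v_s * dt, r, v_cx, v_cy, v_s]


measurement = bbox_to_measurement((10, 20, 50, 100))
state = list(measurement) + [2.0, -1.0, 10.0]  # illustrative velocities
next_state = predict(state)
print(measurement)
print(measurement_to_bbox(*next_state[:4]))
```

The predicted box is what gets compared against the next frame's detections during matching.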

You can find the original implementation of the SORT algorithm here:

YOLOv3 with SORT is able to run in real-time on a GPU. But most edge devices don’t have a GPU. So from here on, we’ll shift to real-time inference on an edge device. The edge device we used was a Raspberry Pi.

Real-time tracking on low-compute edge device

We started by experimenting with the same models and methods on the edge device that we’d used on the GPU. Unsurprisingly, the pipeline ran at a very low FPS. We overcame this bottleneck by reworking the pipeline step by step until it ran in real time on the edge.

1. Firstly, we replaced YOLOv3 with Tiny YOLO. To our surprise, our pedestrian detection showed significantly improved FPS without much loss in accuracy.

2. Secondly, we changed our approach of “when” to call the Tiny YOLO model. Instead of calling it every frame, the model is called only in frames where pedestrian motion is likely.

3. Thirdly, instead of running the model on the entire frame we strategically defined “where” to run this model.

The combination of the above did the trick.
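The post does not spell out how motion was detected or how the regions were chosen, so the following is only one plausible way to implement the "when" and "where" ideas: simple frame differencing inside a fixed region of interest. Frames are modeled as nested lists of grayscale values to keep the sketch dependency-free; in practice they would likely be arrays from a camera library. Every name and threshold below is a hypothetical choice.

```python
def crop_roi(frame, roi):
    """Crop a frame (a list of pixel rows) to roi = (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = roi
    return [row[x1:x2] for row in frame[y1:y2]]


def motion_score(prev, curr, pixel_delta=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_delta."""
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev, curr):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(p - c) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def should_run_detector(prev_frame, curr_frame, roi, min_fraction=0.02):
    """Call the (expensive) detector only if enough pixels moved inside the ROI."""
    score = motion_score(crop_roi(prev_frame, roi), crop_roi(curr_frame, roi))
    return score >= min_fraction


# Two 8x8 frames: the second has a bright 2x2 patch, as if someone walked in.
prev_frame = [[0] * 8 for _ in range(8)]
curr_frame = [row[:] for row in prev_frame]
for y in (2, 3):
    for x in (2, 3):
        curr_frame[y][x] = 200

sidewalk_roi = (0, 0, 4, 4)  # hypothetical region where pedestrians appear
empty_roi = (4, 4, 8, 8)     # hypothetical region with no activity
print(should_run_detector(prev_frame, curr_frame, sidewalk_roi))
print(should_run_detector(prev_frame, curr_frame, empty_roi))
```

When the check passes, only the cropped region would be handed to Tiny YOLO, which reduces both how often the model runs and how many pixels it processes.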

Conclusion

Tracking is a powerful computer vision technique that enables many real-world applications. The ability to run deep learning-based, real-time tracking on the edge allows this capability to be deployed widely, at a lower cost, and without the latency of a round trip to the cloud, which can lead to advanced data collection for all kinds of businesses.

I have my own deep learning consultancy and love to work on interesting problems. I have helped many startups deploy innovative AI-based solutions. Check us out at http://deeplearninganalytics.org/. If you have a project that we can collaborate on, then please contact me through my website.
