To help sift through some of the incredible projects, research, demos, and more in 2019, here’s a look at 17 of the most popular and talked-about projects in machine learning, curated from the r/MachineLearning subreddit. I hope you find something inspiring, educational, or both in this list.

Few-Shot Unsupervised Image-to-Image Translation (913 ⬆️)

From the abstract: “Drawing inspiration from the human capability of picking up the essence of a novel object from a small number of examples and generalizing from there, we seek a few-shot, unsupervised image-to-image translation algorithm that works on previously unseen target classes that are specified, at test time, only by a few example images.

“Our model achieves this few-shot generation capability by coupling an adversarial training scheme with a novel network design. Through extensive experimental validation and comparisons to several baseline methods on benchmark datasets, we verify the effectiveness of the proposed framework.”

Decomposing latent space to generate custom anime girls (521⬆️)

The authors present an artificial neural network that is capable of drawing animes.

The Waifu Vending Machine allows you to select characters that you like and, based on that, you can generate animes that you might like.

A list of the biggest datasets for machine learning (499⬆️)

Here, the author has curated a list of machine learning datasets that one can use for machine learning experimentation.

This kind of resource could definitely reduce the work amount of time involved in looking for datasets online. The datasets are segmented by various tasks/domains, including: CV, NLP, Self-driving, QA, Audio, and Medical. You can also sort by license type.

Dataset: 480,000 Rotten Tomatoes reviews for NLP. Labeled as fresh/rotten (464⬆️)

The author scraped the internet for Rotten Tomatoes reviews, which could be very useful in natural language processing tasks.

The dataset is available on Google Drive.

Using ML to create a cat door that automatically locks when a cat has prey in its mouth (464⬆️)

This post is about a cat door created using machine learning.

The speaker in the video above created a cat door that would automatically lock for 15 minutes if the cat had something in its mouth. This prevented the car from bringing dead animals in the house. He did this by connecting a camera to the cat door and then applied machine learning to check if the cat had something in its mouth.

Neural Point-Based Graphics (415⬆️)

The authors present a new point-based approached for modeling complex scenes. It uses a raw point cloud as the geometric representation of a scene.

It then augments every point with a neural descriptor that can be learned. The neural descriptor encodes local geometry and appearance. New views of scenes are obtained by passing the rasterizations of a point cloud from new viewpoints through a deep rendering network.

AdaBound: An optimizer that trains as fast as Adam and as good as SGD (ICLR 2019), with A PyTorch Implementation (402⬆️)

AdaBound is an optimizer that aims for faster training speed and performance on unseen data. It has a ready PyTorch implementation

AdaBound behaves like Adam at the beginning of model training and transforms into SGD towards the end.

Facebook, Carnegie Mellon build first AI that beats pros in 6-player poker (390⬆️)

From the post on Facebook Research: “Pluribus is the first AI bot capable of beating human experts in six-player no-limit Hold’em, the most widely-played poker format in the world. This is the first time an AI bot has beaten top human players in a complex game with more than two players or two teams.

“Pluribus succeeds because it can very efficiently handle the challenges of a game with both hidden information and more than two players. It uses self-play to teach itself how to win, with no examples or guidance on strategy.”

NumPy implementations of various ML models (388⬆️)

From the project page: “numpy-ml is a growing collection of machine learning models, algorithms, and tools written exclusively in NumPy and the Python standard library.”

Code available for the model listed below.

PyTorch implementation of 17 Deep RL algorithms(388⬆️)

The author has curated PyTorch implementations of 17 deep reinforcement learning algorithms.

Some of the implementation included are DQN, DQN-HER, Double DQN, REINFORCE, DDPG, DDPG-HER, PPO, SAC, SAC Discrete, A3C, A2C.

1 million AI generated fake faces for download(373⬆️)

The author generated 1 million faces with NVIDIA’s StyleGAN.

As you can see, the images look exactly like those of real people.

Neural network racing cars around a track (358⬆️)

From the author: “Teaching a neural network to drive a car. It’s a simple network with a fixed number of hidden nodes (no NEAT), and no bias. Yet it manages to drive the cars fast and safe after just a few generations.

“Population is 650. The network evolves through random mutation (no cross-breeding). Fitness evaluation is currently done manually as explained in the video.”

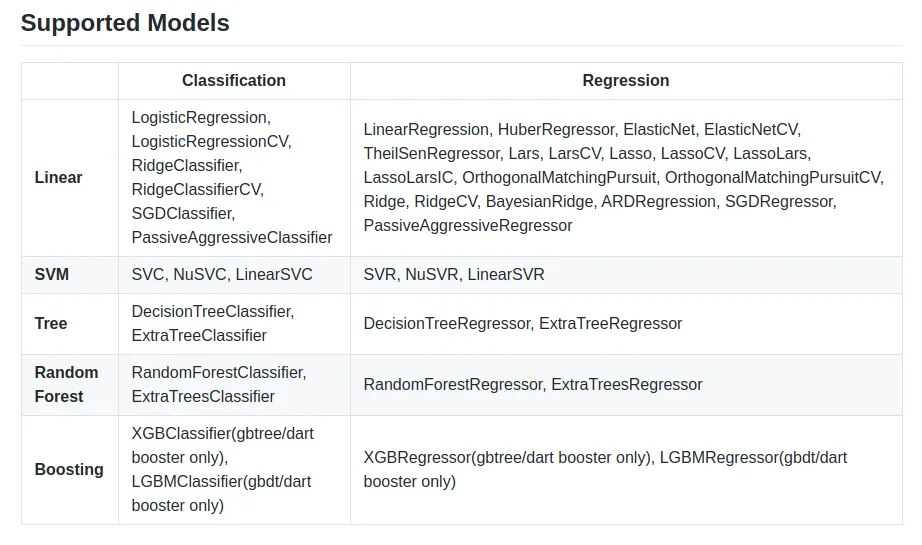

A simple library which turns ML models into native code (Python/C/Java) (345⬆️)

From the repo: “m2cgen (Model 2 Code Generator) — is a lightweight library which provides an easy way to transpile trained statistical models into a native code (Python, C, Java, Go, JavaScript, Visual Basic, C#).”

The models that are currently supported are as follows:

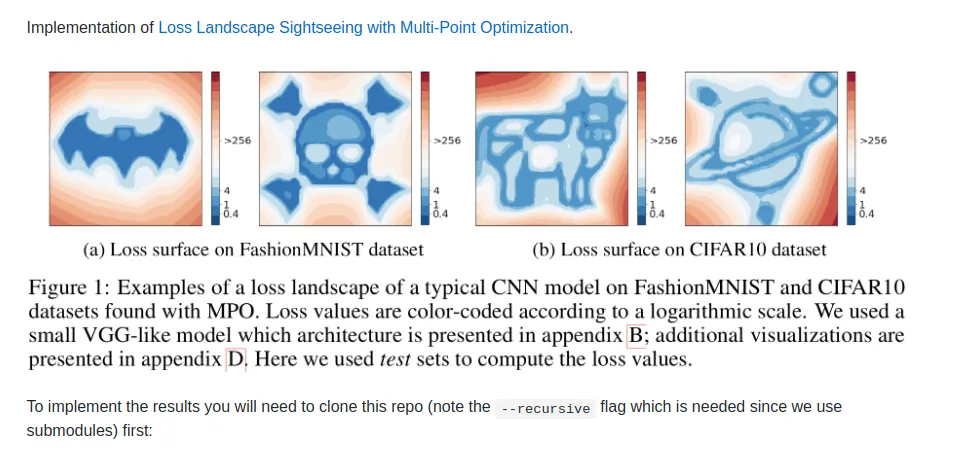

Exploring the loss landscape of your neural network (339⬆️)

From the author’s post: “The post is about finding different patterns in the loss surface of neural networks. Usually, a landscape around a minimum looks like a pit with random hills and mountains surrounding it, but there exist more meaningful ones, like in the picture below.

“We have discovered that you can find a minimum with (almost) any landscape you like. An interesting thing is that the found landscape pattern remains valid even for a test set, i.e. it is a property that (most likely) remains valid for the whole data distribution.”

OpenAI’s GPT-2-based Reddit Bot(343⬆️)

The author built a Reddit bot powered by OpenAI’s GPT-2.

The bot can be used by replying to any comment with “gpt-2 finish this”.

The bot’s code can be found in the repo below.

Super SloMo: A CNN to convert any video to a slomo video(332⬆️)

The author implemented the paper below in PyTorch.

His code implementation can be seen below.

A library of pretrained models for NLP: Bert, GPT, GPT-2, Transformer-XL, XLNet, XLM(306⬆️)

This is an open-source library of pre-trained transformer models for NLP. It has six architectures, namely:

- Google’s BERT

- OpenAI’s GPT & GPT-2

- Google/CMU’s Transformer-XL & XLNet

- Facebook’s XLM

The library has 27 pre-trained model weights for these architectures.

Comments 0 Responses